PubMedVision Medical Multimodal Evaluation Dataset

License: Apache 2.0

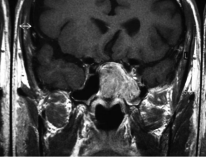

PubMedVision is a dataset for assessing medical multimodal capabilities, released in 2024 by the Shenzhen Research Institute of Big Data, The Chinese University of Hong Kong, Shenzhen, and the National Institute of Healthcare Big Data (Shenzhen). It accompanies the paper "HuatuoGPT-Vision, Towards Injecting Medical Visual Knowledge into Multimodal LLMs at Scale". The dataset aims to provide standardized resources for testing multimodal large language models (MLLMs) on medical vision-text understanding tasks, measuring how well they integrate medical visual knowledge and reason in the medical domain.

This dataset contains approximately 1.3 million medical visual question answering (VQA) examples: 647,031 alignment VQA examples and another 647,031 instruction-tuning VQA examples. The data is built from 914,960 carefully curated medical images and their accompanying context (such as captions and in-text references), covering a variety of medical imaging modalities and anatomical regions. Each example pairs an image with explanatory text from a medical paper, from which a multimodal large language model (such as GPT-4V) generates the corresponding image description, question, and answer.
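To make the composition above concrete, here is a minimal sketch of what one example might hold and how the two subsets add up to roughly 1.3 million. The record class and its field names (`PubMedVisionRecord`, `image_path`, `context`, `split`, and so on) are hypothetical and chosen for illustration; they are not the schema of the released files. Only the counts come from the description.

```python
from dataclasses import dataclass

# Subset sizes as reported in the dataset description.
ALIGNMENT_VQA = 647_031
INSTRUCTION_VQA = 647_031
NUM_IMAGES = 914_960


@dataclass
class PubMedVisionRecord:
    """Hypothetical record layout; field names are illustrative, not the released schema."""
    image_path: str   # a medical image taken from a paper
    context: str      # accompanying text, e.g. the caption and in-text references
    description: str  # image description generated by an MLLM such as GPT-4V
    question: str     # generated question about the image
    answer: str       # generated answer
    split: str        # "alignment" or "instruction" (hypothetical labels)


def total_examples() -> int:
    # The two VQA subsets are the same size, so the total is twice one subset.
    return ALIGNMENT_VQA + INSTRUCTION_VQA


if __name__ == "__main__":
    print(total_examples())                     # 1294062
    print(round(total_examples() / 1e6, 1))     # 1.3 (million)
```

Summing the two subsets gives 1,294,062 examples, which rounds to the "approximately 1.3 million" figure quoted above.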