Ditto-1M Instruction-Driven Video Editing Dataset

License: Non-Commercial

Ditto-1M is an instruction-driven video editing dataset released in 2025 by the Hong Kong University of Science and Technology, Ant Group, Zhejiang University, and other institutions. It was introduced in the paper "Scaling Instruction-Based Video Editing with a High-Quality Synthetic Dataset". The dataset aims to advance video editing models driven by natural-language instructions: its large-scale, high-quality synthetic samples are intended to improve a model's understanding of complex instructions and the accuracy of the edited videos it generates.

This dataset contains approximately 1,000,000 high-fidelity video editing triples, each consisting of a source video, an editing instruction, and the edited video. Videos average 101 frames at a resolution of 1,280×720. The editing tasks fall into three categories:

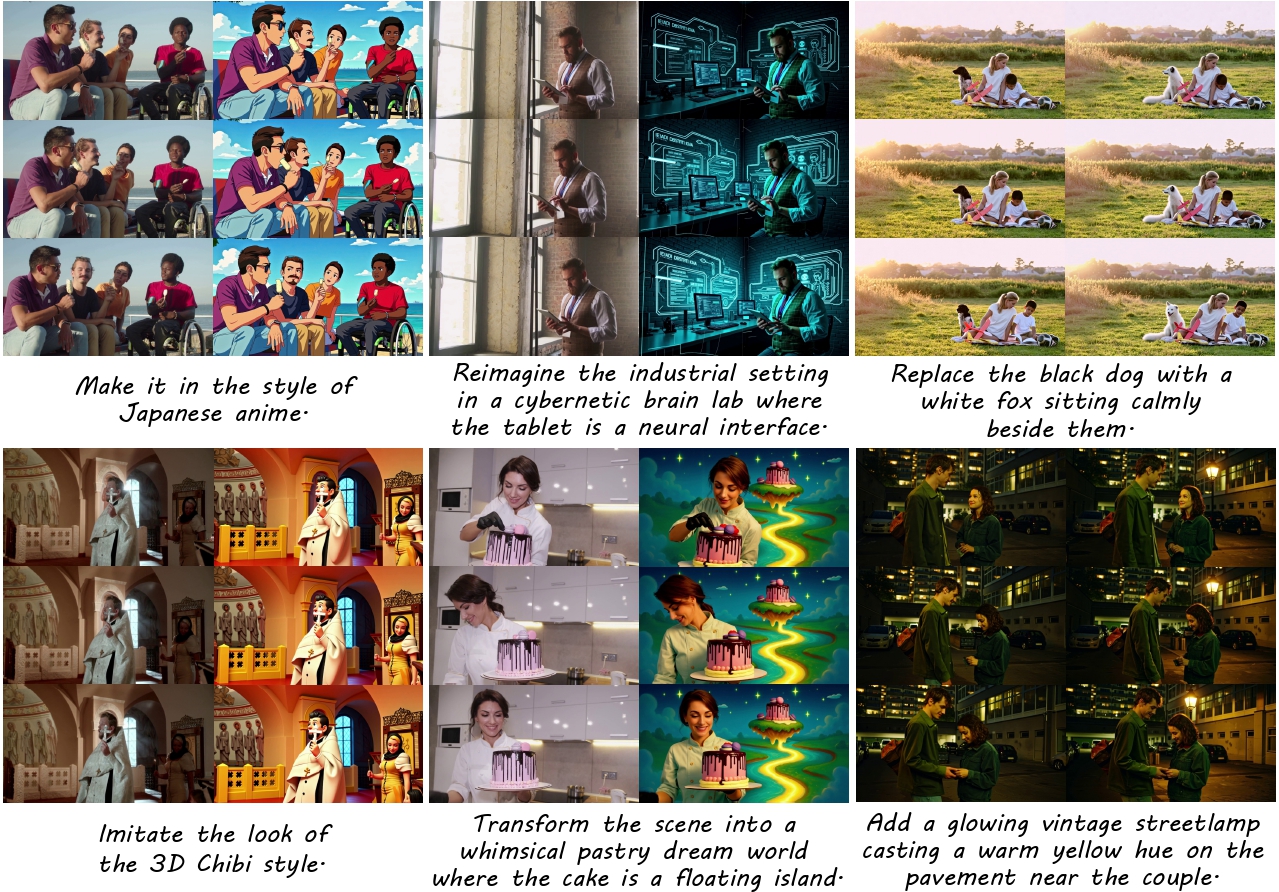

- Global style transfer: artistic style changes, color grading, visual effects, etc.

- Global freeform editing: complex scene modifications, environmental changes, creative transformations, etc.

- Local editing: precise object modifications, attribute changes, local adjustments, etc.
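
The triple structure and the three categories described above can be sketched as a small Python record type. This is only an illustrative sketch: the class names, field names, file paths, and the example instruction are hypothetical and do not reflect the dataset's actual file layout or schema. The only figures taken from the description are the approximate triple count, the average frame count, and the resolution.

```python
from dataclasses import dataclass
from enum import Enum


class EditCategory(Enum):
    """The three editing categories named in the description."""
    GLOBAL_STYLE_TRANSFER = "global_style_transfer"
    GLOBAL_FREEFORM_EDITING = "global_freeform_editing"
    LOCAL_EDITING = "local_editing"


@dataclass(frozen=True)
class EditTriple:
    """One sample: source video, editing instruction, edited video.

    Field names are hypothetical, chosen for illustration only.
    """
    source_video: str      # path to the source clip (assumed layout)
    instruction: str       # natural-language editing instruction
    edited_video: str      # path to the edited clip (assumed layout)
    category: EditCategory


# Figures quoted in the description (approximate count, average length).
NUM_TRIPLES = 1_000_000
AVG_FRAMES = 101
WIDTH, HEIGHT = 1280, 720


def approx_total_frames(num_triples: int = NUM_TRIPLES,
                        avg_frames: int = AVG_FRAMES) -> int:
    """Rough frame count for one side (source or edited) of the dataset."""
    return num_triples * avg_frames


# A made-up example record; paths and instruction are placeholders.
example = EditTriple(
    source_video="source/000001.mp4",
    instruction="Make the scene look like a watercolor painting.",
    edited_video="edited/000001.mp4",
    category=EditCategory.GLOBAL_STYLE_TRANSFER,
)
```

With the quoted figures, `approx_total_frames()` gives roughly 101 million frames per side, which conveys the scale a training pipeline would need to handle.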
