Command Palette

Search for a command to run...

GroundingME Complex Scene Understanding Evaluation Dataset

Date

Paper URL

License

Other

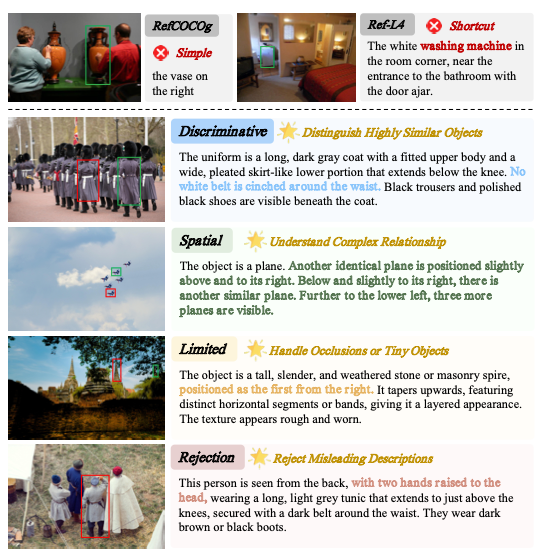

GroundingME is a visual reference evaluation dataset for multimodal large language models (MLLMs), released in 2025 by Tsinghua University in collaboration with Xiaomi and the University of Hong Kong, among other institutions. Related research papers include... GroundingME: Exposing the Visual Grounding Gap in MLLMs through Multi-Dimensional EvaluationThe aim is to systematically evaluate the model's ability to accurately map natural language to visual targets in real-world complex scenarios, with particular attention to understanding and safety performance in situations involving ambiguous references, complex spatial relationships, small targets, occlusion, and unreferentiality.

This dataset contains 1,005 evaluation samples. The images are sourced from two high-quality datasets, SA-1B and HR-Bench, and only the original images were used to construct the tasks to avoid data contamination. The samples cover four primary task categories: discriminative reference (204 samples, 20.31 TP3T), spatial relationship understanding (300 samples, 29.91 TP3T), restricted visibility scenes (300 samples, 29.91 TP3T), and non-referential rejection task (201 samples, 20.01 TP3T), further subdivided into 12 secondary sub-tasks with a balanced overall distribution. The dataset involves 241 real-world object classes. There are a large number of objects of the same class in a single image, and object instances usually occupy a small proportion of the image. The length of the language descriptions is significantly longer than existing reference datasets, significantly increasing the difficulty of visual reference tasks from multiple dimensions.

Build AI with AI

From idea to launch — accelerate your AI development with free AI co-coding, out-of-the-box environment and best price of GPUs.