AI Paper Weekly Report | Red Team Testing of Language Models / Multi-View 3D Point Tracking Methods / Protein Representation Learning Framework / New Cryptography Vulnerability Detection Framework...

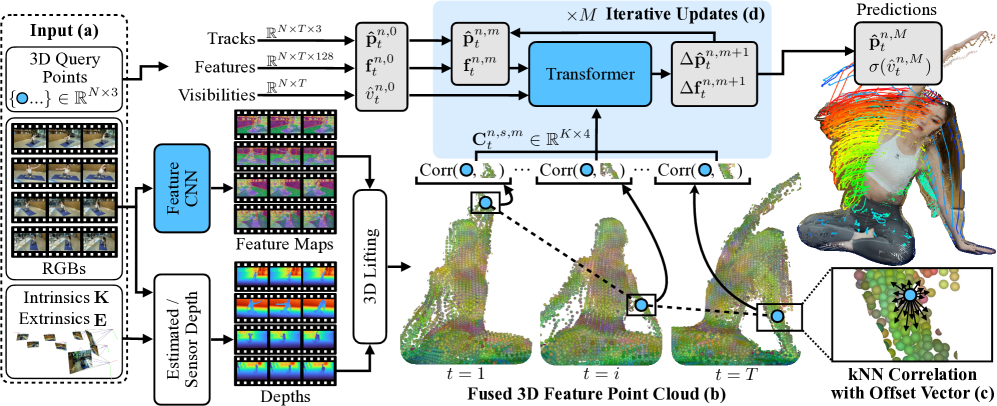

In recent years, several methods have attempted 3D point tracking from monocular videos. However, 3D information is hard to estimate accurately in challenging scenarios such as occlusion and complex motion, so these methods still fall short of the precision and robustness that practical applications require.

To address this, ETH Zurich and Carnegie Mellon University jointly proposed the first data-driven multi-view 3D point tracking method, which tracks arbitrary points in dynamic scenes using multiple camera views. Its feed-forward model directly predicts 3D correspondences using only a small number of cameras, enabling robust and accurate online tracking.

Paper link: https://go.hyper.ai/2BSGR

Latest AI Papers: https://go.hyper.ai/hzChC

To help more users keep up with the latest academic developments in artificial intelligence, HyperAI's official website (hyper.ai) has launched a "Latest Papers" section, which is updated daily with cutting-edge AI research papers. Here are 5 popular AI papers we recommend. We have also prepared mind maps summarizing the structure of the papers. Let's take a quick look at this week's cutting-edge AI achievements ⬇️

This Week's Paper Recommendations

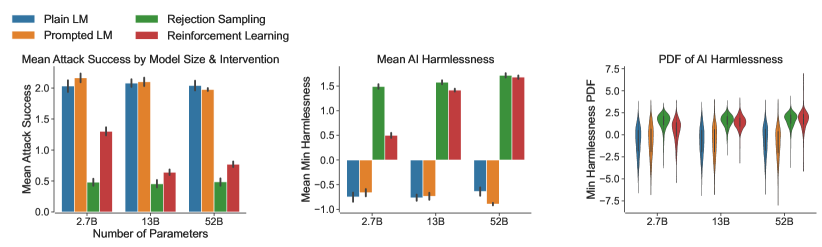

1. Red Teaming Language Models to Reduce Harms: Methods, Scaling Behaviors, and Lessons Learned

This paper describes early work on red teaming language models, with the goal of identifying, measuring, and mitigating potentially harmful model outputs. The study finds that RLHF models become increasingly difficult to red team as they scale, while the other model types studied show no significant trend with scale. The authors also release a dataset of 38,961 red team attacks and describe the instructions, processes, statistical methods, and sources of uncertainty involved in their red teaming.

Paper link: https://go.hyper.ai/j2U2u

2. Multi-View 3D Point Tracking

This paper proposes the first data-driven multi-view 3D point tracking method, designed to track arbitrary points in dynamic scenes using multiple camera views. The method generalizes well across diverse camera setups of 1 to 8 views, varying vantage points, and video lengths of 24 to 150 frames.

Paper link: https://go.hyper.ai/2BSGR

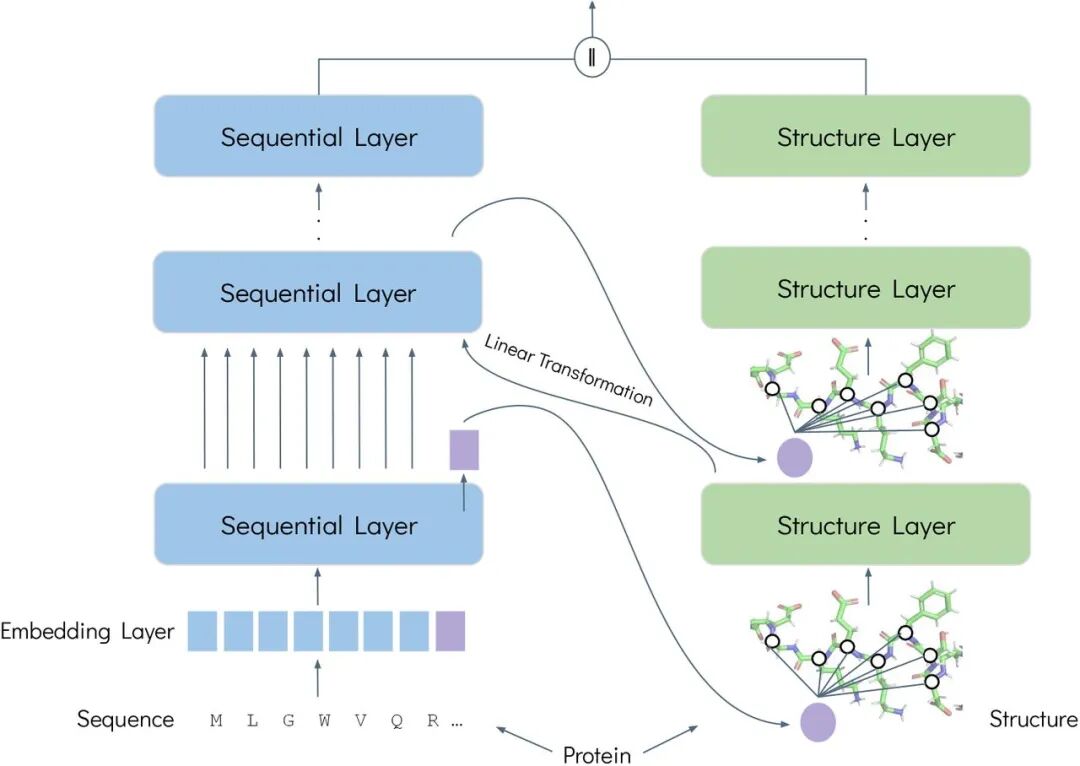

3. FusionProt: Fusing Sequence and Structural Information for Unified Protein Representation Learning

This paper proposes FusionProt, a novel protein representation learning framework that learns a unified representation of a protein's one-dimensional sequence and three-dimensional structure. FusionProt introduces a learnable fusion token that serves as an adaptive bridge, enabling iterative information exchange between a protein language model and the protein's three-dimensional structure graph.

Paper link: https://go.hyper.ai/rjbaU

4. Why Language Models Hallucinate

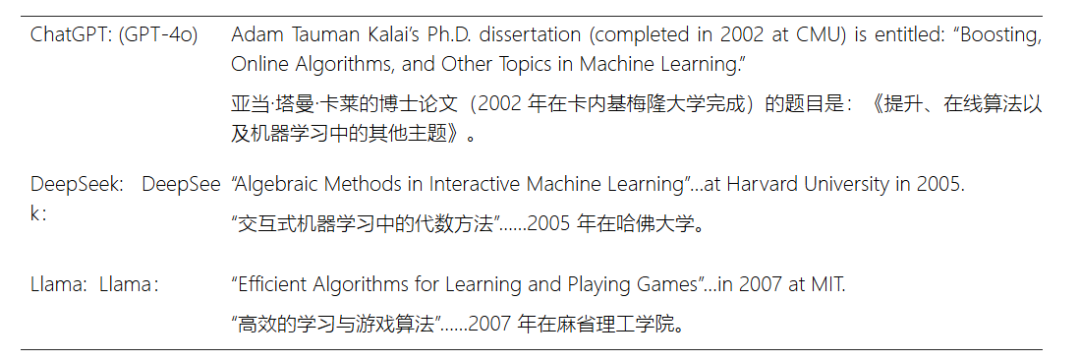

This paper argues that language models hallucinate because their training and evaluation procedures reward guessing over acknowledging uncertainty. It also analyzes the statistical roots of hallucinations in the modern training pipeline. Hallucinations persist because most evaluations optimize language models to be "good test takers," and guessing when uncertain improves test performance. Because uncertain answers are systematically penalized, the authors suggest revising the scoring of the misaligned mainstream benchmarks rather than introducing additional hallucination evaluations.
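The incentive described above can be illustrated with a little arithmetic. The following is a minimal sketch, not code from the paper: the function names and the `wrong_penalty` parameter are hypothetical, and the scoring rule (1 point for a correct answer, 0 for abstaining, an optional deduction for a wrong answer) is an assumption chosen for illustration.

```python
def expected_score(p_correct: float, wrong_penalty: float = 0.0) -> float:
    """Expected score of answering when the answer is right with probability p_correct.

    Assumed scoring rule: a correct answer earns 1 point, a wrong answer
    loses wrong_penalty points, and abstaining always earns 0.
    """
    return p_correct * 1.0 - (1.0 - p_correct) * wrong_penalty


def should_guess(p_correct: float, wrong_penalty: float = 0.0) -> bool:
    """Guessing is worthwhile only if it beats the 0 points earned by abstaining."""
    return expected_score(p_correct, wrong_penalty) > 0.0


# Binary grading (no penalty): even a 10%-confident guess beats abstaining.
print(should_guess(0.10))
# Hypothetical penalty of 1 point per wrong answer: guessing pays only above 50% confidence.
print(should_guess(0.10, wrong_penalty=1.0))
print(should_guess(0.60, wrong_penalty=1.0))
```

Under binary grading, any nonzero chance of being right makes guessing the better strategy, which is why a model tuned for such benchmarks has little reason to say "I don't know." Once wrong answers cost something, abstaining becomes the better choice at low confidence.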

Paper link: https://go.hyper.ai/7TIjt

5. CryptoScope: Utilizing Large Language Models for Automated Cryptographic Logic Vulnerability Detection

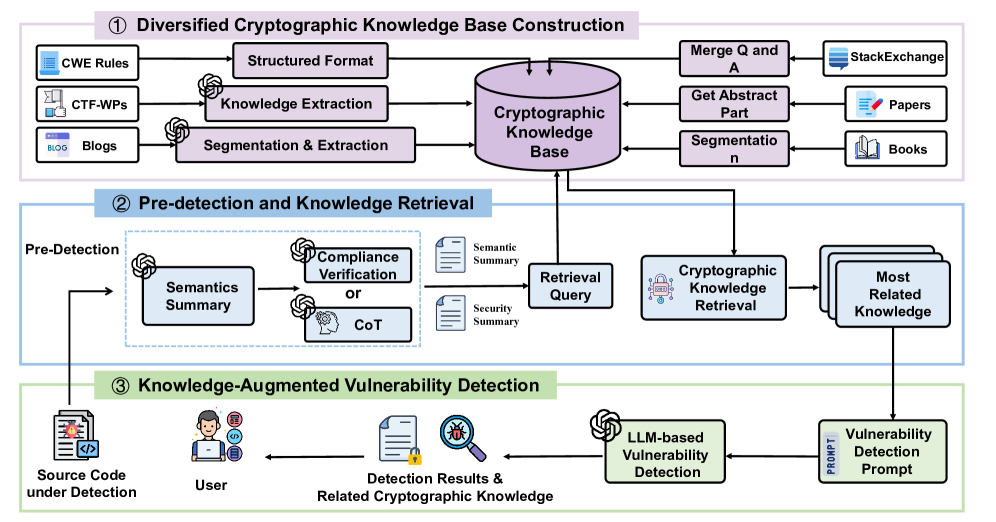

This paper proposes CryptoScope, a new LLM-based framework for automated cryptographic vulnerability detection. It combines chain-of-thought (CoT) prompting with Retrieval-Augmented Generation (RAG), guided by a carefully curated cryptography knowledge base containing more than 12,000 entries.
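To give a rough idea of how retrieval and chain-of-thought prompting can fit together, here is a toy sketch. It is not CryptoScope's implementation: the three-entry knowledge base, the keyword-overlap retrieval, the prompt wording, and all function names are assumptions made for illustration, and no LLM is actually called.

```python
import re

# Hypothetical three-entry knowledge base (the paper's real one has over 12,000 entries).
KNOWLEDGE_BASE = [
    "ECB mode encrypts identical plaintext blocks to identical ciphertext blocks.",
    "Reusing a nonce with a stream cipher leaks the XOR of the plaintexts.",
    "MD5 is not collision resistant and should not be used for signatures.",
]


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, entries, k=1):
    """Return the k entries sharing the most tokens with the query (toy retrieval)."""
    query_tokens = tokenize(query)
    ranked = sorted(entries, key=lambda e: len(query_tokens & tokenize(e)), reverse=True)
    return ranked[:k]


def build_prompt(code_snippet, entries, k=1):
    """Combine retrieved notes with a step-by-step reasoning instruction."""
    context = "\n".join(f"- {e}" for e in retrieve(code_snippet, entries, k))
    return (
        "Relevant cryptography notes:\n" + context + "\n\n"
        "Code under review:\n" + code_snippet + "\n\n"
        "Reason step by step about whether the code has a cryptographic flaw, "
        "then state your conclusion."
    )


# The snippet is only a string to be analyzed; it is never executed.
snippet = "cipher = AES.new(key, AES.MODE_ECB)"
print(build_prompt(snippet, KNOWLEDGE_BASE))
```

A real system would likely replace the keyword overlap with embedding-based search and send the assembled prompt to a language model. The sketch only shows the general shape: retrieve relevant knowledge first, then ask for explicit reasoning.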

Paper link: https://go.hyper.ai/qkboy

That wraps up this week’s paper recommendations. For more cutting-edge AI research papers, please visit the “Latest Papers” section of hyper.ai’s official website.

We also welcome research teams to submit high-quality results and papers to us. If you are interested, add NeuroStar on WeChat (WeChat ID: Hyperai01).

See you next week!