Command Palette

Search for a command to run...

IJCAI 2019 Papers: Chinese Teams Account for 38%, Peking University and Nanjing University Are on the List

IJCAI 2019, the top AI conference, came to a successful conclusion on August 16. During the seven-day technical event, participants learned about the application scenarios of AI technology in various fields in the workshop, listened to the keynote speeches of AI predecessors, and had the opportunity to learn about the history of AI development and the latest progress and trends in the roundtable. In addition, the papers included in the conference are undoubtedly the most popular content. We have specially sorted out a number of selected papers by field to share with you.

Top AI Conferences IJCAI 2019 It was held in Macau, China from August 10th to August 16th and concluded successfully.

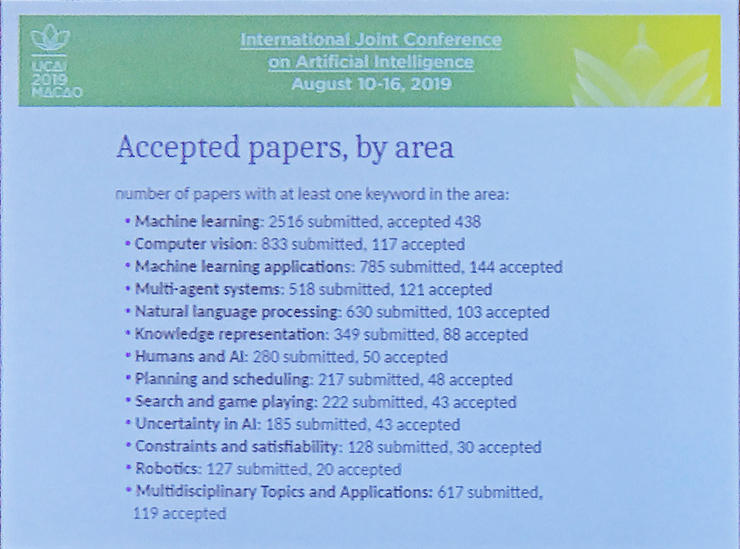

At the opening ceremony on August 13, the organizers reviewed the papers collected at the conference. Thomas Eiter, the chairman of the conference, announced the following information: This year's IJCAI received a total of 4,752 paper submissions, and the final number of collected papers reached a record high. 850 articles,The acceptance rate is 17.9%.

Then, Sarit Kraus, the chair of the conference program committee, gave a detailed explanation of the papers. Compared with the 3,470 papers included last year, this year's growth rate is 37%. Among the 850 papers included,There are 327 articles from China, accounting for 38%.

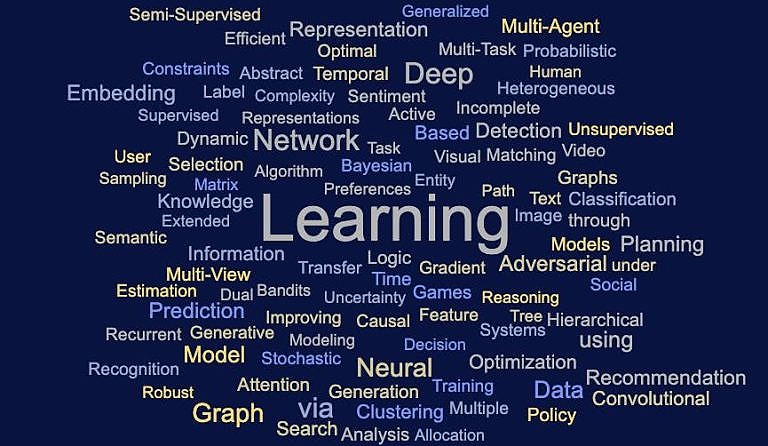

On the topic of the paper,Machine learning is still the hottest field.The number of included articles is 438, more than half,In addition, the field with the largest number of papersThey are computer vision, machine learning applications, and natural language processing.

This year, a total of 73 area chairs, 740 senior program committee members, and 2,696 program committee members participated in the paper review. What are the outstanding papers reviewed by them?

One of a kind award-winning paper

IJCAI 2019 selected one paper from 850 papersOutstanding Papers(Distinguished Paper):

Abstract: The authors studied aClassification problems based on contrast conditions.This problem is generally like this: given a set, we can only get triple information, which is the comparison of three targets. For example, if the distance from x_i to x_j is smaller than the distance from x_i to x_k, how can we classify x_i? In this paper, the researchers proposed a method called TripletBoostThe main idea of the paper is that the distance information brought by the triples can be input into a weak classifier, which can be gradually improved to a strong classifier in a serial manner.

Such methods includeTwo advantages:First of all, this method can be applied in various matrix spaces. In addition, this method can solve the triple information that can only be obtained passively or is noisy in many fields. The researchers theoretically verified the feasibility of this method in the paper and proposed a lower limit on the number of triplets that need to be obtained. Through experiments, they said that this method is better than existing methods and can better resist noise.

Obtain IJCAI-JAIR Best PaperThe Best Paper is:

Note: This award is given to papers published in JAIR within the past five years.

Abstract: The paper states that the most typical NP-complete problem, Boolean satisfiability (SAT) and its PSPACE-complete generalization, Quantified Boolean Satisfiability (QAT), are the core of the declarative programming paradigm.It can efficiently solve various real-world instances of computationally complex problems.Success in this field is achieved through SAT and QSAT The paper is based on a breakthrough in the practical implementation of decision procedures, namely SAT and QSAT solvers. In this paper, the researchers develop and analyze a clause elimination procedure for pre- and post-processing. The clause elimination procedure forms a set of (P)CNF formal simplification techniques, so that clauses with certain redundant properties can be removed in polynomial time while maintaining the satisfiability of the formula.

In addition to these award-winning papers, as one of the hottest top conferences in the field of artificial intelligence, IJCAI has ranked among the top conferences in terms of the number of paper submissions and acceptances over the years, and there are many excellent papers produced there.

Therefore, Super Neuro from this IJCAI conferenceHot 3In each issue, we select one or two selected papers and give a brief introduction, so as to get a glimpse of the overall picture of IJCAI.

Hottest Field Hot 1: Machine Learning

Selected Papers in Machine Learning 1

Abstract: The open-ended video question answering task is to automatically generate text answers from reference video content based on given questions.

Currently, existing methods often adopt multimodal recurrent encoder-decoder networks, but they lack long-term dependency modeling, which makes them ineffective in long video question answering.

To solve this problem, the authors proposed aFast Hierarchical Convolutional Self-Attention Encoder-Decoder Network (HCSA) .Using a self-attention encoder with layered convolutions,Efficiently model long video content.

HCSA builds a hierarchical structure of video sequences and captures question-aware long-term dependencies from the video context. In addition, a multi-scale attention decoder is designed that fuses multiple layers of representation to generate answers, avoiding information loss in the top encoding layers.

Experimental results show that this method performs well on multiple datasets.

Selected Papers in Machine Learning 2

Abstract: The application of machine learning is often limited by the amount of effective labeled data, and semi-supervised learning can effectively solve this problem.

This paper proposes a simple and effective semi-supervised learning algorithm——Interpolation consistency training(Interpolation Consistency Training, ICT).

ICT makes the interpolated predictions of unlabeled points consistent with the interpolated predictions of these points.In classification problems, ICT moves the decision boundary to a low-density region of the data distribution. It uses almost no additional computation and does not require training a generative model, and even without extensive hyperparameter tuning, it performs well when applied to standard neural network architectures on the CIFAR-10 and SVHN benchmark datasets.Achieved state-of-the-art performance.

Hottest Field Hot 2: Computer Vision

Selected Papers in Computer Vision 1

Abstract: Features from multiple scales can greatly help the semantic edge detection task, however, prevalent semantic edge detection methods apply a fixed weight fusion strategy, where images with different semantics are forced to share the same weights, leading to universal fusion weights for all images and locations regardless of their different semantics or local contexts.

This work proposes aNovel dynamic feature fusion strategy,Adaptively assign different fusion weights to different input images and locations. This is achieved by the proposed weight learner to infer appropriate fusion weights for a specific input with multi-level features at each location of the feature map.

In this way, the heterogeneity of contributions made by different locations of the feature maps and input images can be better accounted for, thus facilitating the generation ofMore accurate and sharper edge predictions.

Selected Papers in Computer Vision 2

Abstract: Monocular depth estimation is an important task in scene understanding. Objects and their underlying structures in complex scenes are crucial for accurately recovering depth maps with good visual quality. Global structures reflect scene layout, while local structures reflect shape details. CNN-based depth estimation methods developed in recent years have significantly improved the performance of depth estimation. However, few of them consider multi-scale structures in complex scenes.

This paper proposes aStructure-aware residual pyramid network for deep precision prediction using multi-scale structures(SARPN), a residual pyramid decoder (RPD) is also proposed, which represents the global scene structure in the upper layer to represent the layout, and the local structure in the lower layer to represent the shape details; at each layer, a residual refinement module (RRM) of the predicted residual map is proposed to gradually add finer structures on the coarse structure predicted in the upper layer; in order to make full use of the features of multi-scale images, an adaptive dense feature fusion (ADFF) module is proposed, which adaptively fuses the effective features of each scale for reasoning about the structure of each scale. Experimental results on the NYU-Depth v2 dataset show that the method proposed in this paper has achieved state-of-the-art performance in both qualitative and quantitative evaluation.The precision reached 0.749, the recall rate reached 0.554, and the F1 score reached 0.630.

Hottest Field Hot 3: Natural Language Processing (NLP)

NLP Selected Papers 1

Abstract: Recurrent neural networks (RNNs) are widely used in the field of natural language processing (NLP), including text classification, question answering, and machine translation. Usually, RNNs can only review from beginning to end and have poor ability to process long texts. In text classification tasks, a large number of words in long documents are irrelevant and can be skipped. In response to this situation, the author of this paper proposesEnhanced LSTM:Leap-LSTM.

Leap-LSTM can read textJump between words dynamically.In each step, Leap-LSTM uses several feature encoders to extract information from the previous text, the following text, and the current word, and then decides whether to skip the current word. On five benchmark datasets including AGNews, DBPedia, Yelp F. Yelp P., and Yahoo,The prediction effect of Leap-LSTM is higher than that of standard LSTM, and Leap-LSTM has a higher reading speed.

NLP Selected Papers 2

Abstract: This paper studiesEntity alignment problem based on knowledge graph embedding.Previous works mainly focus on the relational structure of entities, and some further incorporate other types of features, such as attributes, for refinement.

However, a large number of entity features are still not treated equally, which harms the accuracy and robustness of embedding-based entity alignment.

This paper proposes a new framework.It unifies multiple views of entities to learn entity-aligned embeddings.Specifically, this paper uses several combined strategies to embed entities based on views of entity names, relations, and attributes.

In addition, the paper designs some cross-knowledge graph reasoning methods to enhance the alignment between two knowledge graphs. The experiments on real datasets show that the performance of the framework is significantly better than the state-of-the-art embedding-based entity alignment methods. The selected views, cross-knowledge graph reasoning, and combination strategies all contribute to the performance improvement.

-- over--