OminiControl Multifunctional Image Generation and Control

Size: 562.43 MB

1. Tutorial Introduction

OminiControl, released in December 2024 by the xML Lab at the National University of Singapore, is a minimal yet powerful universal control framework for Diffusion Transformer models such as FLUX. Users can build their own OminiControl models by customizing any control task (3D, multi-view, pose guidance, etc.) on top of FLUX models. The related paper is "OminiControl: Minimal and Universal Control for Diffusion Transformer".

Universal control 🌐: A unified control framework that supports both subject-driven control and spatial control (e.g., edge-guided and inpainting generation).

Minimal design 🚀: Injects control signals while preserving the original model structure. Only 0.1% additional parameters are introduced to the base model.
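To put the 0.1% figure in perspective, here is a back-of-the-envelope sketch. The base size of 12 billion parameters is an assumption (roughly the published size of FLUX.1), not a number given in this tutorial, and `added_params` is a hypothetical helper name used only for illustration.

```python
def added_params(base_params: int, fraction: float = 0.001) -> int:
    """Estimate the extra parameters for a given overhead fraction of the base model."""
    return round(base_params * fraction)


# Assumed base size: about 12 billion parameters (not stated in this tutorial).
BASE_PARAMS = 12_000_000_000

extra = added_params(BASE_PARAMS)
print(f"{extra:,} additional parameters (~{extra / 1e6:.0f}M)")
```

Under that assumption, the control-specific weights come to roughly 12 million parameters, which is small next to the base model.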

This tutorial uses the OminiControl universal control framework to implement subject-driven generation and spatial control of images. It runs on a single A6000 GPU.

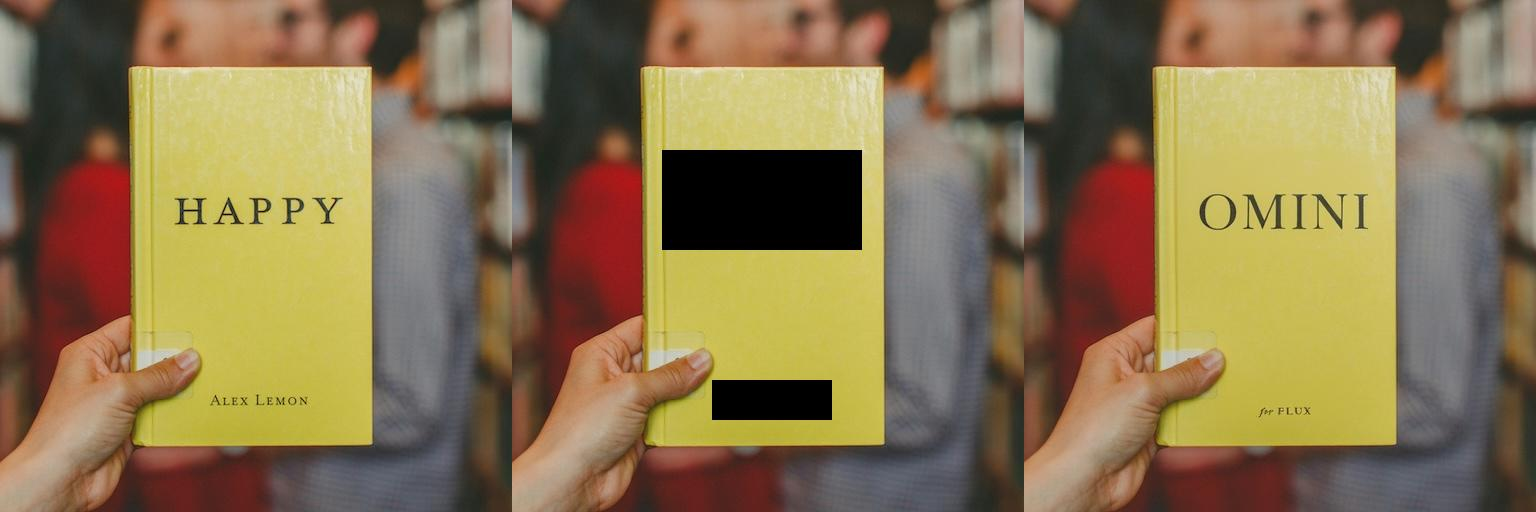

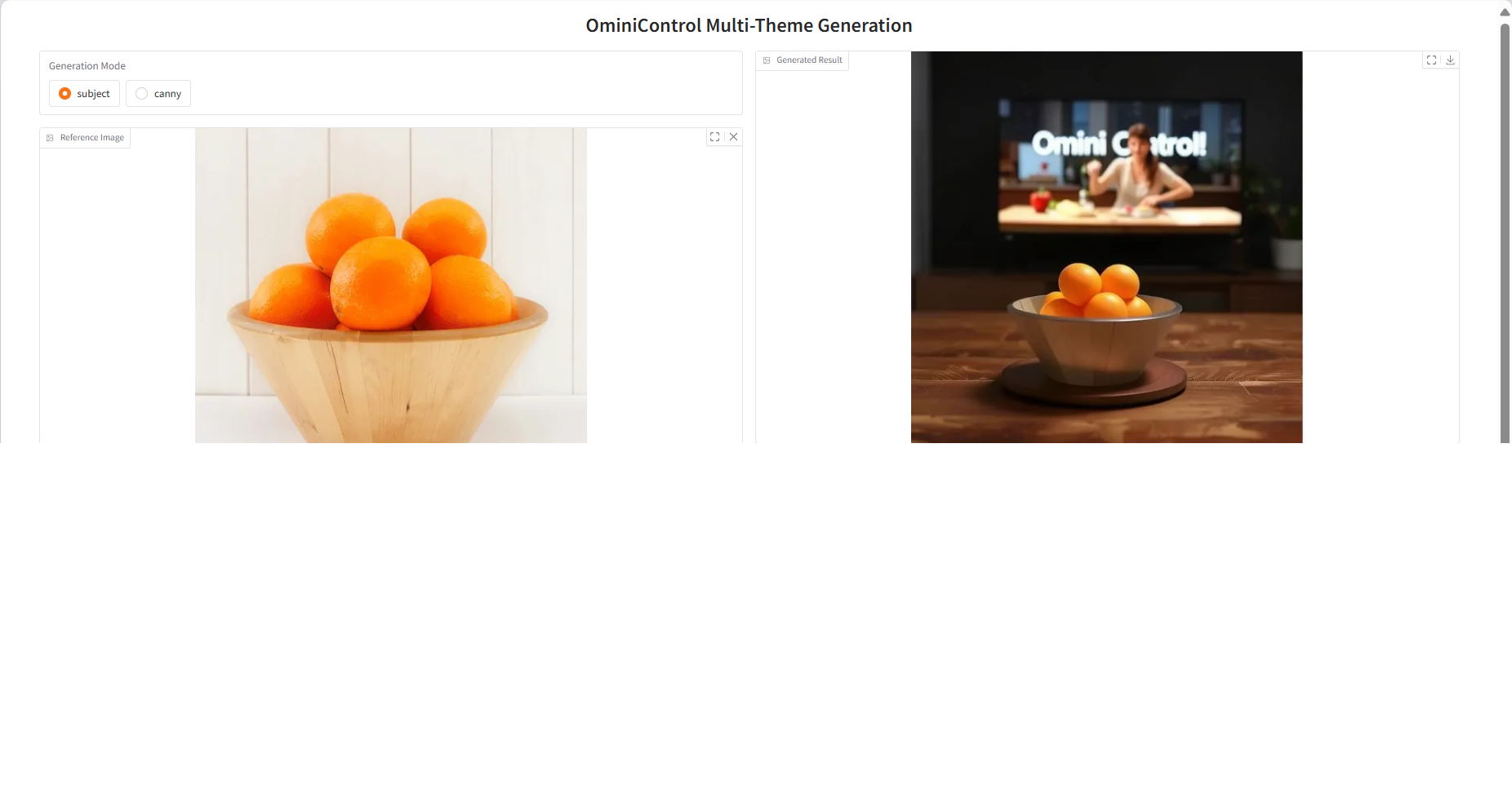

Effect examples

1. Subject-driven generation

Demo (Left: condition image; Right: generated image)

Text prompts

- Prompt 1: A close-up view of the object. It is placed on a wooden table in a dark room. In the background, a television is on and playing a cooking show, with the words "Omini Control!" on the screen.

- Prompt 2: A cinematic-style shot. The object is driving across the lunar surface, carrying a flag with the word "Omini" on it. The Earth looms large in the background.

- Prompt 3: In a Bauhaus-style room, the object is placed on a shiny glass table next to a vase filled with flowers. In the afternoon sun, the shadows of the blinds are cast on the wall.

- Prompt 4: A woman wearing this shirt and a big smile sits under an "Omini" umbrella on the beach, with a surfboard behind her. The orange and purple sunset sky is in the background.

2. Spatial alignment control

Image inpainting (Left: original image; Middle: mask image; Right: inpainting result)

- Prompt: Mona Lisa is wearing a white VR headset with the word "Omini" printed on it.

- Prompt: The yellow book cover has the word "OMINI" printed in large letters, and the text "for FLUX" appears at the bottom.

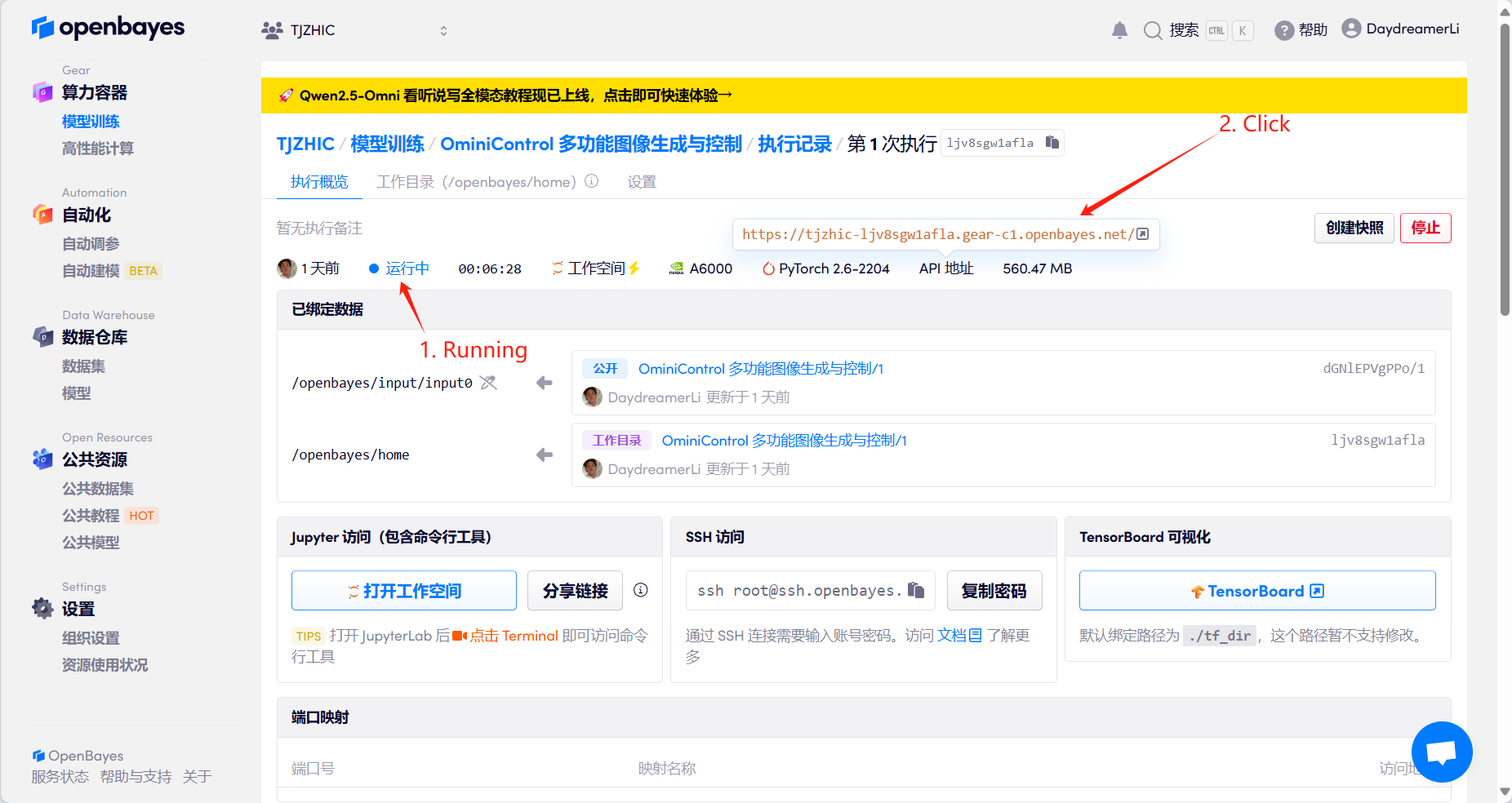

2. Operation steps

If "Model" is not displayed, the model is still initializing. Because the model is large, wait about 1-2 minutes and then refresh the page.
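If you would rather not refresh by hand, the waiting step can be scripted. The following is a minimal sketch using only the Python standard library. The names `wait_until_ready` and `http_probe` are hypothetical, the URL must be replaced with your own API address, and an HTTP 200 response is only an assumed readiness signal: the page may answer before the model itself has finished loading.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def http_probe(url: str) -> bool:
    """Return True if the page answers with HTTP 200 (assumed readiness signal)."""
    try:
        with urllib.request.urlopen(url, timeout=5) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        return False


def wait_until_ready(
    probe: Callable[[], bool],
    timeout_s: float = 180.0,
    interval_s: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Call probe() repeatedly until it returns True or the timeout elapses."""
    deadline = time.monotonic() + timeout_s
    while True:
        if probe():
            return True
        if time.monotonic() >= deadline:
            return False
        sleep(interval_s)


# Example (placeholder address, replace with the API address of your container):
# ready = wait_until_ready(lambda: http_probe("http://<your-api-address>"))
```

The default timeout of 180 seconds is chosen to cover the 1-2 minute initialization window with some margin. Adjust it if your container starts more slowly.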

1. After starting the container, click the API address to open the web interface.

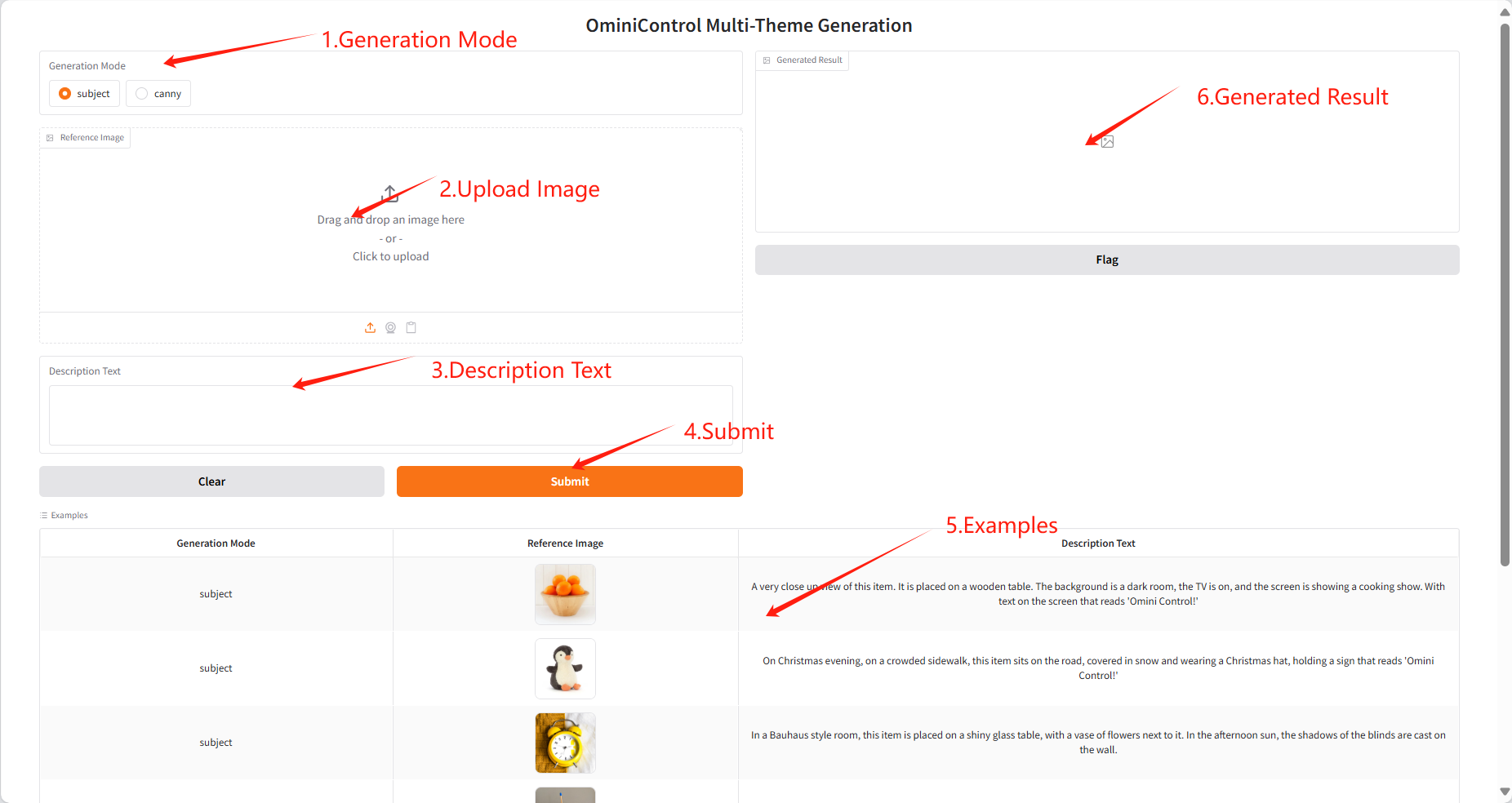

2. On the web page, you can try subject-driven generation (Subject) and spatial control (Spatial).

Note: Switching between the two models takes about 30 to 70 seconds. Please be patient.

Subject-driven generation: The user provides a picture of an object and a text description of the target scene in which the object should appear.

Spatial control: Includes operations such as image inpainting and Canny edge guidance. The user provides a picture and a text description of the desired changes to complete the spatial control of the image.
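For inpainting, the input is essentially the original picture with the region to be redrawn blanked out. The sketch below illustrates that idea on a tiny grayscale image stored as nested lists. It uses no image library, `blank_region` is a hypothetical helper, and filling the hole with black (0) is an assumption about the convention rather than something this tutorial specifies.

```python
from typing import List

Image = List[List[int]]  # rows of grayscale pixel values, 0-255


def blank_region(
    image: Image, left: int, top: int, right: int, bottom: int, fill: int = 0
) -> Image:
    """Return a copy of image with the box [left, right) x [top, bottom) set to fill."""
    out = [row[:] for row in image]  # copy rows so the original stays untouched
    for y in range(max(top, 0), min(bottom, len(out))):
        for x in range(max(left, 0), min(right, len(out[y]))):
            out[y][x] = fill
    return out


# A 4x4 uniform image with the central 2x2 block masked out.
original = [[200] * 4 for _ in range(4)]
masked = blank_region(original, left=1, top=1, right=3, bottom=3)
for row in masked:
    print(row)
```

In the web interface the same role is played by the mask image: the text prompt then describes what should appear inside the blanked area.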

Subject-driven generation effect (Subject)

Spatial control - image inpainting effect (Spatial)

Citation Information

Thanks to GitHub user SuperYang for producing this tutorial. The project citation is as follows:

@article{tan2024ominicontrol,
  title={Ominicontrol: Minimal and universal control for diffusion transformer},
  author={Tan, Zhenxiong and Liu, Songhua and Yang, Xingyi and Xue, Qiaochu and Wang, Xinchao},
  journal={arXiv preprint arXiv:2411.15098},
  volume={3},
  year={2024}
}

