
Hunyuan3D-2.1: A 3D Generative Model Supporting Physically Based Rendering (PBR) Textures

Size: 1.89 GB

License: Other

1. Tutorial Introduction

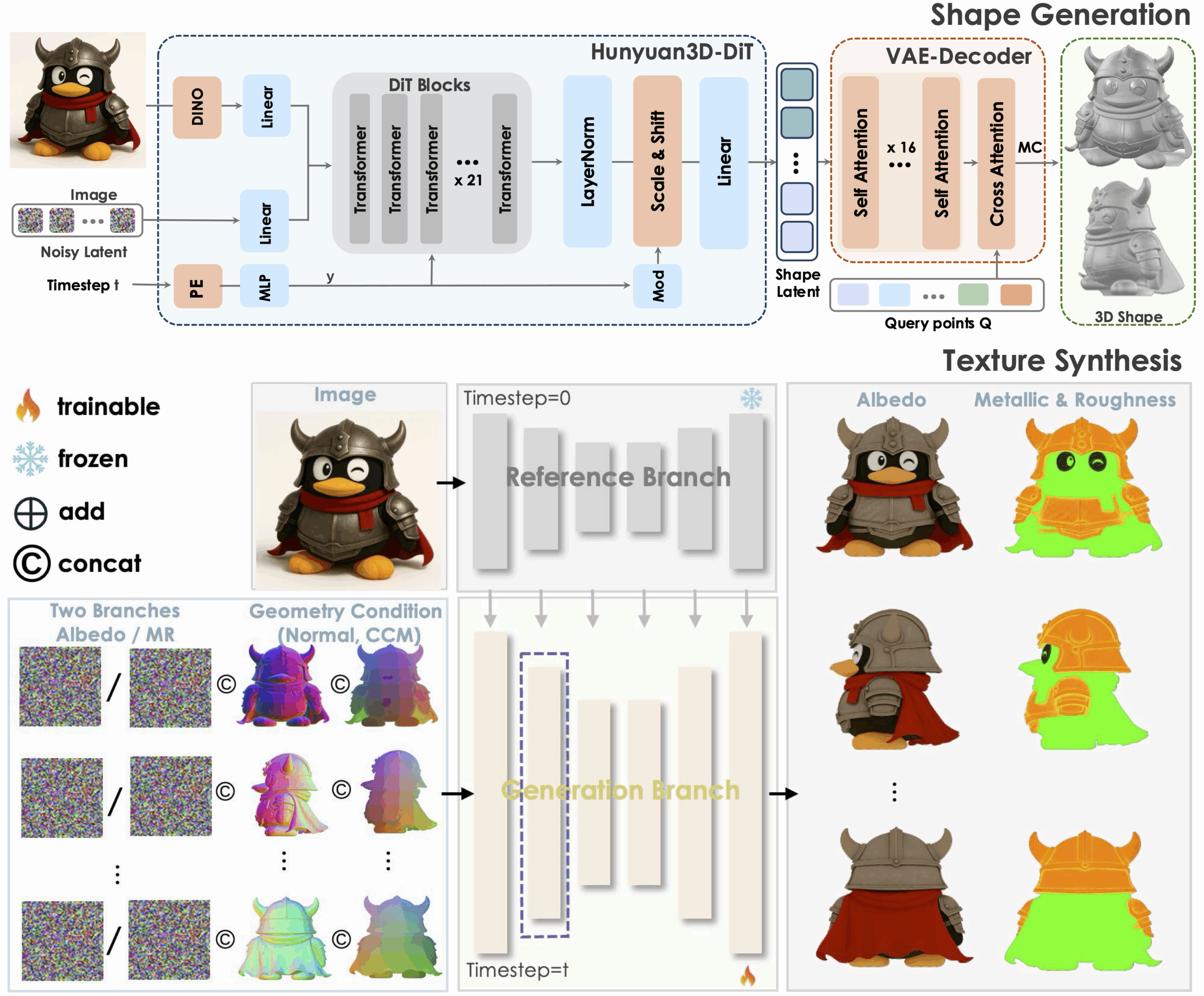

Tencent Hunyuan3D-2.1, released by the Tencent Hunyuan team on June 14, 2025, is an industrial-grade, open-source 3D generative model and a scalable 3D asset creation system. It advances 3D generation through two key innovations: a fully open-source framework and physically based rendering (PBR) texture synthesis.

It is the first material generation model to support PBR. Its material system is grounded in physical laws and covers diffuse reflection, metallic, and normal maps. This produces cinematic-quality lighting and shadow interactions on materials such as leather and bronze, meeting the production-grade precision that game assets and industrial design require.

The release fully open-sources the data processing pipeline, the training and inference code, the model weights, and the architecture. Community developers can therefore fine-tune the model for downstream tasks. Academic research gains a reproducible baseline, and industrial applications avoid duplicated development work.

The related paper, "Hunyuan3D 2.0: Scaling Diffusion Models for High Resolution Textured 3D Assets Generation", has been accepted by CVPR 2025.
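The sketch below makes the PBR terms above (diffuse, metallic) concrete. It shows how a generic metallic-roughness material, the convention used by glTF 2.0, splits a base color into a diffuse color and a specular reflectance F0, and it adds Schlick's Fresnel approximation. This is standard shading math, not code from Hunyuan3D-2.1. The function names are hypothetical, and roughness and normal maps are left out.

```python
# Generic metallic-roughness PBR sketch (glTF 2.0 convention); NOT Hunyuan3D code.
from typing import Tuple

RGB = Tuple[float, float, float]

DIELECTRIC_F0 = 0.04  # common reflectance at normal incidence for non-metals


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation between a and b by t in [0, 1]."""
    return a + (b - a) * t


def split_base_color(base_color: RGB, metallic: float) -> Tuple[RGB, RGB]:
    """Return (diffuse_color, f0) for a base color and a metallic value in [0, 1].

    Metals have no diffuse term and tint their specular reflection with the
    base color; dielectrics keep the base color as diffuse and use F0 = 0.04.
    """
    diffuse = tuple(lerp(c, 0.0, metallic) for c in base_color)
    f0 = tuple(lerp(DIELECTRIC_F0, c, metallic) for c in base_color)
    return diffuse, f0


def fresnel_schlick(f0: RGB, cos_theta: float) -> RGB:
    """Schlick's approximation of Fresnel reflectance, per color channel."""
    cos_theta = min(max(cos_theta, 0.0), 1.0)  # clamp to the valid range
    k = (1.0 - cos_theta) ** 5
    return tuple(f + (1.0 - f) * k for f in f0)
```

Take a bronze-like base color such as (0.8, 0.5, 0.2) with metallic = 1.0. The diffuse color becomes black and F0 equals the base color. A leather-like material with metallic = 0.0 keeps its base color as diffuse and reflects with F0 = 0.04.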

This tutorial runs on a single RTX A6000 GPU.

2. Results showcase

3. How to run

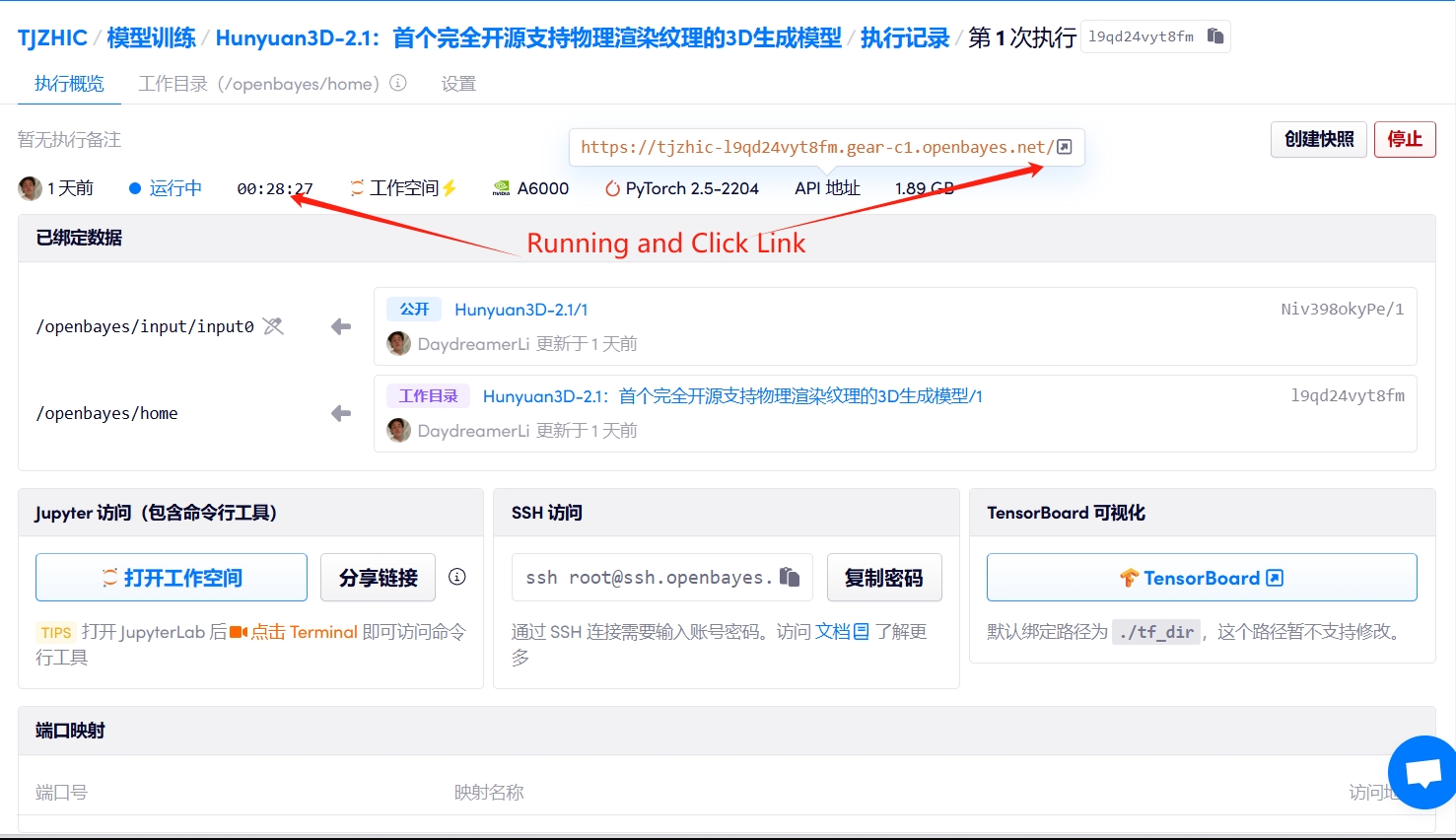

1. Start the container

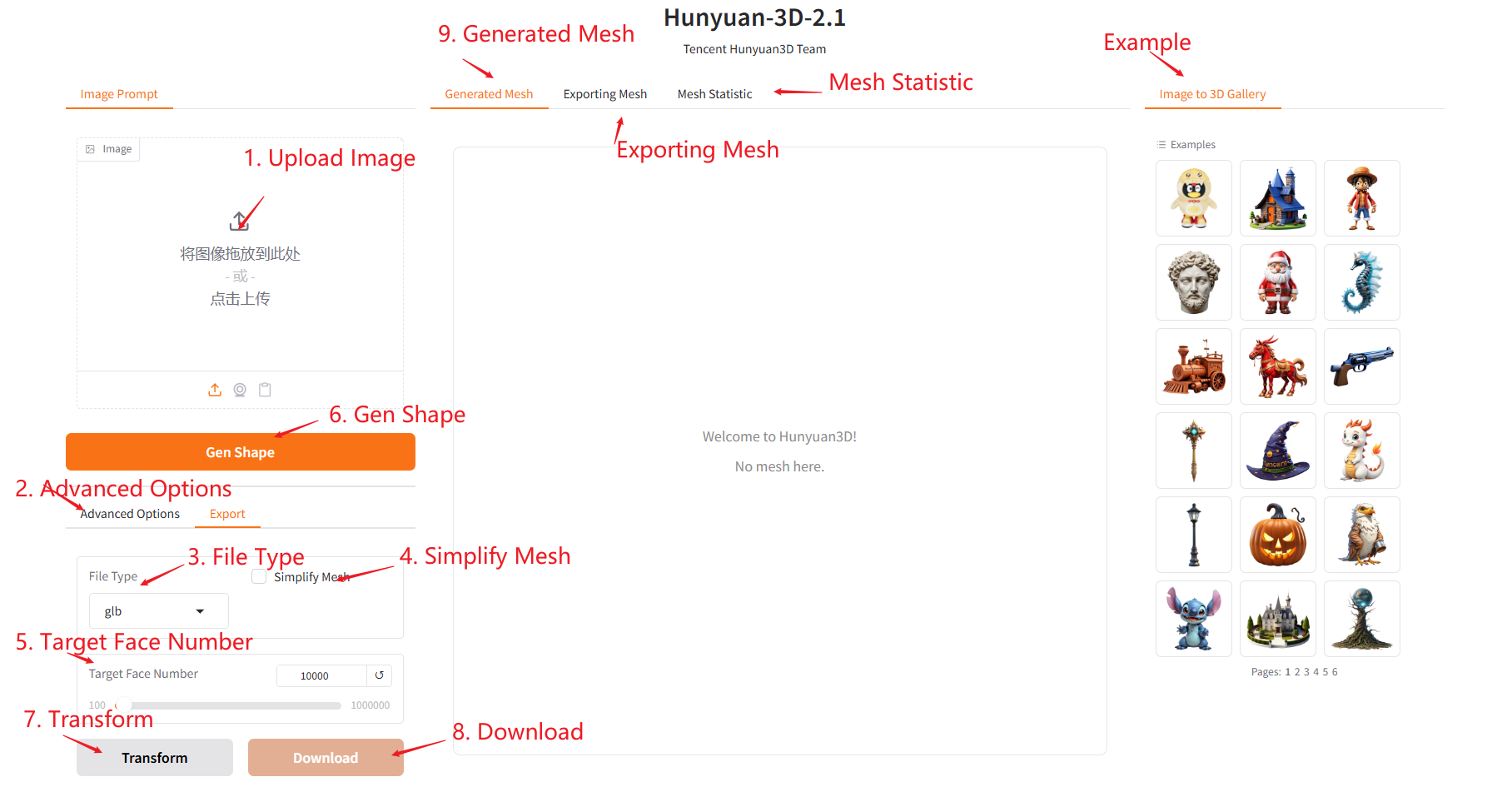

2. Use the model

If the page shows "Bad Gateway", the model is still initializing. The model is large, so wait about 3-4 minutes and then refresh the page.
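You can also poll the page from a script instead of refreshing by hand. This is a minimal sketch that uses only the Python standard library. The URL is a placeholder for your own container's address, and the timeout and interval values are assumptions rather than platform settings.

```python
# Hypothetical helper: poll the web UI until it stops answering "502 Bad Gateway".
import time
import urllib.error
import urllib.request
from typing import Callable


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code of a GET request, including error codes."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code  # e.g. 502 while the model is still loading
    except OSError:
        return 0  # connection refused, DNS failure or timeout: not ready yet


def wait_until_ready(
    probe: Callable[[], int],
    timeout_s: float = 300.0,  # assumed: a margin above the 3-4 minutes mentioned above
    interval_s: float = 15.0,  # assumed polling interval
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Call probe() until it returns 200 (True) or timeout_s has elapsed (False)."""
    waited = 0.0
    while True:
        if probe() == 200:
            return True
        if waited >= timeout_s:
            return False
        sleep(interval_s)
        waited += interval_s
```

For example, `wait_until_ready(lambda: http_status("https://<your-container-url>/"))` blocks until the interface answers with HTTP 200. The `probe` and `sleep` arguments are injectable, so the loop can be tested without a network connection.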

Parameters:

- Target Face Number: The maximum number of triangle faces in the generated 3D mesh.

- Remove Background: Whether to remove the background. When enabled, the image background (for example, a solid color or a cluttered scene) is detected and cleared automatically, keeping only the outline of the main object.

- Seed: The random seed that controls the randomness of generation. Reusing the same seed with the same input and settings should reproduce the same result.

- Inference Steps: The number of sampling iterations of the diffusion model. More steps take longer and can refine detail.

- Octree Resolution: The resolution of the octree grid that partitions 3D space when the mesh is extracted. Higher values capture finer detail but use more memory and time.

- Guidance Scale: How strongly the generated result is constrained to follow the input condition. Higher values follow the input more closely.

- Number of Chunks: Splits the large 3D decoding workload into smaller chunks that are processed piece by piece, avoiding GPU out-of-memory (OOM) errors.
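Seed, Inference Steps, and Guidance Scale all act on the diffusion sampling loop. The sketch below illustrates them with standard classifier-free guidance and a plain Euler integrator on a toy one-dimensional "model". The velocity functions are made-up stand-ins, not the Hunyuan3D network, and the real sampler and scheduler may differ.

```python
# Toy sampler: classifier-free guidance + Euler steps; NOT the Hunyuan3D pipeline.
import random
from typing import Callable

Velocity = Callable[[float, float], float]  # (x, t) -> predicted velocity


def cfg(v_uncond: float, v_cond: float, guidance_scale: float) -> float:
    """Classifier-free guidance: move the prediction away from the unconditional one."""
    return v_uncond + guidance_scale * (v_cond - v_uncond)


def sample(
    v_cond: Velocity,
    v_uncond: Velocity,
    num_inference_steps: int,
    guidance_scale: float,
    seed: int,
) -> float:
    """Integrate dx/dt = guided velocity from t = 0 (noise) to t = 1 with Euler steps."""
    rng = random.Random(seed)  # the seed fixes the starting noise
    x = rng.gauss(0.0, 1.0)
    dt = 1.0 / num_inference_steps  # more steps -> smaller, more accurate steps
    for i in range(num_inference_steps):
        t = i * dt
        v = cfg(v_uncond(x, t), v_cond(x, t), guidance_scale)
        x = x + dt * v
    return x


def toy_cond(x: float, t: float) -> float:
    """Hypothetical conditional velocity: pulls x toward 2.0."""
    return 2.0 - x


def toy_uncond(x: float, t: float) -> float:
    """Hypothetical unconditional velocity: pulls x toward 0.0."""
    return 0.0 - x
```

In this toy, `guidance_scale=1.0` reduces to the plain conditional prediction, and larger values push the result further toward the conditional target. Calling `sample` twice with the same seed and settings returns the same value.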

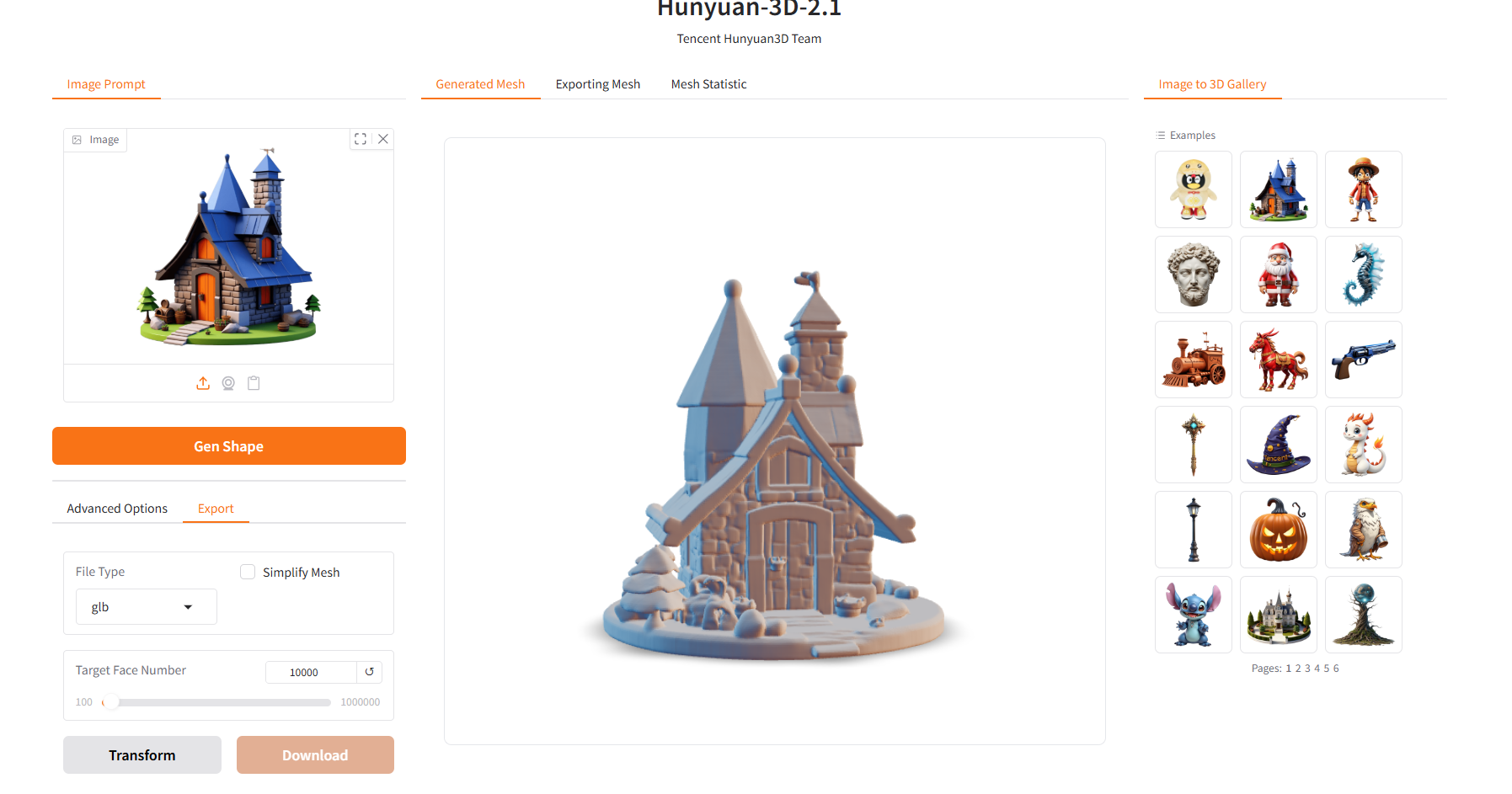

Result
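The Octree Resolution and Number of Chunks parameters both concern the step that turns the generated 3D representation into a mesh. In that step a decoder is queried at grid points and the surface is then extracted. The sketch below rests on two assumptions. First, it uses a dense N×N×N lattice, whereas a true octree subdivides only the cells near the surface. Second, it replaces the decoder with an analytic sphere distance function. Its purpose is to show why cost grows cubically with resolution and how fixed-size batches cap peak memory. The tutorial does not say whether the UI value is a batch size or a batch count, so `chunk_size` here is a hypothetical name.

```python
# Chunked evaluation of an implicit field on a grid; a stand-in, NOT Hunyuan3D code.
import math
from itertools import islice, product
from typing import Callable, Iterable, Iterator, List, Tuple

Point = Tuple[float, float, float]


def grid_points(resolution: int, bound: float = 1.0) -> Iterator[Point]:
    """Lazily yield the resolution^3 lattice points spanning [-bound, bound]^3."""
    if resolution < 2:
        raise ValueError("resolution must be at least 2")
    step = 2.0 * bound / (resolution - 1)
    axis = [-bound + i * step for i in range(resolution)]
    return product(axis, axis, axis)


def chunked(items: Iterable[Point], chunk_size: int) -> Iterator[List[Point]]:
    """Split an iterable into lists of at most chunk_size items."""
    if chunk_size < 1:
        raise ValueError("chunk_size must be positive")
    it = iter(items)
    while batch := list(islice(it, chunk_size)):
        yield batch


def sphere_sdf(p: Point, radius: float = 0.5) -> float:
    """Stand-in 'decoder': signed distance to a sphere (negative inside)."""
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - radius


def evaluate_grid(
    field: Callable[[Point], float], resolution: int, chunk_size: int
) -> Tuple[List[float], int]:
    """Evaluate field over the grid batch by batch; return the values and batch count."""
    values: List[float] = []
    batches = 0
    for batch in chunked(grid_points(resolution), chunk_size):
        # A real decoder would run one GPU forward pass per batch here.
        values.extend(field(p) for p in batch)
        batches += 1
    return values, batches
```

As a worked example, a resolution of 256 gives a dense grid of 256³ = 16,777,216 query points. At 8,000 points per batch, that is 2,098 decoder calls. Doubling the resolution to 512 multiplies the point count by 8, which is why higher resolutions rely on chunking to stay within GPU memory.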

4. Discussion

🖌️ If you come across a high-quality project, feel free to leave us a message recommending it! We have also set up a tutorial discussion group. Scan the QR code and add the note [SD Tutorial] to join, discuss technical issues, and share your results.

Citation Information

The citation information for this project is as follows:

@misc{hunyuan3d22025tencent,
  title={Hunyuan3D 2.0: Scaling Diffusion Models for High Resolution Textured 3D Assets Generation},
  author={Tencent Hunyuan3D Team},
  year={2025},
  eprint={2501.12202},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

@misc{yang2024hunyuan3d,
  title={Hunyuan3D 1.0: A Unified Framework for Text-to-3D and Image-to-3D Generation},
  author={Tencent Hunyuan3D Team},
  year={2024},
  eprint={2411.02293},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}
