RFUAV: A Benchmark Dataset for Unmanned Aerial Vehicle Detection and Identification

Rui Shi Xiaodong Yu Shengming Wang Yijia Zhang Lu Xu Peng Pan Chunlai Ma

Abstract

In this paper, we propose RFUAV as a new benchmark dataset for radio-frequency based (RF-based) unmanned aerial vehicle (UAV) identification and address the following challenges: Firstly, many existing datasets feature a restricted variety of drone types and insufficient volumes of raw data, which fail to meet the demands of practical applications. Secondly, existing datasets often lack raw data covering a broad range of signal-to-noise ratios (SNR), or do not provide tools for transforming raw data to different SNR levels. This limitation undermines the validity of model training and evaluation. Lastly, many existing datasets do not offer open-access evaluation tools, leading to a lack of unified evaluation standards in current research within this field. RFUAV comprises approximately 1.3 TB of raw frequency data collected from 37 distinct UAVs using the Universal Software Radio Peripheral (USRP) device in real-world environments. Through in-depth analysis of the RF data in RFUAV, we define a drone feature sequence called RF drone fingerprint, which aids in distinguishing drone signals. In addition to the dataset, RFUAV provides a baseline preprocessing method and model evaluation tools. Rigorous experiments demonstrate that these preprocessing methods achieve state-of-the-art (SOTA) performance using the provided evaluation tools. The RFUAV dataset and baseline implementation are publicly available at https://github.com/kitoweeknd/RFUAV/.

One-sentence Summary

The authors from Zhejiang Sci-Tech University, Hangzhou Dianzi University, and the National University of Defense Technology introduce RFUAV, a 1.3 TB benchmark dataset comprising raw RF signals from 37 UAV types collected via USRP devices, which enables the identification of drone-specific RF fingerprints through spectrogram-based deep learning; their two-stage model, leveraging ViT and YOLO with optimized STFT and "Hot" color mapping, achieves state-of-the-art performance in low-SNR conditions, advancing robust UAV detection for real-world security applications.

Key Contributions

- This paper introduces RFUAV, a comprehensive benchmark dataset for unmanned aerial vehicle (UAV) detection and identification, collected from civilian drones operating in industrial, scientific, and medical (ISM) bands (985 MHz, 2.4 GHz, 5.8 GHz), leveraging the continuous RF communication between drones and their controllers to establish unique radio-frequency fingerprints.

- The authors propose a two-stage deep learning framework that first uses YOLO for detecting drone signals in spectrograms generated via Short-Time Fourier Transform (STFT), followed by a ResNet-based classifier to identify drone types, with an efficient dual-buffer queue and FFT-based preprocessing enabling real-time, high-accuracy detection.

- Experiments on RFUAV demonstrate the model’s robustness across varying signal-to-noise ratios (SNR from -20 to 20 dB), with performance evaluated using spectrograms derived from STFT with varying transform points (32 to 1024), and the dataset is designed for extensibility, supporting integration with other datasets like DroneRFa and DroneRF to cover over 54 drone models.

Introduction

The authors address the security and privacy concerns raised by the growing deployment of unmanned aerial vehicles (UAVs), and of intelligent autonomous systems generally, in smart cities. Among existing detection methods (vision, acoustic, radar, and RF-based), RF-based detection stands out for its robustness and cost-effectiveness, which makes it well suited to real-world applications. Prior approaches, however, lose accuracy under low signal-to-noise ratio (SNR) conditions and lack comprehensive coverage of diverse commercial drone models. The authors’ main contribution is RFUAV, a dataset and deep learning framework that enables high-accuracy UAV detection through signal preprocessing and model architecture design. By integrating data from other sources such as DroneRFa and DroneRF, RFUAV expands coverage to over 54 drone types, broadening the range of drones represented. The authors also plan ongoing updates to incorporate new drone models, positioning RFUAV as an evolving resource for RF-based detection and for deep learning in the radio-frequency domain.

Dataset

- The RFUAV dataset comprises approximately 1.3 TB of raw radio frequency (RF) data collected from 37 distinct UAV models using Universal Software Radio Peripheral (USRP) X310 devices in real-world environments.

- Data was captured under high signal-to-noise ratio (SNR) conditions, with each drone type contributing a minimum of 15 GB of raw IQ samples, and some models (e.g., DJI FPV COMBO, DJI MINI 3) including multiple bandwidth configurations to ensure comprehensive coverage.

- Raw data is stored in interleaved IQ format with 32-bit floating-point precision, and acquisition parameters are documented in accompanying XML files for full reproducibility.

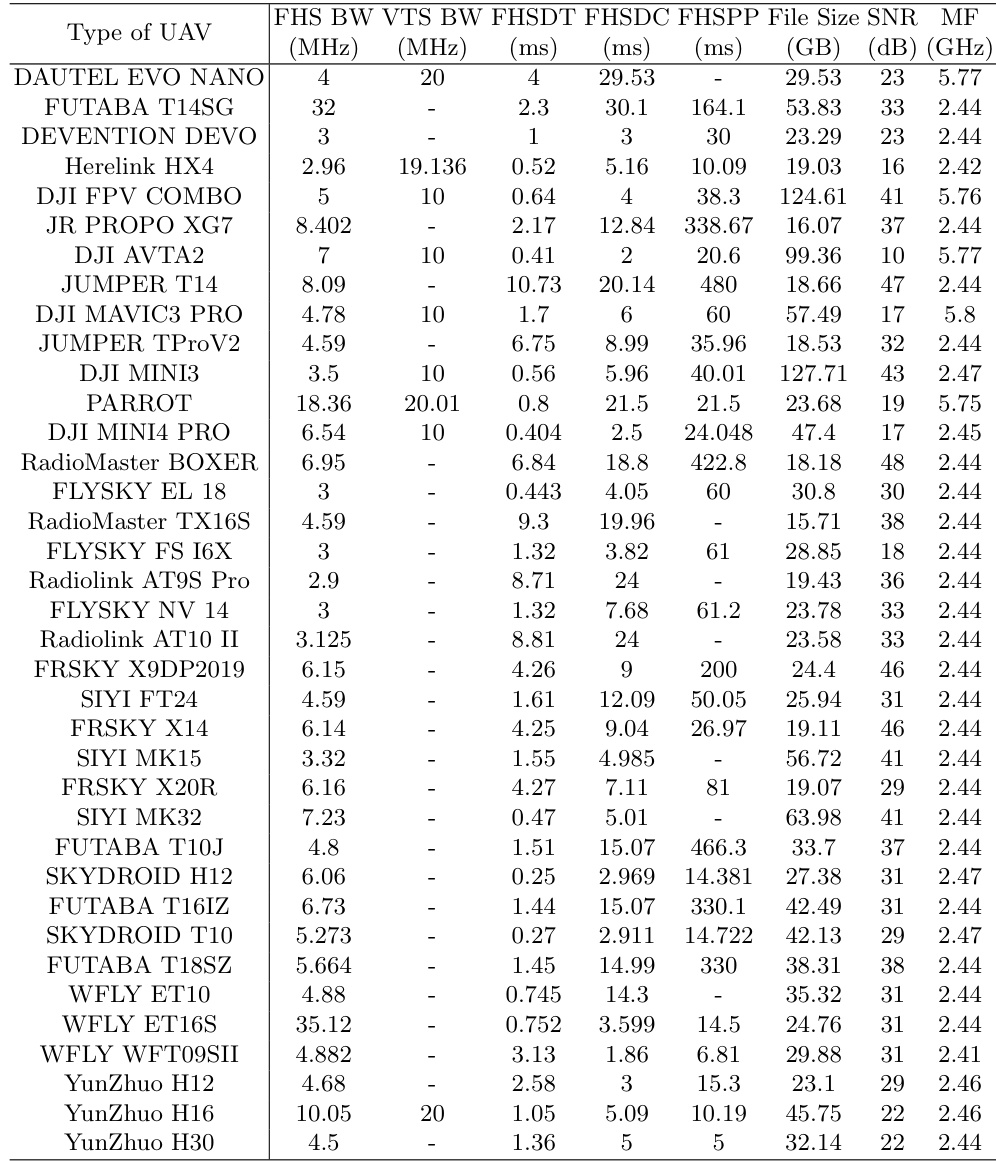

- The dataset includes both frequency-hopping spread spectrum (FHSS) signals used for remote control and fixed-bandwidth signals for video transmission, with detailed metadata on key signal characteristics such as hopping bandwidth (FHSBW), duration (FHSDT), duty cycle (FHSDC), periodicity (FHSPP), and video transmission bandwidth (VTSBW).

- A unique RF drone fingerprint is derived from these signal features, serving as a discriminative input for deep learning models to identify drone types.

- The authors use the dataset to train and evaluate a two-stage model for drone detection and identification, using architectures such as ViT, Swin Transformer, YOLO, and ResNet.

- Training employs a mixture of data from all 37 drone types, with balanced sampling across operational phases—including pairing and flight—to ensure diverse signal representations.

- A preprocessing pipeline is provided that includes SNR estimation, 3D spectrogram generation, and signal decomposition, enabling transformation of raw data to different SNR levels for robust model evaluation.

- The dataset supports real-time playback and quality verification via a GNU Radio-based signal playback module connected to a spectrum analyzer, ensuring signal integrity.

- The authors implement a systematic data collection workflow involving manual signal discovery using spectrograms, dynamic center frequency tuning, and adaptive gain control to maintain high SNR across all recordings.

- For drones with variable video transmission bandwidths (e.g., certain DJI models), multiple acquisition sessions are conducted to capture all possible configurations, ensuring full coverage of operational scenarios.

- The dataset is publicly available with full preprocessing code and evaluation tools on GitHub, enabling standardized benchmarking and reproducible research in RF-based drone detection.
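The bullets above describe raw interleaved float32 IQ files and a tool for transforming recordings to different SNR levels. The following is a minimal sketch of both. It assumes little-endian float32 I/Q pairs, and it defines the target SNR as measured signal power over added noise power. The function names, file handling, and white-Gaussian noise model are assumptions, not the RFUAV toolkit's API. Because the recordings already contain receiver noise, the effective SNR ends up slightly below the target.

```python
import numpy as np


def iq_from_float32(raw):
    """Convert interleaved float32 I/Q values (I0, Q0, I1, Q1, ...) to complex64."""
    raw = np.asarray(raw, dtype=np.float32)
    raw = raw[: raw.size - raw.size % 2]  # ignore a dangling I value from a cut-off file
    return (raw[0::2] + 1j * raw[1::2]).astype(np.complex64)


def load_iq(path, max_samples=-1):
    """Read up to max_samples complex samples from a raw IQ file (hypothetical helper)."""
    count = -1 if max_samples < 0 else 2 * max_samples  # two floats per complex sample
    return iq_from_float32(np.fromfile(path, dtype="<f4", count=count))


def add_awgn(x, target_snr_db, rng=None):
    """Add circular complex white Gaussian noise at target_snr_db below the signal power."""
    rng = np.random.default_rng() if rng is None else rng
    p_signal = np.mean(np.abs(x) ** 2)
    p_noise = p_signal / (10.0 ** (target_snr_db / 10.0))
    # Split the noise power equally between the I and Q components.
    noise = np.sqrt(p_noise / 2.0) * (
        rng.standard_normal(x.shape) + 1j * rng.standard_normal(x.shape)
    )
    return (x + noise).astype(np.complex64)
```

For example, `add_awgn(load_iq("capture.iq"), -10.0)` would produce a -10 dB version of a recording; the file name is a placeholder. Measuring `p_signal` over the detected signal clip only, rather than the whole file, gives a more faithful SNR when the recording has long idle gaps.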

Method

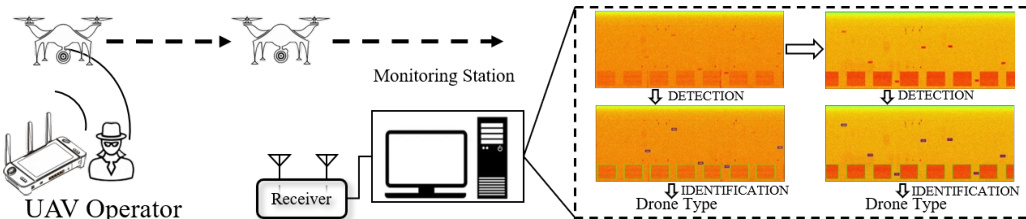

The authors leverage a two-stage deep learning framework for RF-based drone detection and identification, designed to exploit the continuous communication between drones and their operators. The system operates by first capturing raw radio frequency (RF) signals within the industrial, scientific, and medical (ISM) bands—specifically 985 MHz, 2.4 GHz, and 5.8 GHz—using a dedicated receiver. These signals are then processed to generate spectrograms, which serve as the primary input for the detection and classification models. The transformation from raw time-domain signals to spectrograms is achieved through the Short-Time Fourier Transform (STFT), a method that visualizes the energy distribution of the signal across time and frequency. This representation enables the extraction of spectral features that are characteristic of different drone types.
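The following is a minimal NumPy sketch of the STFT spectrogram step. It assumes complex baseband IQ input, a Hann window, and 50% overlap; the window and hop that RFUAV actually uses are not stated here. `n_fft` plays the role of the STFT-points (STFTP) parameter studied in the experiments, and each frequency bin spans fs / n_fft Hz.

```python
import numpy as np


def stft_spectrogram_db(x, n_fft=256, hop=None):
    """Return a (frequency, time) power spectrogram in dB for complex baseband IQ."""
    hop = n_fft // 2 if hop is None else hop
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    # Gather overlapping frames: row t holds x[t*hop : t*hop + n_fft].
    idx = np.arange(n_fft)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = x[idx] * window
    # FFT each frame and move DC to the middle so negative frequencies come first.
    spec = np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)
    power = np.abs(spec) ** 2
    return 10.0 * np.log10(power.T + 1e-12)  # frequency on rows, time on columns
```

A larger `n_fft` narrows each bin but lengthens each frame, which is the time/frequency trade-off examined in the STFTP experiments.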

Refer to the framework diagram to understand the overall workflow. The process begins with signal acquisition at a monitoring station, where a receiver captures the RF emissions from a UAV and its operator. The collected data is then preprocessed to generate spectrograms, which are fed into a two-stage model. The first stage employs an object detection network, specifically YOLO, to identify the presence of drone signals within the spectrogram by detecting signal targets. This stage outputs bounding boxes around regions containing drone signals. The regions of interest identified by the first stage are then passed to the second stage, which uses an image classification network, ResNet, to classify the detected signals into specific drone types. This modular design allows for efficient and accurate detection and identification, leveraging the strengths of both object detection and image classification architectures.
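The control flow of the two-stage design can be sketched independently of any particular network. Here `detect` stands in for the YOLO stage and `classify` for the ResNet stage. Both are hypothetical callables, and the box convention (frequency rows, time columns, exclusive upper bounds) is an assumption. In practice, each crop would be resized to the classifier's input size before inference.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

import numpy as np

Box = Tuple[int, int, int, int]  # (f0, t0, f1, t1): hypothetical row/column bounds


@dataclass
class Detection:
    box: Box
    label: str


def two_stage_identify(
    spec: np.ndarray,
    detect: Callable[[np.ndarray], List[Box]],
    classify: Callable[[np.ndarray], str],
) -> List[Detection]:
    """Stage 1 proposes signal regions; stage 2 labels each cropped region."""
    results = []
    for f0, t0, f1, t1 in detect(spec):
        crop = spec[f0:f1, t0:t1]
        if crop.size == 0:  # skip degenerate boxes
            continue
        results.append(Detection((f0, t0, f1, t1), classify(crop)))
    return results
```

Keeping the two stages as separate callables mirrors the modular design described above: either network can be swapped without touching the cropping logic.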

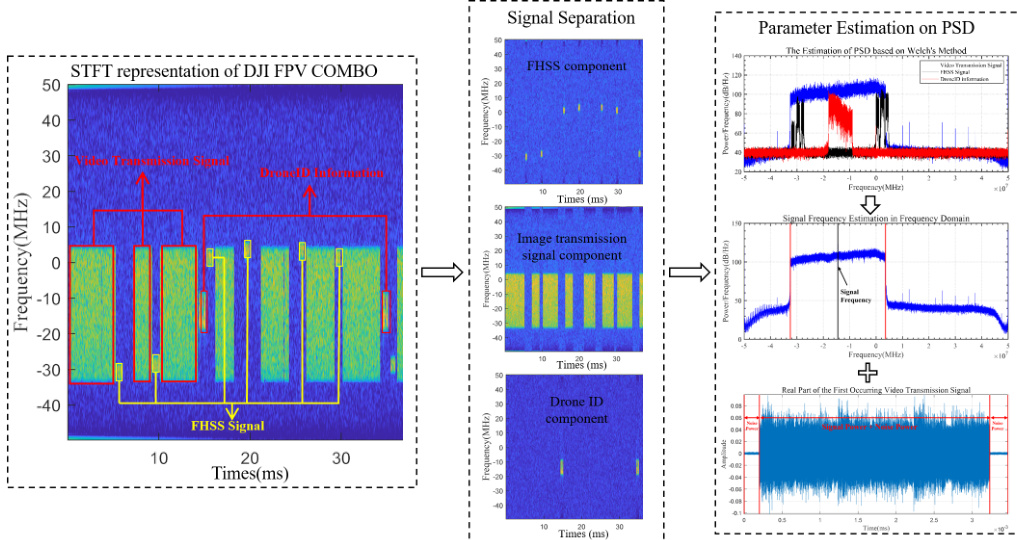

As shown in the figure above, the system also supports signal separation and parameter estimation. The process begins with the STFT representation of a raw RF signal, such as the DJI FPV COMBO signal, which reveals distinct components in the time-frequency domain: a downlink video transmission signal modulated with orthogonal frequency division multiplexing (OFDM), an uplink control signal using frequency-hopping spread spectrum (FHSS), and a data frame carrying DroneID information. Although these signals overlap in frequency, they are separated in time, which allows them to be isolated. A dual-sliding-window detection algorithm is applied in the time domain to locate the start and end of each signal clip. Once the clips are separated, Welch's method is used to estimate the power spectral density (PSD) of each component. The center frequency of a signal is found by sliding a window across the PSD and selecting the position at which the energy inside the window is maximized. Finally, the signal-to-noise ratio (SNR) is estimated in the time domain by measuring the signal power and the noise power separately and applying the standard SNR formula, SNR = 10 log10(P_signal / P_noise). Together, these steps characterize the RF properties of each captured signal and yield its key parameters.
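The separation and estimation steps described above can be sketched in NumPy as follows. This is an illustrative reconstruction, not the RFUAV code. The window length, Hann window, 50% overlap, PSD normalization, and the noise-subtracted SNR formula are all assumptions, and the edge detector handles only a single burst. For production use, `scipy.signal.welch` provides a full Welch implementation.

```python
import numpy as np


def dual_window_edges(x, win):
    """Locate the start and (exclusive) end of one burst with two adjacent energy windows."""
    c = np.concatenate(([0.0], np.cumsum(np.abs(x) ** 2)))
    n = np.arange(win, len(x) - win + 1)
    lag = c[n] - c[n - win]   # energy of x[n-win:n]
    lead = c[n + win] - c[n]  # energy of x[n:n+win]
    start = int(n[np.argmax(lead / (lag + 1e-12))])  # quiet -> loud transition
    end = int(n[np.argmax(lag / (lead + 1e-12))])    # loud -> quiet transition
    return start, end


def welch_psd(x, fs, nperseg=256):
    """Welch PSD for complex IQ: average of Hann-windowed periodograms, 50% overlap."""
    w = np.hanning(nperseg)
    hop = nperseg // 2
    segs = np.stack([x[s:s + nperseg] * w for s in range(0, len(x) - nperseg + 1, hop)])
    psd = np.mean(np.abs(np.fft.fft(segs, axis=1)) ** 2, axis=0) / (fs * np.sum(w ** 2))
    freqs = np.fft.fftshift(np.fft.fftfreq(nperseg, d=1.0 / fs))
    return freqs, np.fft.fftshift(psd)  # frequencies in ascending order


def center_frequency(freqs, psd, bw_bins):
    """Slide a bw_bins-wide window over the PSD; return the centre of the max-energy window."""
    energy = np.convolve(psd, np.ones(bw_bins), mode="valid")  # energy[i] = sum(psd[i:i+bw])
    i = int(np.argmax(energy))
    df = freqs[1] - freqs[0]
    return freqs[i] + 0.5 * (bw_bins - 1) * df


def estimate_snr_db(signal_clip, noise_clip):
    """SNR from separately measured powers; noise power is subtracted from the clip power."""
    p_total = np.mean(np.abs(signal_clip) ** 2)
    p_noise = np.mean(np.abs(noise_clip) ** 2)
    return 10.0 * np.log10(max(p_total - p_noise, 1e-12) / p_noise)
```

Subtracting the noise power assumes the signal clip contains signal plus noise; if the clip power is used directly, the estimate is biased upward at low SNR.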

Experiment

- Conducted experiments to evaluate the impact of STFT points (STFTP) and color map (CMAP) methods on UAV identification accuracy under varying SNRs.

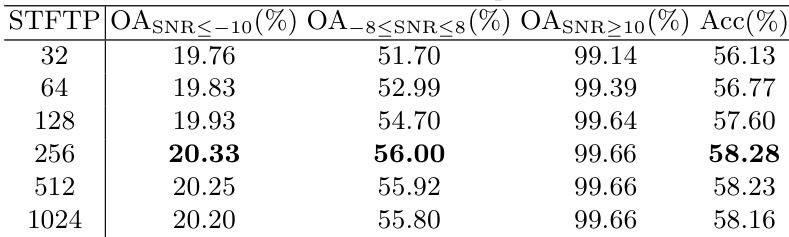

- Found that increasing STFTP improves frequency resolution, with STFTP = 512 (195.3125 kHz resolution) enabling clear separation of image transmission and FHSS signals, while excessively high STFTP (e.g., 65536, 524288) degrades time resolution, blurring temporal features.

- Identified optimal STFTP around 256–512 for balancing time and frequency resolution, avoiding both loss of detail and signal merging.

- Evaluated four CMAP methods (Autumn, Hot, HSV, Parula) across SNR levels from 20 dB to -20 dB; "HSV" provided the highest contrast, followed by "Hot", "Autumn", and "Parula".

- On the DJI FPV COMBO dataset, the "HSV" CMAP achieved the best visual distinction of drone signals at low SNR, with clear signal boundaries down to -10 dB, while "Parula" showed the weakest contrast and poorest generalization.

- Demonstrated that spectrograms from different UAVs exhibit distinct visual patterns, enabling effective identification even under noisy conditions.
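The 195.3125 kHz figure quoted for STFTP = 512 implies a sample rate of 512 × 195.3125 kHz = 100 MHz. This is an inference from the numbers above, not a stated parameter. Under that assumption, the time/frequency trade-off for each STFTP value can be tabulated:

```python
FS = 100e6  # inferred sample rate: 512 points x 195.3125 kHz = 100 MHz


def stft_resolution(n_points, fs=FS):
    """Return (frequency bin width in Hz, frame duration in s) for an n_points FFT."""
    return fs / n_points, n_points / fs


for n in (32, 256, 512, 1024, 65536, 524288):
    df, dt = stft_resolution(n)
    print(f"STFTP={n:>6}: bin = {df / 1e3:12.4f} kHz, frame = {dt * 1e6:9.2f} us")
```

At 512 points, a bin is 195.3 kHz wide and a frame lasts 5.12 µs. At 524288 points, bins shrink to about 190.7 Hz, but each frame spans about 5.24 ms, so any time structure shorter than that is averaged into a single column.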

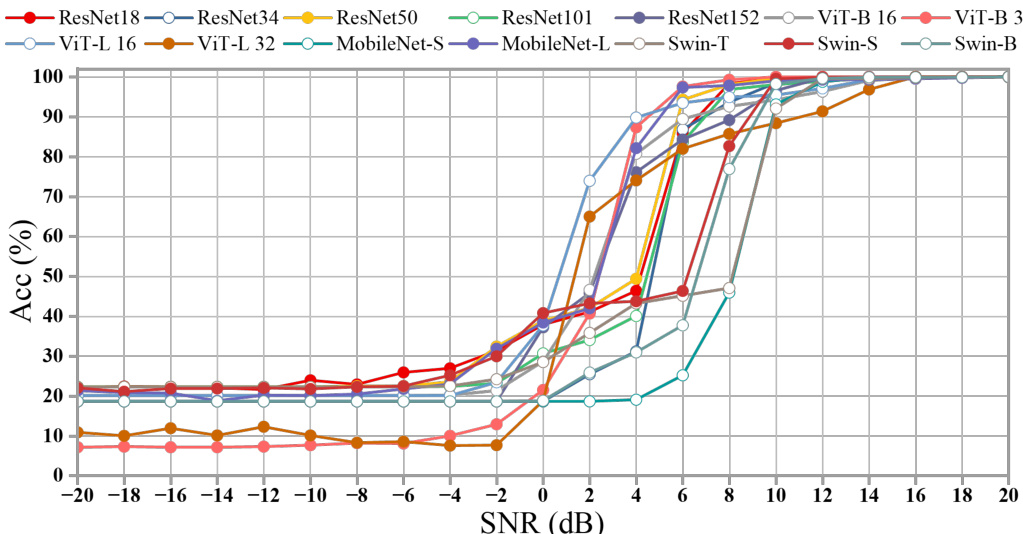

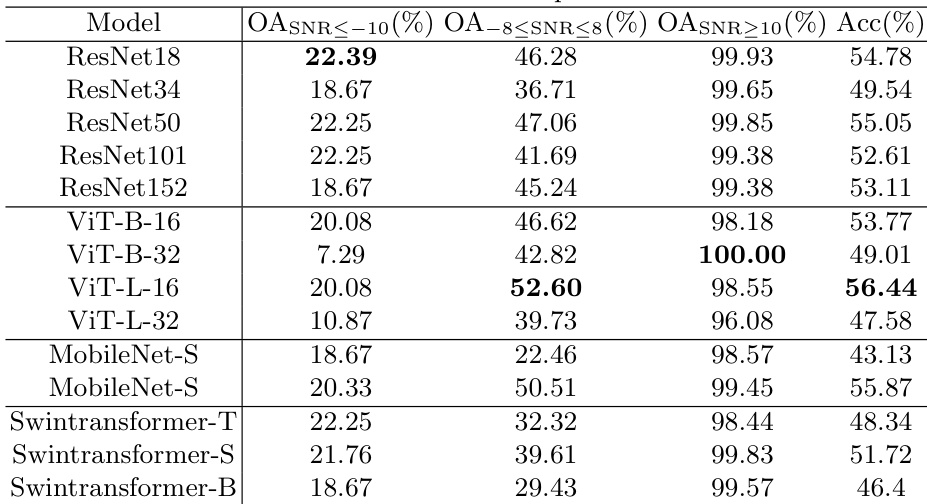

The authors use a range of deep learning models to evaluate their performance on UAV identification tasks under varying signal-to-noise ratios (SNRs). Results show that model accuracy increases with SNR, with ResNet and ViT variants achieving over 90% accuracy at SNR levels above 8 dB, while MobileNet and Swin models exhibit lower performance across all SNR conditions.

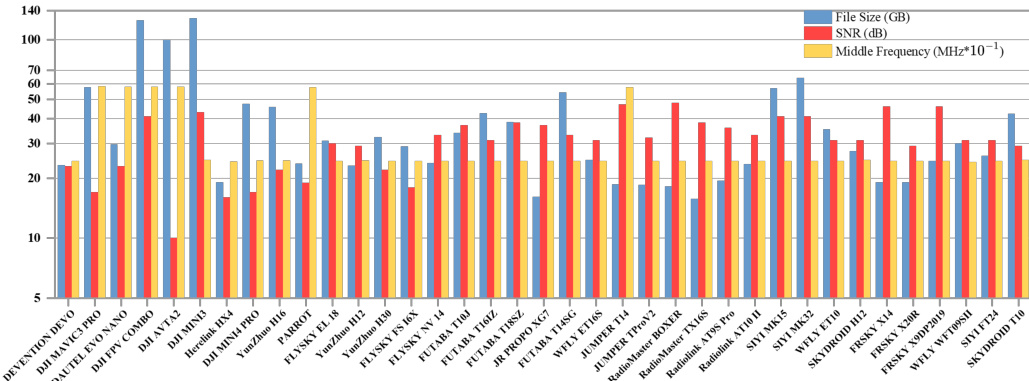

The authors use a bar chart to compare the file size, SNR, and middle (center) frequency of the recordings for each UAV. The DJI MINI3 and DJI AVATA2 have the largest file sizes and also show higher SNR and middle-frequency values than most other models, while SNR and middle frequency vary considerably across the remaining UAVs.

The authors evaluate different deep learning models on UAV identification under varying SNR conditions. ViT-L-32 achieves the highest overall accuracy (OA), 56.44%, and a perfect OA of 100.00% at SNR ≥ 10 dB. ResNet18 performs best at low SNR (≤ -10 dB), with an OA of 22.39%, while ResNet34 and ResNet50 perform similarly across all SNR levels, indicating that model architecture significantly influences robustness to noise.
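The OA figures above are reported for all samples and for high- and low-SNR subsets. The following small helper computes these three numbers from per-sample predictions. It assumes OA is the percentage of correctly classified samples and uses the 10 dB and -10 dB thresholds quoted above. The function name and dictionary keys are hypothetical, not part of the RFUAV evaluation tools.

```python
import numpy as np


def accuracy_by_snr(y_true, y_pred, snr_db, high=10.0, low=-10.0):
    """Overall accuracy (OA, %) for all samples and for high- and low-SNR subsets."""
    y_true, y_pred, snr_db = map(np.asarray, (y_true, y_pred, snr_db))
    correct = y_true == y_pred

    def oa(mask):
        # Percentage of correct predictions inside the mask; NaN if the subset is empty.
        return float(correct[mask].mean() * 100) if mask.any() else float("nan")

    return {
        "OA": oa(np.ones_like(correct, dtype=bool)),
        f"OA(SNR>={high:g} dB)": oa(snr_db >= high),
        f"OA(SNR<={low:g} dB)": oa(snr_db <= low),
    }
```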

The authors use different STFTP values to analyze their impact on spectrogram quality and deep learning model accuracy for UAV identification. Results show that increasing STFTP improves frequency resolution and signal clarity, with 256 providing the best overall accuracy across all SNR conditions, while higher values like 1024 degrade performance due to reduced time resolution.

The authors use a table to present the performance metrics of various UAVs under different signal processing parameters, including frequency bandwidth, time delay, and file size. Results show that the choice of color map and signal-to-noise ratio significantly affects the visibility and distinguishability of UAV signals in spectrograms, with the "HSV" method providing the highest contrast and thus the clearest signal representation.
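The CMAP choice enters when a dB spectrogram is turned into a color image. As an illustration only, the sketch below uses a piecewise-linear approximation of the "Hot" map (black to red to yellow to white) with an assumed 60 dB display range. It is not MATLAB's exact color table. HSV, Autumn, and Parula would substitute different RGB ramps at the same step.

```python
import numpy as np


def spectrogram_to_hot_rgb(spec_db, dynamic_range_db=60.0):
    """Map a dB spectrogram to uint8 RGB with a piecewise-linear 'hot'-style colormap."""
    top = spec_db.max()
    # Normalize to [0, 1]: anything more than dynamic_range_db below the peak becomes 0.
    v = np.clip((spec_db - (top - dynamic_range_db)) / dynamic_range_db, 0.0, 1.0)
    r = np.clip(3.0 * v, 0.0, 1.0)        # black -> red over the first third
    g = np.clip(3.0 * v - 1.0, 0.0, 1.0)  # red -> yellow over the second third
    b = np.clip(3.0 * v - 2.0, 0.0, 1.0)  # yellow -> white over the last third
    return np.round(np.stack([r, g, b], axis=-1) * 255).astype(np.uint8)
```

The display range matters as much as the ramp: at low SNR, a narrow range pushes the noise floor to black and keeps more of the ramp for the signal, which is one way a map can improve visible contrast.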