Memory in the Age of AI Agents

Abstract

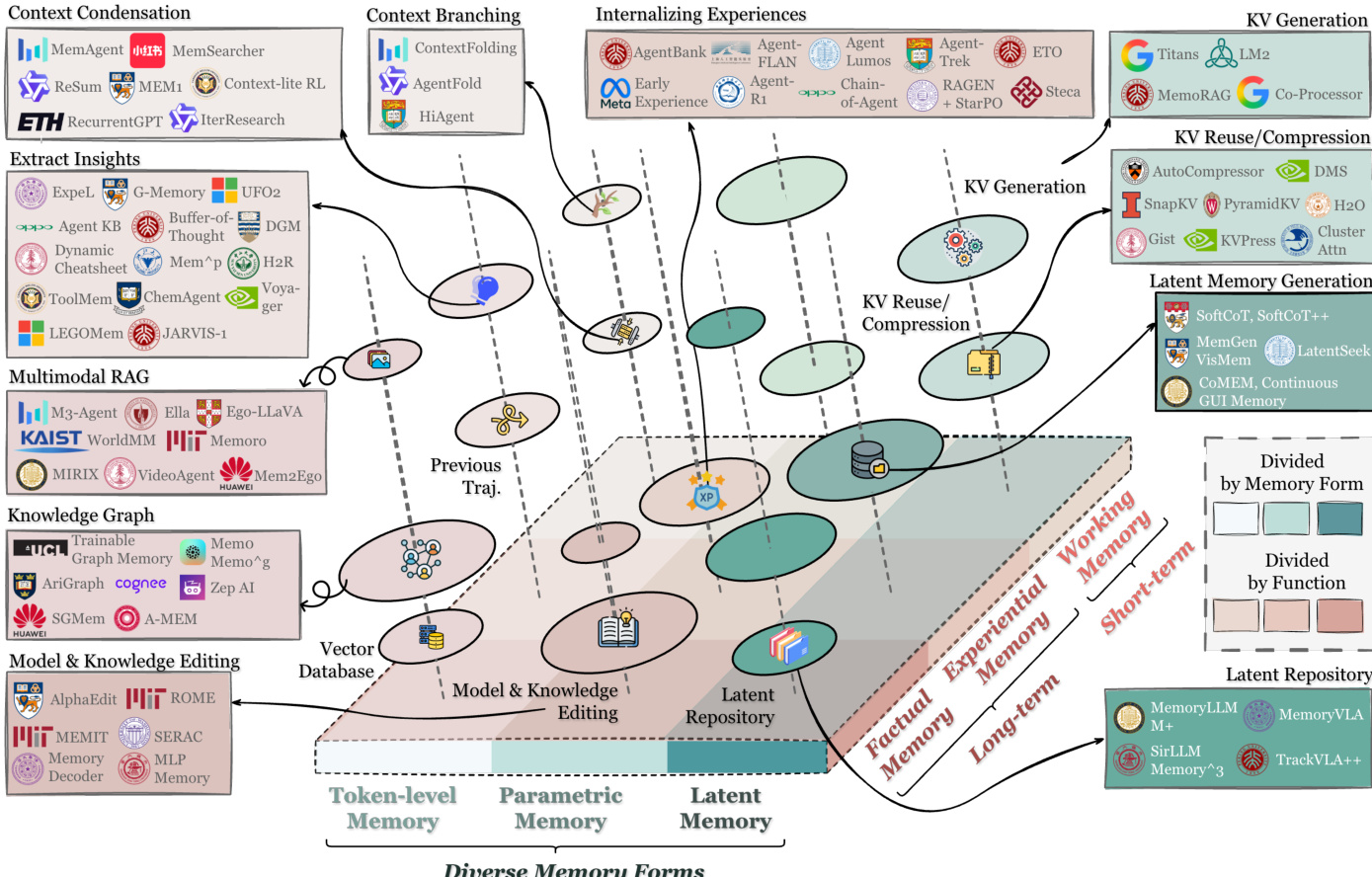

Memory has emerged as, and will continue to remain, a core capability of foundation model-based agents. As research on agent memory rapidly expands and attracts unprecedented attention, the field has also become increasingly fragmented. Existing works that fall under the umbrella of agent memory often differ substantially in their motivations, implementations, and evaluation protocols, while the proliferation of loosely defined memory terminologies has further obscured conceptual clarity. Traditional taxonomies such as long/short-term memory have proven insufficient to capture the diversity of contemporary agent memory systems. This work aims to provide an up-to-date landscape of current agent memory research. We begin by clearly delineating the scope of agent memory and distinguishing it from related concepts such as LLM memory, retrieval-augmented generation (RAG), and context engineering. We then examine agent memory through the unified lenses of forms, functions, and dynamics. From the perspective of forms, we identify three dominant realizations of agent memory, namely token-level, parametric, and latent memory. From the perspective of functions, we propose a finer-grained taxonomy that distinguishes factual, experiential, and working memory. From the perspective of dynamics, we analyze how memory is formed, evolved, and retrieved over time. To support practical development, we compile a comprehensive summary of memory benchmarks and open-source frameworks. Beyond consolidation, we articulate a forward-looking perspective on emerging research frontiers, including memory automation, reinforcement learning integration, multimodal memory, multi-agent memory, and trustworthiness issues. We hope this survey serves not only as a reference for existing work, but also as a conceptual foundation for rethinking memory as a first-class primitive in the design of future agentic intelligence.

One-sentence Summary

Researchers from National University of Singapore, Renmin University of China, Fudan University, Peking University, and collaborating institutions present a comprehensive "forms-functions-dynamics" taxonomy for agent memory, identifying token-level/parametric/latent memory forms, factual/experiential/working memory functions, and formation/evolution/retrieval dynamics to advance persistent, adaptive capabilities in LLM-based agents beyond traditional long/short-term memory distinctions.

Key Contributions

- The paper addresses the growing fragmentation in AI agent memory research, where inconsistent terminology and outdated taxonomies like long/short-term memory fail to capture the diversity of modern systems, hindering conceptual clarity and progress.

- It introduces a unified three-dimensional taxonomy organizing agent memory by forms (token-level, parametric, latent), functions (factual, experiential, working memory), and dynamics (formation, evolution, retrieval), moving beyond coarse temporal categorizations.

- To support practical development, the survey compiles representative benchmarks and open-source memory frameworks, maps existing systems onto the taxonomy in Figure 1, and identifies emerging frontiers such as multimodal memory and reinforcement learning integration.

Introduction

The authors highlight that memory has become a cornerstone capability of foundation model-based AI agents, enabling long-horizon reasoning, continual adaptation, and effective interaction with complex environments. As agents evolve from static language models into interactive systems for applications such as personalized chatbots, recommender systems, and financial investigations, robust memory mechanisms are needed to turn fixed-parameter models into adaptive systems that learn from their interactions with the environment. Prior work, however, is fragmented: terminology is inconsistent, implementations diverge, and traditional taxonomies such as the long/short-term distinction fail to capture the complexity of contemporary systems, while overlapping concepts such as LLM memory, RAG, and context engineering blur conceptual boundaries. To address these challenges, the authors establish a comprehensive "forms-functions-dynamics" framework that categorizes memory by three architectural forms (token-level, parametric, and latent), three functional roles (factual, experiential, and working memory), and the operational dynamics of memory formation, evolution, and retrieval. This unified taxonomy clarifies conceptual boundaries, reconciles fragmented research, and provides a structured analysis of benchmarks, frameworks, and emerging frontiers, including reinforcement learning integration, multimodal memory, and trustworthy memory systems.

Dataset

The authors survey two primary categories of evaluation benchmarks for assessing LLM agent memory and long-term capabilities:

- Memory/Lifelong/Self-Evolving Agent Benchmarks

  - Composition: Benchmarks explicitly designed for memory retention, lifelong learning, or self-improvement (e.g., MemBench, LoCoMo, LongMemEval).

  - Key details:

    - They focus on factual/experiential memory, multimodal inputs, and simulated or real environments.

    - Sizes range from hundreds to thousands of samples or tasks, and individual benchmarks target different abilities (e.g., MemBench for user modeling, LongMemEval for tracking catastrophic forgetting).

    - Task design emphasizes controlled memory retention, preference tracking, or multi-episode adaptation.

  - Usage: Summarized in Table 8, which categorizes benchmarks by memory focus, modality, and scale (e.g., LoCoMo tests preference consistency; LifelongAgentBench measures forward/backward transfer).

- Other Related Benchmarks

  - Composition: Benchmarks originally designed for tool use, embodiment, or reasoning that nonetheless stress long-horizon memory (e.g., WebShop, ALFWorld, SWE-Bench Verified).

  - Key details:

    - Settings include embodied (ALFWorld), web-based (WebArena), and multi-task (AgentGym) environments.

    - They implicitly test context retention across sequential actions (e.g., WebShop requires recalling prior navigation steps).

    - Evaluation formats vary: WebArena uses task-based evaluation, while GAIA assesses multi-step research.

  - Usage: Table 9 compares frameworks supporting these benchmarks, noting memory types (factual/experiential), multimodality, and internal structures (e.g., MemoryBank for episodic knowledge consolidation).

The paper treats these benchmarks purely as evaluation resources, not training data, for measuring long-context retention, state tracking, and adaptation. Rather than processing or re-running them, it analyzes the benchmarks through structured feature comparisons in Tables 8–9, highlighting memory-related aspects such as self-reflection (Evo-Memory) and tool-augmented storage (MemoryAgentBench).
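Forward and backward transfer, mentioned above for LifelongAgentBench, have standard definitions in the continual-learning literature (popularized by the Gradient Episodic Memory paper). The sketch below computes those textbook definitions from an accuracy matrix `R`, where `R[i][j]` is the score on task `j` after the agent has learned tasks `0..i`, and a vector `b` of baseline scores per task. Whether LifelongAgentBench computes its metrics exactly this way is an assumption, not something the survey summary states.

```python
from typing import Sequence


def backward_transfer(R: Sequence[Sequence[float]]) -> float:
    """Average change on earlier tasks after all T tasks have been learned.

    R is a T x T matrix; R[i][j] is the score on task j after learning
    tasks 0..i. Negative values indicate forgetting.
    """
    T = len(R)
    if T < 2:
        raise ValueError("need at least two tasks")
    return sum(R[T - 1][i] - R[i][i] for i in range(T - 1)) / (T - 1)


def forward_transfer(R: Sequence[Sequence[float]], b: Sequence[float]) -> float:
    """Average score on each task *before* it is learned, relative to a
    baseline b[i] (e.g. an agent with no prior experience)."""
    T = len(R)
    if T < 2:
        raise ValueError("need at least two tasks")
    return sum(R[i - 1][i] - b[i] for i in range(1, T)) / (T - 1)
```

On a toy three-task matrix `R = [[0.8, 0.3, 0.2], [0.7, 0.9, 0.4], [0.6, 0.8, 0.85]]` with `b = [0.1, 0.2, 0.1]`, backward transfer is about -0.15 (earlier tasks degraded) and forward transfer is about 0.2 (prior learning helped on not-yet-learned tasks).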

Method

The authors organize LLM-based agent memory systems into a multi-faceted framework that integrates memory forms, functional roles, and dynamic lifecycle processes to support persistent, adaptive, and goal-directed behavior. Rather than a monolithic architecture, the framework describes a layered ecosystem in which token-level, parametric, and latent memory coexist and interact, each serving a complementary purpose depending on whether the task demands interpretability, efficiency, or performance.

At the core of the agent loop, each agent $i \in \mathcal{I}$ observes the environment state $s_t$ and receives an observation $o_t^i = O_i(s_t, h_t^i, \mathcal{Q})$, where $h_t^i$ is the agent’s accessible interaction history and $\mathcal{Q}$ is the fixed task specification. The agent then executes an action $a_t = \pi_i(o_t^i, m_t^i, \mathcal{Q})$, where $m_t^i$ is a memory-derived signal retrieved from the evolving memory state $\mathcal{M}_t \in \mathbb{M}$. This memory state is not a static buffer but a dynamic knowledge base that undergoes continuous formation, evolution, and retrieval, forming a closed-loop cognitive cycle.
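To make the loop concrete, here is a minimal Python sketch of one agent step in the notation above: an observation function $O_i$, a policy $\pi_i$, and a memory that is read before acting and written after. All names (`Memory`, `retrieve`, `update`, `agent_step`) are hypothetical, and the word-overlap retrieval is a deliberately naive stand-in for whatever retrieval a real system uses.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Memory:
    """Hypothetical memory state M_t, kept as a list of text entries."""
    entries: List[str] = field(default_factory=list)

    def retrieve(self, observation: str, task: str, k: int = 2) -> List[str]:
        # Naive stand-in for retrieval: rank entries by word overlap with
        # the current observation and the task specification.
        query = set(f"{observation} {task}".lower().split())
        ranked = sorted(
            self.entries,
            key=lambda entry: len(query & set(entry.lower().split())),
            reverse=True,
        )
        return ranked[:k]

    def update(self, observation: str, action: str) -> None:
        # Stand-in for formation/evolution: append the latest step.
        self.entries.append(f"{observation} -> {action}")


def agent_step(
    state: str,
    history: List[str],
    task: str,
    memory: Memory,
    observe: Callable[[str, List[str], str], str],
    policy: Callable[[str, List[str], str], str],
) -> str:
    """Run one step of the loop and return the chosen action."""
    o_t = observe(state, history, task)   # o_t^i = O_i(s_t, h_t^i, Q)
    m_t = memory.retrieve(o_t, task)      # m_t^i read from M_t
    a_t = policy(o_t, m_t, task)          # a_t = pi_i(o_t^i, m_t^i, Q)
    memory.update(o_t, a_t)               # M_t -> M_{t+1}
    history.extend([o_t, a_t])            # extend h_t^i with this step
    return a_t
```

Because `observe` and `policy` are plain callables, the same loop can wrap an LLM call or, for testing, a pair of lambdas; the memory is the only state that persists across steps besides the history list.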

The memory system’s architecture is structured around three primary forms, each with distinct representational properties and operational characteristics. Token-level memory, as depicted in the taxonomy, organizes information as explicit, discrete units that can be individually accessed and modified. It is further categorized into flat (1D), planar (2D), and hierarchical (3D) topologies. Flat memory stores information as linear sequences or independent clusters, suitable for simple chunking or dialogue logs. Planar memory introduces explicit relational structures such as graphs or trees within a single layer, enabling richer semantic associations and structured retrieval. Hierarchical memory extends this by organizing information across multiple abstraction layers, supporting coarse-to-fine navigation and cross-layer reasoning, as seen in pyramid or multi-layer architectures.
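The three token-level topologies can be illustrated with plain Python containers: a list of independent chunks for flat memory, an adjacency map for planar (graph) memory, and nested summary nodes for hierarchical memory with coarse-to-fine navigation. These structures and function names are illustrative assumptions, not the data layouts of any particular system covered by the survey.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set

# Flat (1D): an ordered sequence of independent text chunks.
flat_memory: List[str] = [
    "User prefers vegetarian food.",
    "User lives in Singapore.",
]

# Planar (2D): entities linked by explicit relations within a single layer.
planar_memory: Dict[str, Set[str]] = {
    "user": {"vegetarian", "singapore"},
    "singapore": {"user"},
    "vegetarian": {"user"},
}


def neighbors(graph: Dict[str, Set[str]], node: str) -> Set[str]:
    """Structured retrieval: follow explicit relations from a node."""
    return graph.get(node, set())


# Hierarchical (3D): coarse summary nodes whose children hold finer detail.
@dataclass
class Node:
    summary: str
    children: List["Node"] = field(default_factory=list)


def coarse_to_fine(root: Node, path: List[str]) -> Optional[Node]:
    """Descend one layer per keyword, choosing the child whose summary
    contains it; return None as soon as no child matches."""
    node: Optional[Node] = root
    for keyword in path:
        node = next((c for c in node.children if keyword in c.summary.lower()), None)
        if node is None:
            return None
    return node
```

For example, with a root `"user profile"` whose child `"food preferences"` holds `"vegetarian, no peanuts"`, the path `["food", "vegetarian"]` reaches the most specific node in two hops without scanning unrelated branches.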

Parametric memory, in contrast, stores information directly within the model’s parameters, either by internalizing knowledge into the base weights or by attaching external parameter modules such as adapters or LoRA. This form is implicit and abstract: integrating knowledge directly into the model’s forward pass can improve performance, but at the cost of slower updates and potential catastrophic forgetting. Latent memory operates within the model’s internal representational space, encoding experiences as continuous embeddings, KV caches, or hidden states. It is not human-readable but is machine-native, enabling efficient multimodal fusion and low-latency inference at the cost of transparency and editability.
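Latent memory is read through the model’s own computation rather than as text. The sketch below shows the basic mechanism behind KV-cache style memory: cached key and value vectors are combined by scaled dot-product attention, $\mathrm{softmax}(q \cdot k / \sqrt{d})$, against a query vector. The vectors are tiny hand-written lists (a real model derives them from hidden states), and the class name `KVMemory` is hypothetical.

```python
import math
from typing import List

Vector = List[float]


class KVMemory:
    """Hypothetical latent memory: cached (key, value) vector pairs."""

    def __init__(self) -> None:
        self.keys: List[Vector] = []
        self.values: List[Vector] = []

    def write(self, key: Vector, value: Vector) -> None:
        # Appending to the cache plays the role of storing an experience.
        self.keys.append(key)
        self.values.append(value)

    def read(self, query: Vector) -> Vector:
        # Scaled dot-product attention over all cached entries.
        if not self.keys:
            raise ValueError("cache is empty")
        d = len(query)
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
                  for key in self.keys]
        peak = max(scores)  # subtract the max before exp for numerical stability
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        dim = len(self.values[0])
        # Softmax-weighted sum of the cached value vectors.
        return [sum(w * v[j] for w, v in zip(weights, self.values)) / total
                for j in range(dim)]
```

The readout is a continuous blend of stored values rather than a discrete lookup, which illustrates why latent memory is efficient for the model but hard for a person to inspect or edit.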

The functional architecture of the memory system is organized around three pillars: factual, experiential, and working memory. Factual memory serves as a persistent declarative knowledge base, ensuring consistency with user preferences and environmental states. Experiential memory encapsulates procedural knowledge, distilling strategies and skills from past trajectories to enable continual learning. Working memory provides a dynamic, bounded workspace for active context management during a single task or session, addressing both single-turn input condensation and multi-turn state maintenance.
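A minimal way to see the division of labor among the three functional roles: factual memory as an updatable key-value store of declarative facts, experiential memory as lessons distilled from past trajectories and indexed by task type, and working memory as a bounded buffer for the current session. The class, its methods, and the capacity of three items are illustrative assumptions.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, List


@dataclass
class AgentMemory:
    # Factual: persistent declarative knowledge (user preferences, environment state).
    facts: Dict[str, str] = field(default_factory=dict)
    # Experiential: reusable strategies distilled from past trajectories, by task type.
    lessons: Dict[str, List[str]] = field(default_factory=dict)
    # Working: bounded workspace for the active session; the oldest item drops out first.
    working: Deque[str] = field(default_factory=lambda: deque(maxlen=3))

    def set_fact(self, key: str, value: str) -> None:
        self.facts[key] = value  # a newer value overwrites the older one

    def add_lesson(self, task_type: str, lesson: str) -> None:
        self.lessons.setdefault(task_type, []).append(lesson)

    def observe(self, item: str) -> None:
        self.working.append(item)

    def context_for(self, task_type: str) -> List[str]:
        """Assemble prompt context from all three roles."""
        facts = [f"{k}: {v}" for k, v in sorted(self.facts.items())]
        return facts + self.lessons.get(task_type, []) + list(self.working)
```

The three fields differ mainly in lifetime: facts persist and are overwritten, lessons accumulate across tasks, and the working buffer silently discards old turns once its bound is reached.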

The operational dynamics of the memory system are governed by a cyclical lifecycle of formation, evolution, and retrieval. Memory formation transforms raw experiences into information-dense knowledge units through semantic summarization, knowledge distillation, structured construction, latent representation, or parametric internalization. Memory evolution then integrates these new units into the existing repository through consolidation, updating, and forgetting mechanisms, ensuring coherence, accuracy, and efficiency. Finally, memory retrieval executes context-aware queries to access relevant knowledge at the right moment, involving timing, query construction, retrieval strategies, and post-processing to deliver concise, coherent context to the LLM policy.
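The formation-evolution-retrieval cycle can be sketched as three functions over a small keyed store. Every concrete choice here is an assumption made for brevity: formation truncates text in place of LLM summarization; evolution handles updating (a new unit replaces an older one with the same key and inherits its usage count) and forgetting (the least-used unit is dropped when the store exceeds its capacity), while consolidation into higher-level summaries is omitted; retrieval ranks by word overlap and post-processes the result down to the top-k positive matches.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Unit:
    key: str       # topic the unit is about
    text: str      # condensed content
    uses: int = 0  # how many times retrieval has returned this unit


def form(raw: str, key: str, max_words: int = 8) -> Unit:
    """Formation: condense a raw experience into a compact unit.
    Truncation is a placeholder for LLM-based summarization."""
    return Unit(key=key, text=" ".join(raw.split()[:max_words]))


def evolve(store: Dict[str, Unit], unit: Unit, capacity: int = 3) -> None:
    """Evolution: update on key collision, then forget least-used units
    (never the one just written) while over capacity. Assumes capacity >= 1."""
    if unit.key in store:
        unit.uses = store[unit.key].uses  # the update inherits usage history
    store[unit.key] = unit                # newer content replaces older content
    while len(store) > capacity:
        victim = min((u for u in store.values() if u.key != unit.key),
                     key=lambda u: u.uses)
        del store[victim.key]


def retrieve(store: Dict[str, Unit], query: str, k: int = 2) -> List[str]:
    """Retrieval: score by word overlap, keep the top-k positive matches,
    and record usage so that evolution can favor useful units."""
    words = set(query.lower().split())
    scored = [(len(words & set(u.text.lower().split())), u) for u in store.values()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    hits = [u for score, u in scored if score > 0][:k]
    for u in hits:
        u.uses += 1
    return [u.text for u in hits]
```

Feeding usage counts from retrieval back into forgetting is one simple way to close the loop the paragraph describes: units that are never retrieved are the first candidates for removal.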

This entire framework is designed to be flexible and composable. Different agents may instantiate different subsets of these operations at varying temporal frequencies, giving rise to memory systems that range from passive buffers to actively evolving knowledge bases. The authors emphasize that the choice of memory type and mechanism is not arbitrary but reflects the designer’s intent for how the agent should behave in a given task, balancing trade-offs between interpretability, efficiency, and performance. The architecture thus supports a wide spectrum of applications, from multi-turn chatbots and personalized agents to reasoning-intensive tasks and multimodal, low-resource settings.
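The observation that agents instantiate different subsets of operations at different frequencies can be expressed as a schedule. In this hypothetical sketch, each operation name maps to a period in steps: a passive buffer only forms memory, while an actively evolving configuration also retrieves every step, consolidates every few steps, and forgets less often. The operation names and periods are assumptions chosen for illustration.

```python
from typing import Dict, List

# Hypothetical schedules: operation name -> run every N steps.
PASSIVE_BUFFER: Dict[str, int] = {"form": 1}
EVOLVING_KB: Dict[str, int] = {"form": 1, "retrieve": 1, "consolidate": 5, "forget": 10}


def ops_at_step(schedule: Dict[str, int], step: int) -> List[str]:
    """Return the operations due at a 1-indexed step, in schedule order."""
    return [op for op, period in schedule.items() if step % period == 0]
```

Over ten steps, `EVOLVING_KB` consolidates at steps 5 and 10 and forgets only at step 10, whereas `PASSIVE_BUFFER` never does anything beyond appending new memories.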

Experiment

- A comparative analysis of open-source memory frameworks for LLM agents shows broad support for factual memory (vector or structured stores) and growing integration of experiential traces (dialogue histories, episodic summaries) and multimodal memory.

- Frameworks range from agent-centric systems with hierarchical memory (e.g., MemGPT, MemoryOS) to general-purpose backends (e.g., Pinecone, Chroma), and many implement short/long-term separation and graph- or profile-based memory spaces.

- While some frameworks report initial results on memory benchmarks, most focus on providing scalable databases and APIs without standardized protocols for evaluating agent behavior.