SlideTailor: Personalized Presentation Slide Generation for Scientific Papers

Wenzheng Zeng Mingyu Ouyang Langyuan Cui Hwee Tou Ng

Abstract

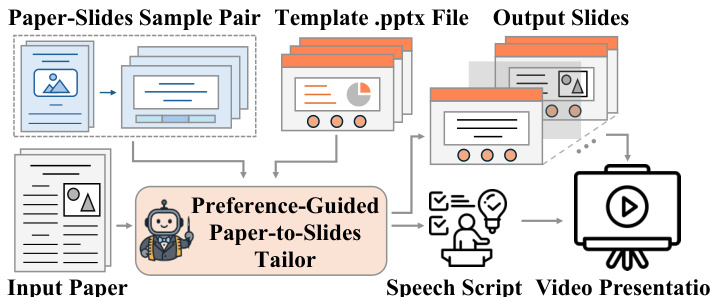

Automatic presentation slide generation can greatly streamline content creation. However, since each user's preferences may vary, existing under-specified formulations often lead to suboptimal results that fail to align with individual user needs. We introduce a novel task that conditions paper-to-slides generation on user-specified preferences. We propose a human behavior-inspired agentic framework, SlideTailor, that progressively generates editable slides in a user-aligned manner. Instead of requiring users to write their preferences in detailed textual form, our system only asks for a paper-slides example pair and a visual template: natural, easy-to-provide artifacts that implicitly encode rich user preferences across content and visual style. Despite the implicit and unlabeled nature of these inputs, our framework effectively distills and generalizes the preferences to guide customized slide generation. We also introduce a novel chain-of-speech mechanism to align slide content with planned oral narration. Such a design significantly enhances the quality of generated slides and enables downstream applications like video presentations. To support this new task, we construct a benchmark dataset that captures diverse user preferences, with carefully designed interpretable metrics for robust evaluation. Extensive experiments demonstrate the effectiveness of our framework.

One-sentence Summary

The authors, from the National University of Singapore, propose SlideTailor, a human behavior-inspired agentic framework that generates user-aligned, editable slides by inferring preferences from implicit inputs, namely an example paper-slides pair and a visual template, without requiring explicit textual descriptions. The system uses a chain-of-speech mechanism to align slide content with planned oral narration, improving slide quality and enabling downstream applications such as video presentations. Evaluated on a newly constructed benchmark with interpretable metrics, SlideTailor outperforms prior methods in preference alignment and overall slide quality.

Key Contributions

- Existing paper-to-slides generation methods often fail to align with individual user preferences due to their under-specified, one-size-fits-all nature, leading to suboptimal content and visual design in automated presentations.

- SlideTailor introduces a human behavior-inspired agentic framework that infers rich, multi-dimensional user preferences from implicit inputs—a paper-slides example pair and a .pptx template—enabling personalized, editable slide generation without requiring detailed textual instructions.

- The framework incorporates a novel chain-of-speech mechanism to align slide content with planned oral narration, and is evaluated on a newly constructed benchmark dataset with interpretable metrics, demonstrating superior performance in preference alignment and overall presentation quality.

Introduction

The authors address the challenge of generating personalized presentation slides from scientific papers. This task has traditionally been framed as a one-size-fits-all document-to-slides conversion that ignores the subjective nature of presentation design. Prior methods either lack user customization or rely on explicit, detailed preference inputs, which are burdensome and unrealistic for most users. To overcome this, the authors introduce SlideTailor, a human behavior-inspired agentic framework that learns user preferences implicitly from a paper-slides example pair and a visual template, natural artifacts that encode both content and aesthetic preferences without requiring explicit instructions. The system distills these implicit signals into a preference profile, then uses a chain-of-speech mechanism to align slide content with planned oral narration, improving coherence and enabling downstream video presentation generation. The authors also construct a benchmark dataset with interpretable metrics for evaluating preference alignment and presentation quality; experiments on this benchmark show that SlideTailor outperforms existing methods in both personalization and overall slide quality.

Dataset

- The dataset, named PSP (Paper-to-Slides with Preferences), is designed to support research on customized paper-to-slides generation with explicit modeling of user preferences.

- It is composed of three core components: 200 scientific papers serving as input targets, 50 high-quality paper-slides pairs reflecting diverse structuring and stylistic preferences, and 10 academic slide templates representing common research-oriented layouts and aesthetics.

- The source papers are manually curated from public proceedings of top-tier AI and scientific venues, including AAAI, ACL, CVPR, NeurIPS, Nature, Science, The Lancet, and Chemical Reviews Letters, covering fields such as AI, machine learning, NLP, computer vision, chemistry, and medicine to ensure broad topical and stylistic diversity.

- The 50 paper-slides pairs are selected to represent a wide range of presenter styles and disciplinary conventions, enabling the modeling of both content structuring and aesthetic presentation preferences.

- The 10 templates capture standard academic slide design patterns, providing a basis for layout customization during generation.

- The dataset enables up to 100,000 unique input combinations (200 papers × 50 pairs × 10 templates), making it the largest existing benchmark for paper-to-slides generation and uniquely supporting open-ended preference modeling.

- In the paper, the dataset serves as the evaluation benchmark: the 200 target papers are used as inputs and combined with the 50 paper-slides pairs and 10 templates to form diverse input configurations.

- At generation time, the framework is conditioned on both the input paper and a preference signal derived from a paper-slides pair and a template, allowing it to produce slides tailored to specific structuring and aesthetic preferences.

- No explicit cropping is applied to the source papers or slides; instead, the authors rely on the original content and layout structure from the curated pairs.

- Metadata for each sample includes the source venue, research domain, presentation style, and template identifier, enabling fine-grained analysis and preference-based evaluation.
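The combination count above follows from pairing every target paper with every example pair and every template. The sketch below enumerates those triples and draws an evaluation subset. The identifiers (`paper_000`, `pair_00`, `template_00`) and the random sampling scheme are hypothetical placeholders, not the PSP release format or the authors' sampling procedure.

```python
import random
from itertools import product

# Hypothetical identifiers standing in for the PSP components
PAPERS = [f"paper_{i:03d}" for i in range(200)]      # target papers
PAIRS = [f"pair_{j:02d}" for j in range(50)]          # paper-slides example pairs (content preference)
TEMPLATES = [f"template_{k:02d}" for k in range(10)]  # .pptx templates (aesthetic preference)


def all_combinations():
    """Yield every (target paper, example pair, template) triple."""
    return product(PAPERS, PAIRS, TEMPLATES)


def sample_eval_set(n_papers, seed=0):
    """Hypothetical sampling scheme: n distinct target papers, each paired
    with a randomly chosen example pair and template."""
    rng = random.Random(seed)
    chosen = rng.sample(PAPERS, n_papers)
    return [(paper, rng.choice(PAIRS), rng.choice(TEMPLATES)) for paper in chosen]


total = sum(1 for _ in all_combinations())
print(total)  # 100000

eval_set = sample_eval_set(50)
print(len(eval_set))  # 50
```

Sampling distinct papers keeps every evaluation case on a different target paper while still varying the preference inputs across cases.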

Method

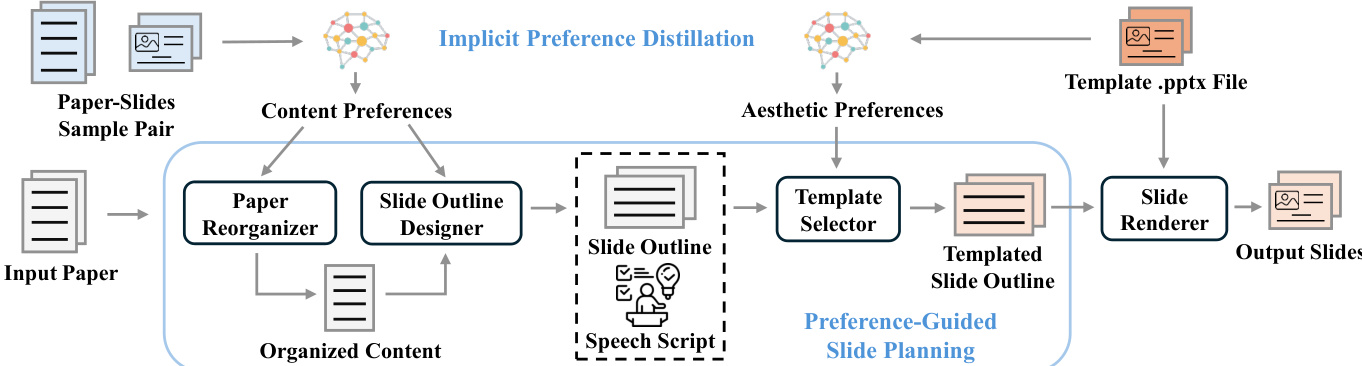

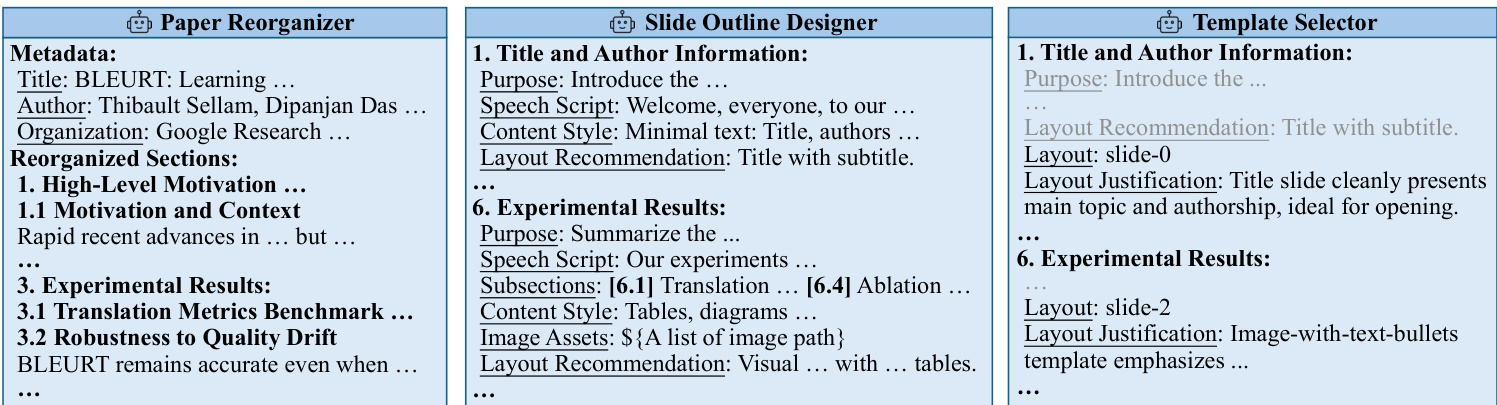

The authors propose SlideTailor, a modular framework for the preference-guided paper-to-slides generation task. It synthesizes presentation slides from a source paper while adhering to both the content and aesthetic preferences provided by the user. The process begins with two distinct user inputs: a paper-slides sample pair, which serves as a reference for content preferences such as narrative flow and emphasis, and a .pptx template file that encodes aesthetic preferences including layout, color, and typography. The pipeline first distills implicit preferences from the sample pair and template, then uses them to guide the generation of a structured slide outline and, finally, the realization of the slides themselves.
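To make the separation of the two input channels concrete, here is a minimal sketch of a preference profile with a content part (from the example pair) and an aesthetic part (from the template). The paper's agents perform this distillation with (M)LLM backbones; the simple statistics below (`slides_per_section`, `avg_bullets_per_slide`, `section_order`) and the template fields are hypothetical stand-ins chosen only to show the data flow.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ContentPreference:
    slides_per_section: float     # how densely the presenter covers each paper section
    avg_bullets_per_slide: float  # how text-heavy the example slides are
    section_order: List[str]      # narrative flow: order in which sections are presented


@dataclass
class AestheticPreference:
    layouts: List[str]  # layout names available in the template
    font: str
    accent_color: str


@dataclass
class PreferenceProfile:
    content: ContentPreference      # distilled from the paper-slides example pair
    aesthetic: AestheticPreference  # distilled from the .pptx template


def distill_content(paper_sections: List[str], slides: List[Dict]) -> ContentPreference:
    """Heuristic stand-in for MLLM-based distillation over the example pair.
    Each slide is a dict with a 'section' name and a list of 'bullets'."""
    if not paper_sections or not slides:
        raise ValueError("need a non-empty example paper and slide deck")
    slides_per_section = len(slides) / len(paper_sections)
    avg_bullets = sum(len(s["bullets"]) for s in slides) / len(slides)
    order: List[str] = []
    for s in slides:  # first appearance of each section defines the flow
        if s["section"] not in order:
            order.append(s["section"])
    return ContentPreference(slides_per_section, avg_bullets, order)


def distill_aesthetic(template_meta: Dict) -> AestheticPreference:
    """Read style settings from a hypothetical, already-parsed template description."""
    return AestheticPreference(
        layouts=list(template_meta["layouts"]),
        font=template_meta.get("font", "Calibri"),
        accent_color=template_meta.get("accent_color", "#000000"),
    )


example_slides = [
    {"section": "Introduction", "bullets": ["Problem", "Gap"]},
    {"section": "Method", "bullets": ["Overview", "Module A", "Module B"]},
    {"section": "Method", "bullets": ["Module C"]},
    {"section": "Results", "bullets": ["Main table", "Ablation"]},
]
profile = PreferenceProfile(
    content=distill_content(["Introduction", "Related Work", "Method", "Results"], example_slides),
    aesthetic=distill_aesthetic({"layouts": ["Title Slide", "Title and Content"], "font": "Helvetica"}),
)
print(profile.content.section_order)  # ['Introduction', 'Method', 'Results']
```

Keeping the two parts in separate types mirrors the framework's choice to treat content and aesthetics as orthogonal inputs: either one can be swapped without touching the other.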

The framework operates in three stages: implicit preference distillation, preference-guided slide planning, and slide realization. In the distillation stage, the system analyzes the paper-slides sample pair to extract content preferences and the template file to extract aesthetic preferences. In the planning stage, a series of specialized modules turns these preferences into a slide plan. The Paper Reorganizer processes the input paper, guided by the reference paper-slides pair, to produce organized content that reflects the desired structure and emphasis. The Slide Outline Designer then generates a detailed presentation outline, including slide topics, purposes, speech drafts, and content styles, based on the reorganized content and user preferences. This outline is further refined by a speech script generator. Concurrently, the Template Selector uses the aesthetic preferences derived from the template to recommend a suitable layout for each slide in the outline.

The final stage, slide realization, integrates the outputs of planning. The Slide Renderer takes the templated slide outline and the selected template to generate the output slides. A layout-aware agent maps the planned content elements (e.g., titles, text, visuals) to specific components within the template (e.g., text boxes, image placeholders); this mapping may involve modifying, replacing, or inserting elements to keep each slide coherent. A code agent then generates executable code that applies these edits directly to the .pptx file, preserving the original layout and theme while producing fully editable slides. The framework also supports speaker-aware video presentations, composing the generated slides with synthesized narration and talking-head videos so that audio and visuals stay synchronized.
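The outline produced by the Slide Outline Designer and the Template Selector's per-slide layout choice can be pictured as a small data structure. In this sketch each outline entry carries the fields listed above (topic, purpose, speech draft, content style); slide bullets are derived from the speech draft to mimic narration-first planning in the spirit of chain-of-speech; and a lookup table assigns layouts. The sentence-splitting heuristic, the `LAYOUT_PREFERENCES` table, and the layout names are hypothetical, since the paper's agentic modules make these decisions rather than fixed rules.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class OutlineEntry:
    topic: str
    purpose: str
    speech_draft: str   # narration planned before the slide text is written
    content_style: str  # e.g. "title", "bullets", "figure", "comparison"
    bullets: List[str] = field(default_factory=list)


def bullets_from_speech(speech: str, max_bullets: int = 4, max_words: int = 8) -> List[str]:
    """Crude stand-in for speech-first planning: one short bullet per spoken
    sentence, so the slide text follows the planned narration."""
    sentences = [s.strip() for s in speech.split(".") if s.strip()]
    return [" ".join(s.split()[:max_words]) for s in sentences[:max_bullets]]


# Hypothetical preference order of layout names for each content style
LAYOUT_PREFERENCES = {
    "title": ["Title Slide", "Title Only"],
    "bullets": ["Title and Content"],
    "figure": ["Picture with Caption", "Title and Content"],
    "comparison": ["Comparison", "Two Content"],
}


def select_layout(entry: OutlineEntry, available_layouts: List[str]) -> str:
    """Pick the first preferred layout that the template actually provides."""
    if not available_layouts:
        raise ValueError("template has no layouts")
    for name in LAYOUT_PREFERENCES.get(entry.content_style, []):
        if name in available_layouts:
            return name
    return available_layouts[0]  # fall back to the template's first layout


entry = OutlineEntry(
    topic="Method overview",
    purpose="Summarize the pipeline",
    speech_draft="We first distill preferences from the example pair. "
                 "Then we plan an outline. Finally we render the slides.",
    content_style="bullets",
)
entry.bullets = bullets_from_speech(entry.speech_draft)
print(entry.bullets)
# ['We first distill preferences from the example pair', 'Then we plan an outline', 'Finally we render the slides']
print(select_layout(entry, ["Title Slide", "Title and Content", "Two Content"]))
# Title and Content
```

Writing the speech draft first and deriving the bullets from it is what keeps the slide text aligned with what will be said; the layout choice only depends on the content style and on what the template offers.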

The framework's design enables a flexible and user-friendly approach to presentation creation. By separating content and aesthetic preferences into distinct, orthogonal inputs, the system allows for modular adaptation. The use of a template-based editing strategy ensures that the final output maintains the user's desired visual style while being fully editable. The entire process is driven by a series of specialized modules, including the Paper Reorganizer, Slide Outline Designer, and Template Selector, which work in concert to transform a raw paper into a polished, personalized presentation.
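The template-based editing strategy can be sketched as a greedy matcher that assigns each planned content element to a compatible placeholder on the chosen layout and falls back to inserting a new shape when none is free. The `Placeholder` and `Element` types, the `COMPATIBLE` table, and the edit-dictionary format are hypothetical. In SlideTailor, a layout-aware agent makes these choices and a code agent applies them to the .pptx file; this sketch stops at the edit list, which a library such as python-pptx could then execute.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Placeholder:
    idx: int
    kind: str   # "title", "body", or "picture" (hypothetical template schema)


@dataclass
class Element:
    kind: str   # "title", "text", or "image"
    value: str  # text content or image path


# Which placeholder kind can host which content element
COMPATIBLE = {"title": "title", "text": "body", "image": "picture"}


def plan_edits(elements: List[Element], placeholders: List[Placeholder]) -> List[Dict]:
    """Greedy layout-aware mapping: fill compatible placeholders in order,
    and insert a new shape when no compatible placeholder is left."""
    free = list(placeholders)
    edits = []
    for el in elements:
        target_kind = COMPATIBLE[el.kind]
        match = next((p for p in free if p.kind == target_kind), None)
        if match is None:
            edits.append({"op": "insert", "kind": el.kind, "value": el.value})
        else:
            free.remove(match)
            op = "replace" if el.kind == "image" else "modify"
            edits.append({"op": op, "placeholder": match.idx, "value": el.value})
    return edits


PLACEHOLDERS = [Placeholder(0, "title"), Placeholder(1, "body"), Placeholder(2, "picture")]
ELEMENTS = [
    Element("title", "SlideTailor pipeline"),
    Element("text", "Distill, plan, realize"),
    Element("image", "figs/overview.png"),
    Element("image", "figs/ablation.png"),
]
edits = plan_edits(ELEMENTS, PLACEHOLDERS)
for e in edits:
    print(e)
# {'op': 'modify', 'placeholder': 0, 'value': 'SlideTailor pipeline'}
# {'op': 'modify', 'placeholder': 1, 'value': 'Distill, plan, realize'}
# {'op': 'replace', 'placeholder': 2, 'value': 'figs/overview.png'}
# {'op': 'insert', 'kind': 'image', 'value': 'figs/ablation.png'}
```

Editing existing placeholders rather than drawing new shapes is what preserves the template's positions, fonts, and theme; insertion is only the fallback for content the layout cannot hold.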

Experiment

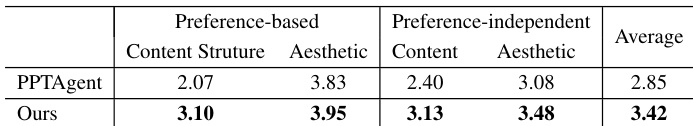

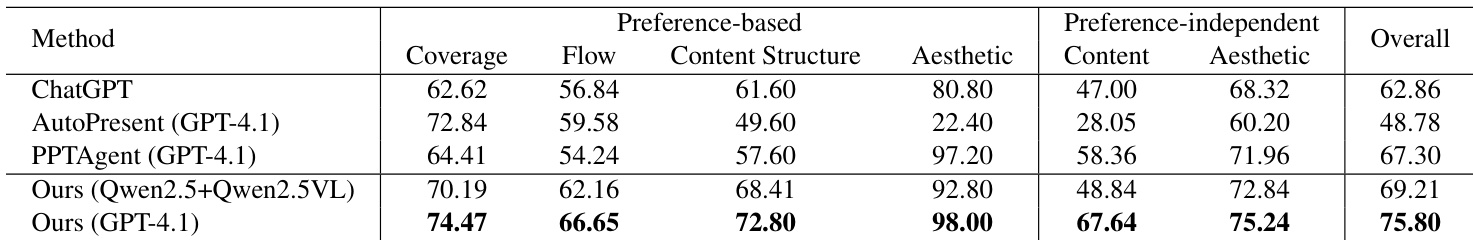

- Evaluated on the PSP dataset using 50 target papers, the proposed SlideTailor framework achieved an overall score of 75.8% on the MLLM-based evaluation, surpassing three state-of-the-art baselines (ChatGPT, AutoPresent, PPTAgent) across both preference-based and preference-independent metrics.

- Demonstrated strong adaptability by achieving competitive performance with open-source models Qwen2.5-72B-Instruct and Qwen2.5-VL-72B-Instruct, confirming robustness across different MLLM backbones without model-specific tuning.

- Ablation studies showed that removing content preference guidance degraded performance by ~10% on key metrics (coverage, flow, content structure), validating the importance of implicit preference distillation; disabling the chain-of-speech mechanism reduced overall quality from 66.4% to 47.3%, highlighting its role in improving content clarity and coherence.

- Human evaluation with 60 independent ratings (4 annotators, 30 cases) showed SlideTailor was preferred in 81.63% of cases over PPTAgent, with strong correlation (Pearson r = 0.64) between human and MLLM-based scores, confirming the validity of the automatic evaluation framework.

- Qualitative analysis confirmed superior alignment with input templates and sample slides in both aesthetic and content-structure aspects, with accurate visual element extraction, proper slide sequencing, and effective integration of text and visuals, outperforming baselines in layout fidelity, visual consistency, and informativeness.
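For scale, the chain-of-speech ablation figures quoted above correspond to the following absolute and relative drops (simple arithmetic on the reported numbers, treating both as percentage scores on the same scale):

```latex
\Delta_{\text{abs}} = 66.4 - 47.3 = 19.1 \text{ points}, \qquad
\Delta_{\text{rel}} = \frac{66.4 - 47.3}{66.4} = \frac{19.1}{66.4} \approx 28.8\%
```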

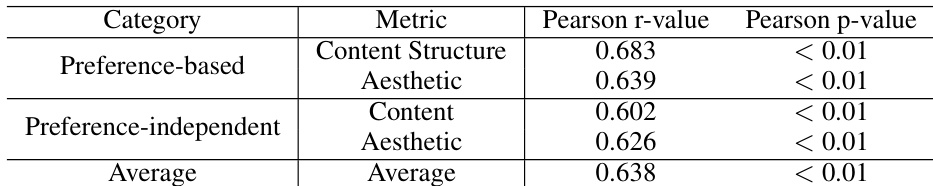

Results show a strong correlation between human ratings and MLLM-based evaluations across both preference-based and preference-independent metrics, with Pearson correlation coefficients ranging from 0.602 to 0.683 and all p-values below 0.01. The average correlation across all metrics is 0.638, indicating that the automatic evaluation framework agrees well with human judgments.
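The correlation analysis can be reproduced in outline, assuming each metric yields paired per-case scores from human raters and from the MLLM judge: compute a Pearson coefficient per metric, then average the coefficients. The scores below are toy values for illustration, not the paper's data; `scipy.stats.pearsonr` computes the same coefficient and also reports a p-value.

```python
import math
from typing import Dict, List, Tuple


def pearson_r(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation between paired human and MLLM scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length score lists with at least 2 items")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined when one side is constant")
    return cov / (sx * sy)


def mean_correlation(per_metric: Dict[str, Tuple[List[float], List[float]]]) -> float:
    """Average the per-metric coefficients into a single summary value."""
    rs = [pearson_r(human, mllm) for human, mllm in per_metric.values()]
    return sum(rs) / len(rs)


# Toy scores (illustrative only)
toy = {
    "content_structure": ([3, 4, 2, 5, 4], [3, 5, 2, 4, 4]),
    "aesthetic": ([2, 3, 4, 4, 5], [2, 3, 3, 5, 5]),
}
for name, (human, mllm) in toy.items():
    print(name, round(pearson_r(human, mllm), 3))
print("mean", round(mean_correlation(toy), 3))
```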

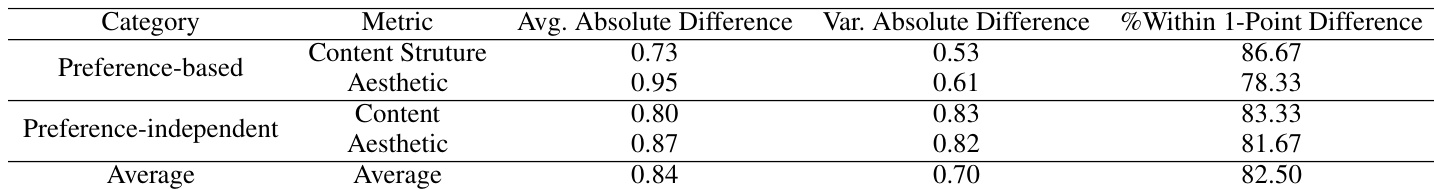

The authors analyze the consistency of human evaluations by computing absolute differences in scores across annotators. Results show that for preference-based metrics, the average absolute difference is 0.73 for content structure and 0.95 for aesthetic, with 86.67% and 78.33% of cases falling within a one-point difference, respectively. For preference-independent metrics, the average absolute differences are 0.80 for content and 0.87 for aesthetic, with 83.33% and 81.67% of cases within a one-point difference, indicating moderate agreement among human evaluators.
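The two agreement statistics reported here, mean absolute difference and the share of cases within one point, are straightforward to compute. A minimal sketch, assuming two annotators' scores are aligned case by case on the same integer scale; the scores are toy values, not the paper's annotations.

```python
from typing import List, Tuple


def agreement_stats(scores_a: List[int], scores_b: List[int], tolerance: int = 1) -> Tuple[float, float]:
    """Return (mean absolute difference, % of cases differing by <= tolerance)."""
    if len(scores_a) != len(scores_b) or not scores_a:
        raise ValueError("need two non-empty, equal-length score lists")
    diffs = [abs(a - b) for a, b in zip(scores_a, scores_b)]
    mean_abs = sum(diffs) / len(diffs)
    within = 100 * sum(d <= tolerance for d in diffs) / len(diffs)
    return mean_abs, within


annotator_1 = [4, 3, 5, 2, 4, 3]
annotator_2 = [4, 4, 3, 2, 5, 3]
mean_abs, within = agreement_stats(annotator_1, annotator_2)
print(round(mean_abs, 2), round(within, 2))  # 0.67 83.33
```

The within-tolerance rate complements the mean: two annotator pairs can share the same mean difference while differing in how many cases disagree by more than a point.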

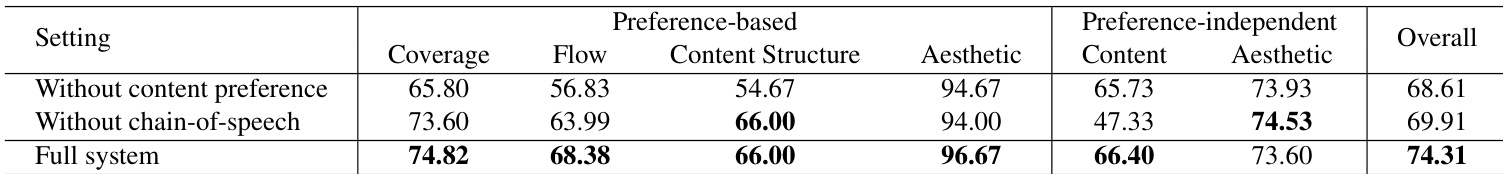

The authors use ablation studies to evaluate the impact of key components in their framework, showing that removing content preference guidance significantly reduces performance across preference-based metrics, particularly coverage, flow, and content structure. Disabling the chain-of-speech mechanism also leads to a substantial drop in overall performance, especially in content quality, highlighting the importance of aligning slide planning with anticipated narration. The full system achieves the highest scores across all metrics, demonstrating the effectiveness of both components.

The authors use a comprehensive set of preference-based and preference-independent metrics to evaluate slide generation methods, with results showing that their framework achieves the highest overall score of 75.8% when using GPT-4.1, outperforming all baselines across both metric categories. The method demonstrates strong alignment with user preferences in content structure and aesthetic design, while also producing high-quality slides in terms of content clarity and visual appeal, as validated by both automated evaluations and human assessments.

In the head-to-head comparison with PPTAgent, the authors' method scores higher on every preference-based and preference-independent metric, including content structure, aesthetic alignment, content quality, and overall presentation, indicating both better alignment with user preferences and higher general slide quality.