Automated stereotactic radiosurgery planning using a human-in-the-loop reasoning large language model agent

Abstract

Stereotactic radiosurgery (SRS) demands precise dose shaping around critical structures, yet black-box AI systems have limited clinical adoption due to opacity concerns. We tested whether chain-of-thought reasoning improves agentic planning in a retrospective cohort of 41 patients with brain metastases treated with 18 Gy single-fraction SRS. We developed SAGE (Secure Agent for Generative Dose Expertise), an LLM-based planning agent for automated SRS treatment planning. Two variants generated plans for each case: one using a non-reasoning model, one using a reasoning model. The reasoning variant showed comparable plan dosimetry relative to human planners on primary endpoints (PTV coverage, maximum dose, conformity index, gradient index; all p > 0.21) while reducing cochlear dose below human baselines (p = 0.022). When prompted to improve conformity, the reasoning model demonstrated systematic planning behaviors including prospective constraint verification (457 instances) and trade-off deliberation (609 instances), while the standard model exhibited almost none of these deliberative processes (0 and 7 instances, respectively). Content analysis revealed that constraint verification and causal explanation concentrated in the reasoning agent. The optimization traces serve as auditable logs, offering a path toward transparent automated planning.

One-sentence Summary

Henry Ford Health and Michigan State University researchers developed SAGE, an LLM-based stereotactic radiosurgery planner using chain-of-thought reasoning to generate transparent, auditable dose plans for brain metastases. Unlike non-reasoning models, SAGE's reasoning variant demonstrated prospective constraint verification and trade-off deliberation, matching human dosimetry on key metrics while significantly reducing cochlear dose in 41 patient cases, offering a path toward clinically adoptable AI planning.

Key Contributions

- Stereotactic radiosurgery (SRS) planning for brain metastases faces clinical adoption barriers due to the opacity of conventional black-box AI systems, which lack transparency in complex scenarios requiring precise dose shaping near critical structures.

- The study introduces SAGE, an LLM-based planning agent that leverages chain-of-thought reasoning to generate auditable optimization traces, enabling systematic constraint verification and trade-off deliberation absent in non-reasoning models.

- In a retrospective cohort of 41 patients, the reasoning variant achieved comparable dosimetry to human planners on primary endpoints (PTV coverage, maximum dose, conformity, and gradient indices; all p>0.21) while significantly reducing cochlear dose (p=0.022) and demonstrating 457 constraint verifications and 609 trade-off deliberations versus near-zero instances in the non-reasoning model.

Introduction

Stereotactic radiosurgery (SRS) for brain metastases demands extreme precision: a high dose is delivered in a single session near critical organs at risk, so steep dose gradients are needed to spare healthy brain tissue. This complexity strains an already limited pool of specialized planners and restricts SRS access primarily to academic centers. Prior AI-driven planning approaches relied on site-specific neural networks trained on institutional data. These functioned as opaque black boxes and scaled poorly across treatment centers. Such limitations hinder clinical adoption, because regulatory frameworks and radiation oncology professionals prioritize explainable decision-making. The authors address this by implementing SAGE, a human-in-the-loop large language model agent designed for iterative, reasoning-driven SRS optimization. They demonstrate that a reasoning-capable LLM, which generates explicit intermediate steps for spatial reasoning and constraint validation, produces auditable decision logs while improving plan quality over a non-reasoning model, directly tackling the transparency and geometric complexity barriers in SRS planning.

Dataset

- The authors use a retrospective dataset of 41 brain metastasis patients treated with single-target stereotactic radiosurgery (SRS) at their institution between 2022 and 2024, adhering to clinical guidelines (18 Gy single fraction).

- Dataset composition includes CT images, segmented anatomical structures, clinical treatment plans, and dosimetric data, all sourced from the Varian Eclipse Treatment Planning System (version 16.1).

- All plans underwent dose calculation via the AAA algorithm (version 15.6.06) with a 1.25 mm dose grid resolution; beam geometry was fixed to match original clinical configurations.

- Dose-volume histogram (DVH) computation and photon optimization used Eclipse algorithms (version 15.6.05); both the retrospective clinical plans and the SAGE-generated plans were housed entirely within Eclipse.

- The data are used for direct comparison between clinical plans and SAGE-generated alternatives. No training splits or mixture ratios are applied; the dataset serves only to validate plan quality within the clinical workflow.

- Processing involved strict adherence to institutional protocols, IRB approval, and consistent use of Eclipse tools without additional cropping or metadata construction beyond standard clinical outputs.

Method

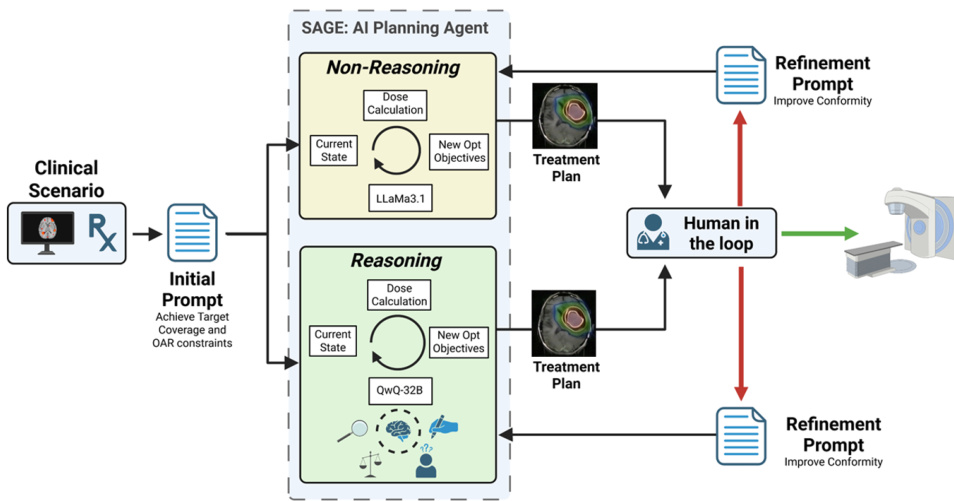

The authors leverage a dual-variant architecture within the SAGE framework to automate radiation treatment planning, integrating both non-reasoning and reasoning large language models (LLMs) within an iterative optimization loop. Upon initialization, the agent ingests the clinical scenario—including patient anatomy, target volume specifications, spatial relationships between the planning target volume (PTV) and organs at risk (OARs), and the prescription dose (18 Gy in a single fraction)—alongside the current optimizer state, which encapsulates all relevant dosimetric parameters such as DVH metrics for PTV and OARs. The agent is then prompted to achieve target coverage while strictly adhering to OAR constraints.
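The inputs described above can be pictured as a small state object rendered into a text prompt. The following is a minimal sketch only: `OptimizerState`, its field names, and `build_prompt` are hypothetical names introduced for illustration, and the source does not describe the actual prompt format SAGE uses.

```python
from dataclasses import dataclass, field


@dataclass
class OptimizerState:
    """Hypothetical snapshot of the dosimetric state shown to the agent."""
    ptv_coverage_pct: float   # percent of the PTV receiving the prescription dose
    max_dose_gy: float        # global maximum dose in Gy
    oar_max_dose_gy: dict[str, float] = field(default_factory=dict)


def build_prompt(prescription_gy: float, state: OptimizerState) -> str:
    """Render the prescription and current state as plain text (assumed layout)."""
    lines = [
        f"Prescription: {prescription_gy:g} Gy in a single fraction.",
        f"PTV coverage: {state.ptv_coverage_pct:.1f}%",
        f"Maximum dose: {state.max_dose_gy:.1f} Gy",
    ]
    # Sort OAR names so the prompt is identical for identical states.
    for name, dose in sorted(state.oar_max_dose_gy.items()):
        lines.append(f"OAR {name}: max dose {dose:.1f} Gy")
    lines.append(
        "Achieve target coverage while strictly adhering to OAR constraints."
    )
    return "\n".join(lines)
```

Sorting the OAR entries keeps the rendered prompt deterministic, which makes logged prompts easier to compare across iterations.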

As the framework diagram shows, the system splits into two parallel execution paths: one for the non-reasoning model (Llama 3.1-70B) and one for the reasoning model (Qwen QwQ-32B). Both variants operate through identical iterative cycles comprising LLM-driven parameter adjustment, dose calculation, plan evaluation, and objective updates. Each cycle produces a new set of optimization objectives based on the current state, which feeds back into the next iteration. Optimization terminates when all clinical goals are simultaneously satisfied, or after a maximum of ten iterations, at which point the best-performing plan is selected according to deterministic stopping logic.
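The control flow of this loop can be sketched as follows. This is an illustrative outline under stated assumptions, not the authors' implementation: the callables `propose`, `calculate`, `goals_met`, and `score` are hypothetical stand-ins for the LLM call, the Eclipse dose calculation and evaluation, the clinical-goal check, and the plan-ranking rule, none of which are specified in detail in the source.

```python
from typing import Callable, Optional

Objectives = dict  # placeholder type for a set of optimization objectives
Plan = dict        # placeholder type for an evaluated plan and its metrics

MAX_ITERATIONS = 10  # the text states a maximum of ten iterations


def run_planning_loop(
    propose: Callable[[Optional[Plan]], Objectives],
    calculate: Callable[[Objectives], Plan],
    goals_met: Callable[[Plan], bool],
    score: Callable[[Plan], float],
    max_iterations: int = MAX_ITERATIONS,
) -> tuple[Plan, int]:
    """Iterate until all goals are met or the iteration budget is spent.

    Returns the selected plan and the number of iterations used.
    """
    if max_iterations < 1:
        raise ValueError("max_iterations must be at least 1")

    best_plan: Optional[Plan] = None
    best_score = float("-inf")
    current: Optional[Plan] = None

    for iteration in range(1, max_iterations + 1):
        objectives = propose(current)    # LLM-driven parameter adjustment
        current = calculate(objectives)  # dose calculation and plan evaluation
        if goals_met(current):           # all clinical goals satisfied: stop early
            return current, iteration
        plan_score = score(current)      # assumed ranking rule (higher is better)
        if plan_score > best_score:
            best_plan, best_score = current, plan_score

    # Budget exhausted: fall back to the best plan seen so far.
    return best_plan, max_iterations
```

Keeping the stopping and selection logic outside the LLM call means the model only proposes objectives, while termination stays deterministic, which matches the description of "deterministic stopping logic" above.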

Following optimization, the resulting treatment plan enters a human-in-the-loop review stage, where a board-certified medical physicist evaluates whether quantitative clinical criteria are met. Plans failing conformity benchmarks are returned to SAGE with a standardized natural language refinement prompt requesting improved dose conformity while preserving target coverage and OAR constraints. This prompt is uniformly applied across all cases and model variants, ensuring consistent evaluation of the agent’s responsiveness to human feedback. The two-stage architecture thus enables assessment of both autonomous planning capability and adaptive refinement under human guidance.
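The review stage can be sketched as a simple gate around the agent. This is a hedged illustration: `review_and_refine`, `meets_conformity`, and `refine` are hypothetical names, the prompt string is a paraphrase of the description above rather than the study's actual wording, and a single refinement round is an assumption since the source does not state how many rounds are allowed.

```python
from typing import Callable

Plan = dict  # placeholder type for an evaluated plan and its metrics

# Paraphrase of the standardized request; the exact wording is not given in the source.
REFINEMENT_PROMPT = (
    "Improve dose conformity while preserving target coverage "
    "and all OAR constraints."
)


def review_and_refine(
    plan: Plan,
    meets_conformity: Callable[[Plan], bool],
    refine: Callable[[Plan, str], Plan],
) -> tuple[Plan, bool]:
    """Return the final plan and whether a refinement round was triggered."""
    if meets_conformity(plan):  # reviewer's quantitative check passes
        return plan, False
    # The same fixed prompt is sent for every case and model variant.
    return refine(plan, REFINEMENT_PROMPT), True
```

Using one fixed prompt constant, rather than free-form reviewer text, is what makes responses comparable across cases and model variants.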

Experiment

- Tested SAGE (LLM-based planning agent) on 41 brain metastasis patients for 18 Gy SRS, comparing reasoning (Qwen QwQ-32B) and non-reasoning (Llama 3.1-70B) variants against human plans

- Reasoning variant showed no statistically significant difference from clinical plans on primary dosimetric endpoints: PTV coverage 96.8% (vs 96.5% clinical, p=0.21), conformity index, gradient index, and max dose (all p>0.21)

- Significantly reduced right cochlear dose versus clinical plans (p=0.022 after BH correction), with all plans meeting safety thresholds

- Upon refinement prompts, reasoning model improved conformity index more consistently (p<0.001) than non-reasoning variant (p=0.007), approaching clinical benchmarks

- Deliberative behaviors were concentrated almost entirely in the reasoning model: constraint verification (457 instances vs 0 in the non-reasoning model) and trade-off deliberation (609 instances vs 7)

- Produced five-fold fewer format errors (median 0 vs 3 per patient) while maintaining auditable optimization traces
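The cochlear-dose result above is reported "after BH correction", which presumably refers to the Benjamini-Hochberg false discovery rate procedure. As a minimal sketch of how BH-adjusted p-values are computed (a generic textbook version in plain Python, not the authors' analysis code; the function name is introduced here for illustration):

```python
def benjamini_hochberg(p_values: list[float]) -> list[float]:
    """Return Benjamini-Hochberg adjusted p-values in the original order."""
    m = len(p_values)
    # Indices of the p-values sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so each adjusted value is the
    # minimum of p * m / rank over this rank and all higher ranks.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted
```

A comparison is declared significant at false discovery rate `alpha` when its adjusted p-value is at most `alpha`. In practice a maintained implementation such as `statsmodels.stats.multitest.multipletests` with `method="fdr_bh"` would usually be preferred over a hand-written one.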