AI Meets Brain: Memory Systems from Cognitive Neuroscience to Autonomous Agents

Abstract

Memory serves as the pivotal nexus bridging past and future, providing both humans and AI systems with invaluable concepts and experience to navigate complex tasks. Recent research on autonomous agents has increasingly focused on designing efficient memory workflows by drawing on cognitive neuroscience. However, constrained by interdisciplinary barriers, existing works struggle to assimilate the essence of human memory mechanisms. To bridge this gap, we systematically synthesize interdisciplinary knowledge of memory, connecting insights from cognitive neuroscience with LLM-driven agents. Specifically, we first elucidate the definition and function of memory along a progressive trajectory from cognitive neuroscience through LLMs to agents. We then provide a comparative analysis of memory taxonomy, storage mechanisms, and the complete management lifecycle from both biological and artificial perspectives. Subsequently, we review the mainstream benchmarks for evaluating agent memory. Additionally, we explore memory security from dual perspectives of attack and defense. Finally, we envision future research directions, with a focus on multimodal memory systems and skill acquisition.

One-sentence Summary

Harbin Institute of Technology, Fudan University, Peking University, and National University of Singapore present a unified survey on memory systems, proposing a comprehensive taxonomy that integrates cognitive neuroscience with LLM-driven agents by classifying memory along nature- and scope-based dimensions. The work systematically analyzes memory storage, management, and security across biological and artificial systems, introducing a closed-loop framework for memory extraction, updating, retrieval, and utilization. It highlights key innovations such as hierarchical memory structures, dynamic scheduling mechanisms, and latent memory representations, with applications in long-horizon planning, personalized agent behavior, and secure memory systems, while envisioning future directions in multimodal memory and cross-agent skill transfer.

Key Contributions

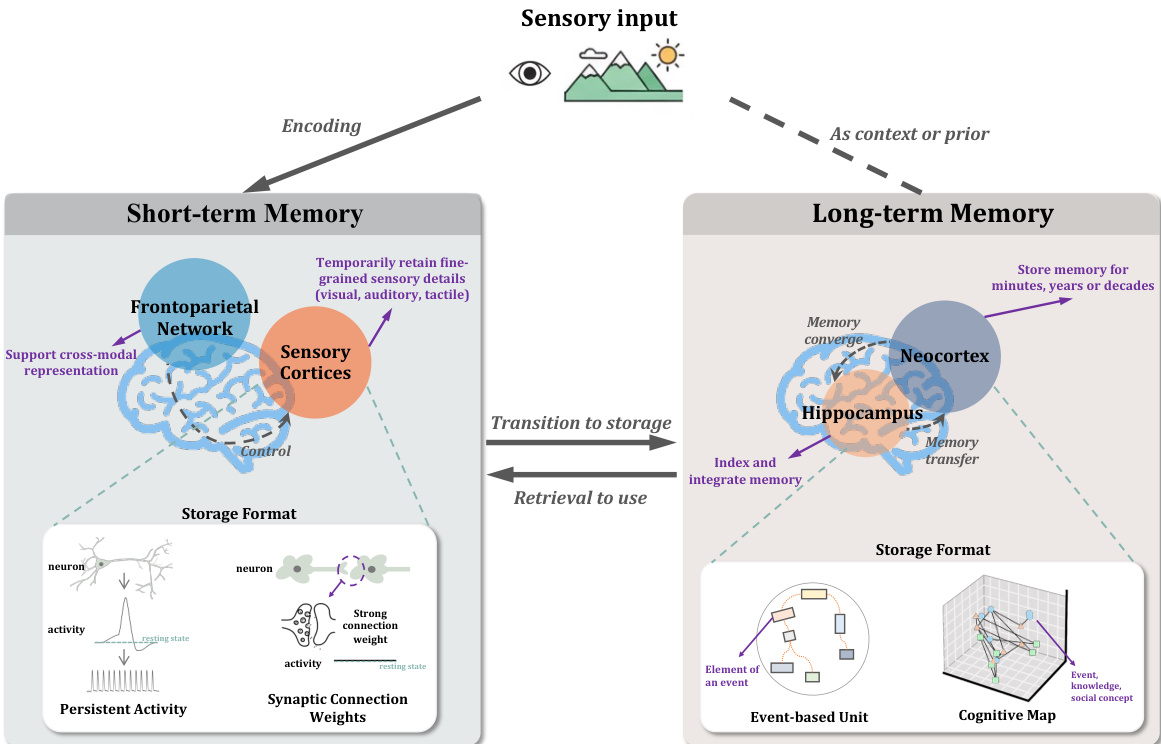

- This survey bridges cognitive neuroscience and AI by establishing a unified framework for understanding memory across human brains, large language models (LLMs), and autonomous agents, emphasizing its role as a dynamic cognitive hub that enables learning, adaptation, and long-horizon planning.

- It introduces a novel two-dimensional taxonomy for agent memory based on nature (episodic vs. semantic) and scope (within vs. across trajectories), and provides a comparative analysis of memory storage mechanisms and management lifecycles, including encoding, retrieval, updating, and utilization in both biological and artificial systems.

- The work systematically evaluates agent memory through semantic- and episodic-oriented benchmarks, addresses critical memory security challenges via attack and defense analyses, and outlines future directions in multimodal memory integration and reusable skill transfer across agents.

Introduction

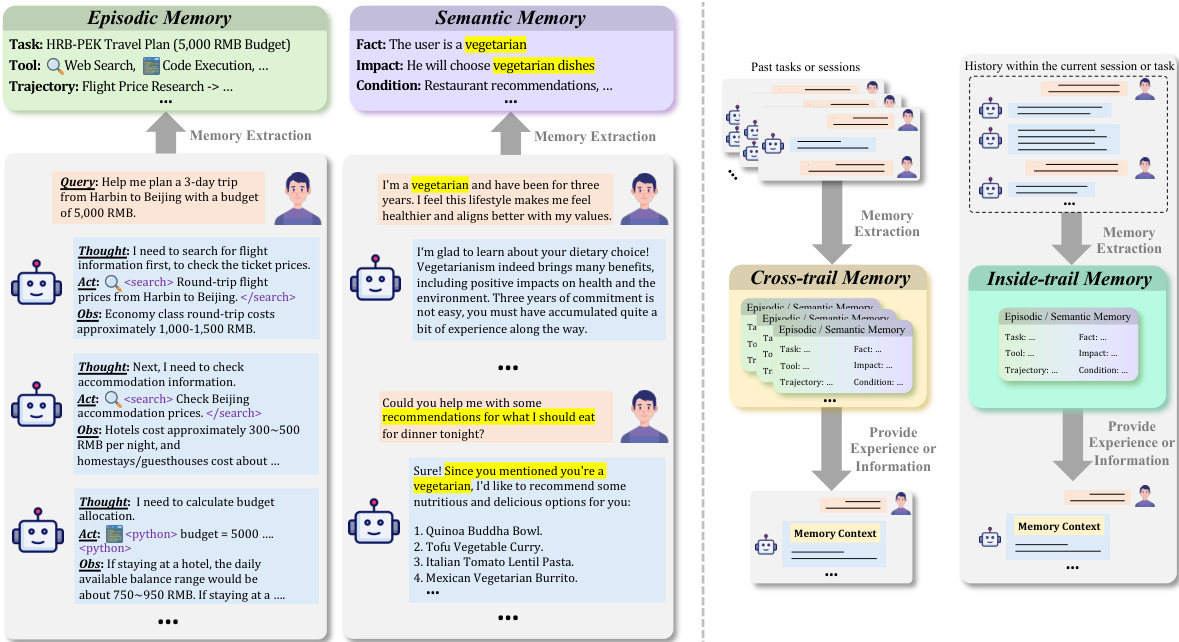

The authors leverage insights from cognitive neuroscience to address a critical challenge in AI: enabling autonomous agents to develop human-like memory systems that support long-term learning, personalization, and adaptive decision-making. While prior work on agent memory has largely operated in silos—either focusing on isolated technical implementations in LLMs or superficially referencing biological principles—these approaches fail to capture the dynamic, hierarchical, and interactive nature of real memory. The main contribution is a unified, cross-disciplinary framework that systematically maps memory across three levels: cognitive neuroscience, LLMs, and agents. This includes a novel two-dimensional taxonomy—nature-based (episodic vs. semantic) and scope-based (inside-trail vs. cross-trail)—to better classify agent memory, a comparative analysis of storage mechanisms (e.g., persistent activity vs. vector databases), and a full lifecycle model of memory management encompassing encoding, consolidation, retrieval, and updating. The survey further introduces memory security as a key concern, analyzing both attack vectors and defense strategies, and outlines future directions in multimodal memory and cross-agent skill transfer, positioning the work as a foundational resource for building more intelligent, resilient, and human-aligned agents.

Dataset

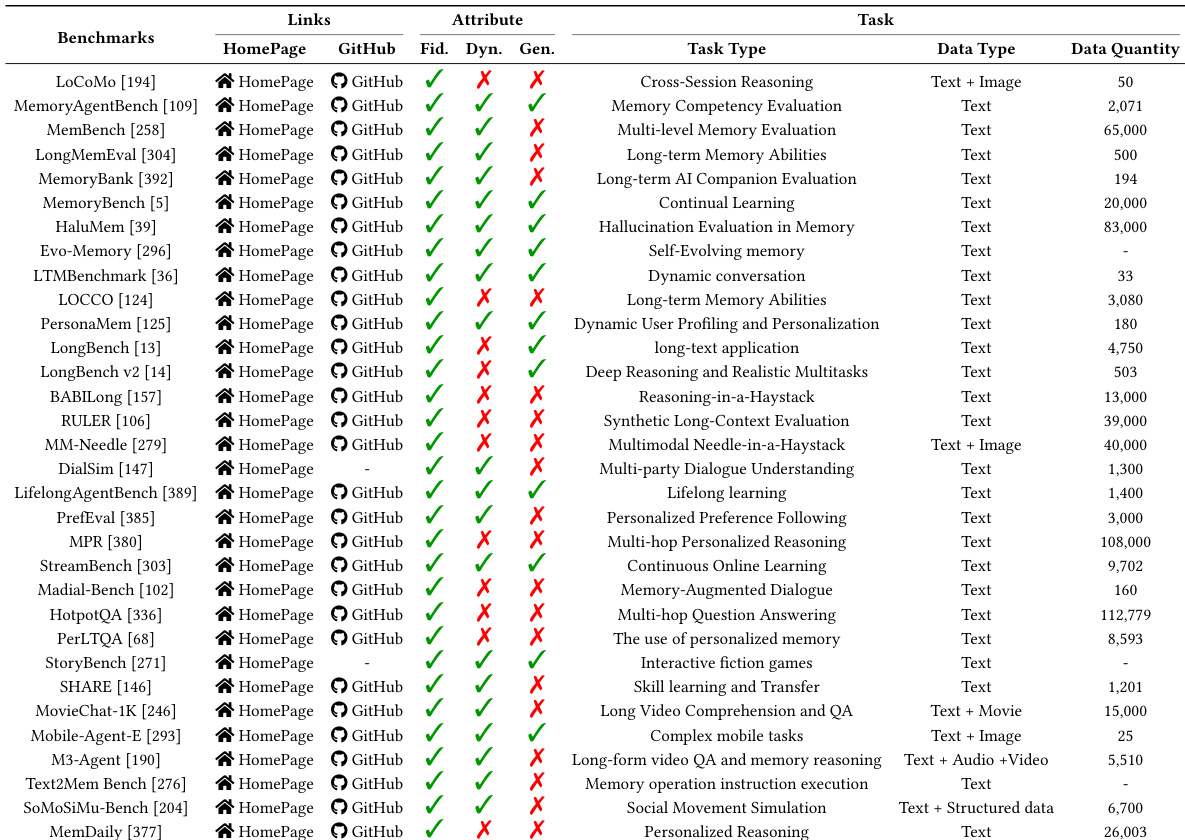

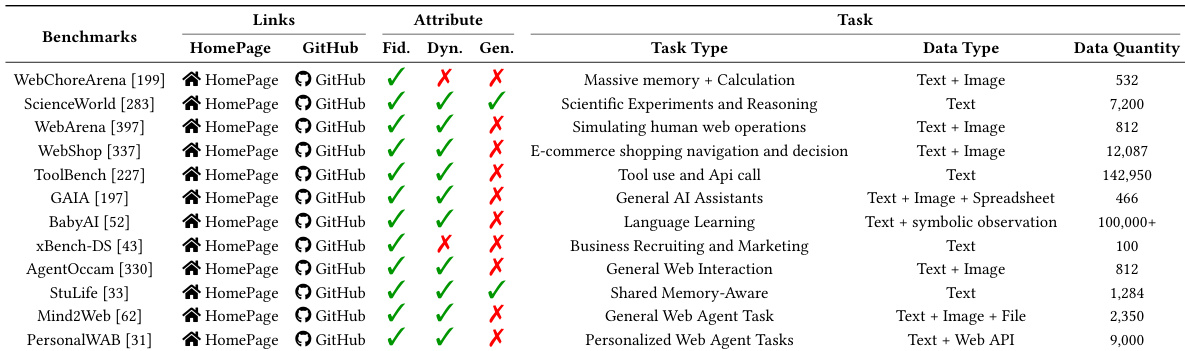

- The dataset comprises a curated collection of benchmarks for evaluating memory capabilities in LLM-based agents, divided into two main categories: semantic-oriented and episodic-oriented benchmarks.

- Semantic-oriented benchmarks focus on internal state management, including memory retention, retrieval fidelity, dynamic updates, and generalization across evolving contexts.

- Key benchmarks include LoCoMo, LOCCO, BABILong, MPR, RULER, HotpotQA, PerLTQA, MemDaily, MemBench, LongMemEval, MemoryBank, DialSim, PrefEval, SHARE, LTMBenchmark, StoryBench, MemoryAgentBench, Evo-Memory, HaluMem, LifelongAgentBench, and StreamBench.

- These benchmarks vary in size and source, with most derived from dialogue datasets, long-form narrative corpora, or task-specific evaluation environments.

- Filtering rules prioritize tasks that test long-context retention, resistance to retrieval noise, and the ability to handle accumulating distractions over extended interactions.

- The paper uses these benchmarks to construct a training and evaluation mixture, assigning different weights based on the target memory attribute: Fidelity, Dynamics, and Generalization.

- Data processing includes standardization of input formats, alignment of metadata (e.g., conversation length, memory span), and segmentation of long dialogues into manageable context windows.

- A cropping strategy is applied to limit input length to 4K tokens, ensuring computational feasibility while preserving critical context.

- Metadata is constructed to track memory evolution across turns, including timestamps, update events, and error correction instances.

- The mixture ratio emphasizes Fidelity and Dynamics for core training, with Generalization benchmarks used for validation and fine-tuning.
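
The segmentation and cropping steps listed above could look roughly like the following. This is a minimal sketch under stated assumptions, not the pipeline described in the paper: the function name `segment_dialogue` is hypothetical, token counts are approximated by whitespace splitting rather than a model tokenizer, and oversized turns are cropped from the end (the source does not say which side is cropped).

```python
from typing import List


def segment_dialogue(turns: List[str], max_tokens: int = 4096) -> List[List[str]]:
    """Greedily pack consecutive turns into windows of at most max_tokens.

    Assumption: tokens are approximated by whitespace-separated words.
    """
    windows: List[List[str]] = []
    current: List[str] = []
    used = 0
    for turn in turns:
        words = turn.split()
        # Crop a single oversized turn so it fits into one window.
        if len(words) > max_tokens:
            words = words[:max_tokens]
            turn = " ".join(words)
        n = len(words)
        # Start a new window when the next turn would exceed the budget.
        if current and used + n > max_tokens:
            windows.append(current)
            current, used = [], 0
        current.append(turn)
        used += n
    if current:
        windows.append(current)
    return windows
```

Packing whole turns keeps each window aligned to turn boundaries, which makes it easier to attach per-turn metadata such as timestamps or update events.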

Method

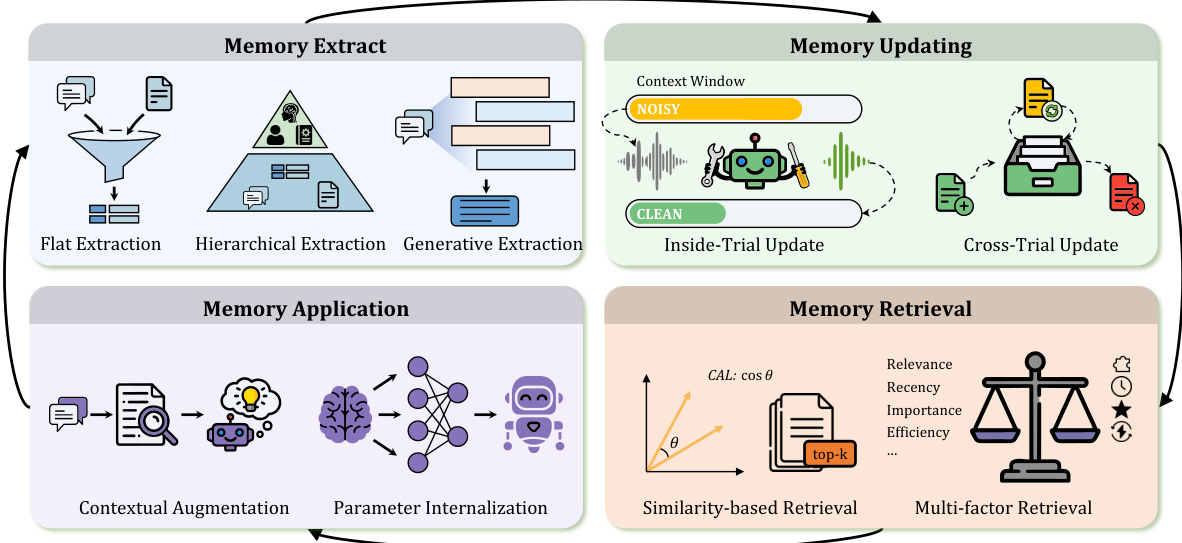

The authors leverage a comprehensive framework for agent memory that integrates structured storage, dynamic scheduling, and cognitive processing to enable persistent, adaptive, and experience-driven behavior. This framework is structured around a closed-loop pipeline of memory management, which includes extraction, updating, retrieval, and utilization, forming a cognitive operating system that allows agents to evolve from stateless responders into continuous learners capable of long-range reasoning. The overall architecture is illustrated in Figure 5, which depicts the cyclical nature of this process.

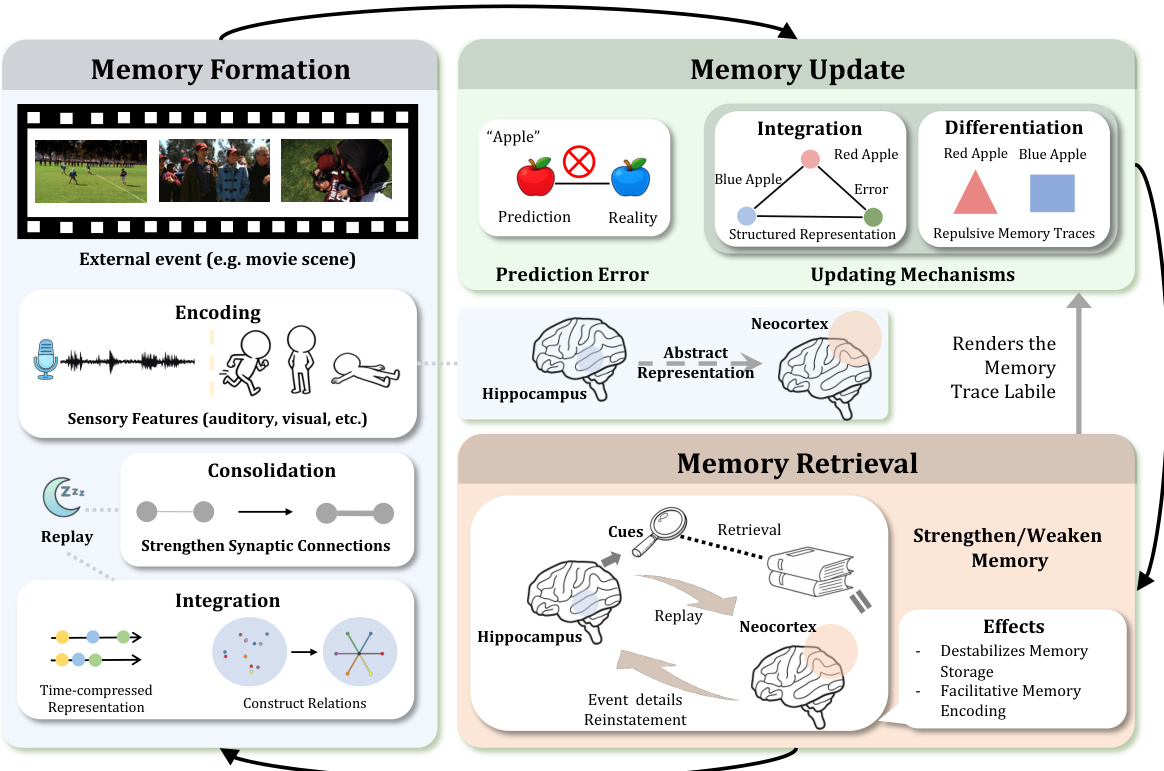

Memory extraction serves as the initial phase, transforming raw interaction streams into structured records. This process is categorized into three paradigms: flat extraction, which directly records or applies lightweight preprocessing to raw information; hierarchical extraction, which organizes fragmented information into ordered structures through multi-granular abstraction to emulate human cognitive flexibility; and generative extraction, which dynamically reconstructs context during reasoning to mitigate computational overhead. The authors further distinguish between episodic memory, which captures specific events and trajectories, and semantic memory, which abstracts factual knowledge and user profiles, enabling agents to maintain a coherent understanding of their environment and interactions.
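
To make the difference between flat and hierarchical extraction concrete, here is a minimal sketch. All names (`MemoryRecord`, `flat_extract`, `hierarchical_extract`) are hypothetical, and the group "summary" is a simple placeholder built from the first words of each record; a real system would presumably use an LLM or another abstraction step there.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class MemoryRecord:
    turn: int   # position of the message in the raw interaction stream
    text: str   # lightly preprocessed content


def flat_extract(stream: List[str]) -> List[MemoryRecord]:
    """Flat extraction: store each non-empty message after trimming whitespace."""
    return [MemoryRecord(i, msg.strip()) for i, msg in enumerate(stream) if msg.strip()]


def hierarchical_extract(records: List[MemoryRecord], group_size: int = 2) -> List[Dict]:
    """Hierarchical extraction: group records and attach a coarser summary node.

    The summary is a placeholder (first three words of each child record).
    """
    nodes = []
    for start in range(0, len(records), group_size):
        children = records[start:start + group_size]
        summary = " | ".join(" ".join(r.text.split()[:3]) for r in children)
        nodes.append({"summary": summary, "children": children})
    return nodes
```

The flat records keep every detail but grow linearly with the stream, while the hierarchical nodes let a retriever scan short summaries first and descend into children only when needed.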

Following extraction, memory updating ensures the system's plasticity and efficiency by balancing the intake of new information with the elimination of obsolete data. This process operates at two levels: inside-trial updating, which dynamically refreshes the immediate context window (working memory) during a specific task execution to address information decay and overload; and cross-trial updating, which manages the lifecycle of the external knowledge base (long-term memory) to resolve the conflict between infinite knowledge expansion and limited storage capacity. This involves selective retention and forgetting mechanisms, including biologically inspired strategies like the Ebbinghaus forgetting curve and competition-inhibition theory, as well as reinforcement learning to train agents to autonomously explore optimal policies for knowledge retention and forgetting.
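
As an illustration of forgetting-curve-based retention, the sketch below uses the common exponential formalization of the Ebbinghaus curve, R = exp(-t / S), where t is the time since last access and S is a stability term. The exact form, the threshold, and the doubling of strength on access are assumptions for illustration, and the names `MemoryItem`, `retention`, `reinforce`, and `forget` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class MemoryItem:
    text: str
    last_access: float      # time of last access, in hours
    strength: float = 1.0   # stability S; larger values decay more slowly


def retention(item: MemoryItem, now: float) -> float:
    """Estimated retention R = exp(-t / S), with t the hours since last access."""
    elapsed = max(0.0, now - item.last_access)
    return math.exp(-elapsed / item.strength)


def reinforce(item: MemoryItem, now: float, boost: float = 2.0) -> None:
    """Accessing a memory resets its clock and increases its stability."""
    item.strength *= boost
    item.last_access = now


def forget(items: List[MemoryItem], now: float, threshold: float = 0.1) -> List[MemoryItem]:
    """Keep only items whose estimated retention is at or above the threshold."""
    return [m for m in items if retention(m, now) >= threshold]
```

Frequently accessed memories accumulate strength and survive pruning, while memories that are never recalled fall below the threshold and are dropped, which keeps the long-term store bounded.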

Memory retrieval acts as the critical bridge between retained experiences and dynamic decision-making. It is implemented as a selective activation mechanism driven by current contextual cues, filtering irrelevant noise to enable agents to leverage vast knowledge repositories within limited context windows. Retrieval strategies are categorized into similarity-based retrieval, which prioritizes semantic matching using encoders to map queries into high-dimensional vectors, and multi-factor retrieval, which integrates multidimensional metrics such as recency, importance, structural efficiency, and expected rewards to determine memory prioritization. This evolution towards structured and strategy-driven retrieval mechanisms allows agents to function as human-like cognitive guides.
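
The contrast between similarity-based and multi-factor retrieval can be sketched as a weighted score. This is an illustrative assumption, not a formula from the survey: the linear combination, the exponential recency decay, the default weights, and the names `cosine`, `score`, and `retrieve` are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# (text, embedding, age in hours, importance in [0, 1])
Memory = Tuple[str, Sequence[float], float, float]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def score(query: Sequence[float], mem: Memory,
          weights: Tuple[float, float, float] = (1.0, 1.0, 1.0),
          decay: float = 0.99) -> float:
    """Weighted sum of similarity, recency (decay ** age), and importance."""
    _, vec, age_hours, importance = mem
    w_sim, w_rec, w_imp = weights
    recency = decay ** age_hours
    return w_sim * cosine(query, vec) + w_rec * recency + w_imp * importance


def retrieve(query: Sequence[float], memories: List[Memory], k: int = 2,
             weights: Tuple[float, float, float] = (1.0, 1.0, 1.0)) -> List[str]:
    """Return the texts of the k highest-scoring memories."""
    ranked = sorted(memories, key=lambda m: score(query, m, weights), reverse=True)
    return [m[0] for m in ranked[:k]]
```

Setting the weights to `(1.0, 0.0, 0.0)` reduces this to pure similarity-based retrieval, so the same function covers both strategies and makes their difference easy to inspect.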

Finally, memory application guides behavior through two primary paradigms: contextual augmentation and parameter internalization. Contextual augmentation involves dynamically synthesizing fragmented information, such as by building task-optimized contexts from lossless storage or compressing historical interactions into a shared representation space, to maintain consistent personas and actively reuse past experiences for reasoning. Parameter internalization consolidates explicit memory into implicit parameters, transforming memory into a model through techniques like distillation, which enables low-cost experience recall and drives agent self-evolution. This process is further enhanced by reinforcement learning, where sampled trajectories are treated as episodic memories to internalize explored strategies, thereby eliminating retrieval latency and enhancing decision stability.

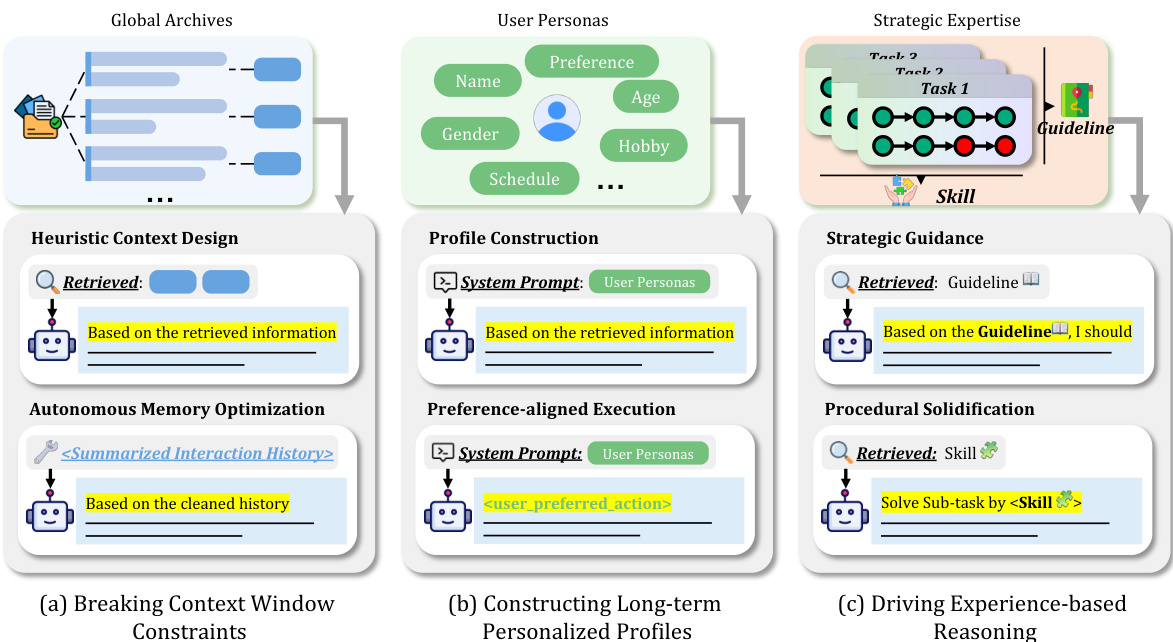

The framework is designed to address key challenges in long-horizon interactions, such as breaking context window constraints and constructing long-term personalized profiles. To overcome the physical limitations of context windows, the authors employ heuristic context design, which utilizes hierarchical structural designs for physical compression and virtualization indexing, and autonomous memory optimization, which internalizes memory management as intrinsic agent actions to achieve end-to-end autonomous optimization. This allows agents to map infinite interaction streams into limited attention budgets, shifting from passive linear truncation to dynamic context reconstruction. For personalized experiences, the framework constructs long-term user profiles by distilling core traits from complex interaction streams, enabling agents to adapt to users across two dimensions: profile construction and preference-aligned execution. This ensures that agents maintain a coherent cognition of "who the user is" and "how the relationship stands" throughout long-horizon interactions.
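
The shift from passive linear truncation to dynamic context reconstruction can be illustrated with a small budgeted selection routine. This is a sketch under assumptions: the function name `build_context` is hypothetical, the priority values are given rather than learned, and token cost is approximated by whitespace-separated words.

```python
from typing import List, Tuple

# (turn index, text, priority); priorities are assumed to be provided upstream
Entry = Tuple[int, str, float]


def build_context(memories: List[Entry], budget: int) -> List[str]:
    """Select high-priority memories that fit the budget, then restore turn order.

    Linear truncation would keep only the most recent turns; this keeps the
    most important ones that fit, regardless of how old they are.
    """
    chosen: List[Tuple[int, str]] = []
    used = 0
    for turn, text, _prio in sorted(memories, key=lambda m: m[2], reverse=True):
        cost = len(text.split())  # word count as a rough token proxy
        if used + cost <= budget:
            chosen.append((turn, text))
            used += cost
    # Present the selected memories in their original chronological order.
    return [text for _turn, text in sorted(chosen)]
```

For example, with a budget of 10 words, an early high-priority fact about the user is kept while a later low-priority turn is dropped, which a recency-only truncation would not do.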

Experiment

- Episodic-oriented benchmarks validate that memory systems enhance agent performance in complex, real-world tasks by enabling long-term state tracking, dynamic updates, and cross-session reasoning.

- On WebChoreArena, WebArena, and WebShop, agents with efficient memory achieve higher functional correctness and logical completeness in dynamic web navigation, demonstrating the importance of memory in maintaining consistency across long task flows.

- On ToolBench, GAIA, and xBench-DS, memory enables accurate tool schema retrieval and context preservation in multimodal, long-horizon workflows, reducing execution hallucinations and supporting adaptive trial-and-error mechanisms.

- In ScienceWorld and BabyAI, memory improves sample efficiency and causal inference by allowing agents to retain and combine sub-goals across long sequences, while Mind2Web and PersonalWAB show memory enables cross-domain generalization and personalized intent alignment under noisy, heterogeneous environments.

- AgentOccam reveals that memory must support observation pruning and reconstruction to maintain effective perception-action alignment in complex web environments.

- Extraction-based attacks demonstrate that memory can leak sensitive user data; Wang et al. successfully extracted private interaction history via black-box prompt attacks, and Zeng et al. quantified privacy risks in RAG systems.

- Poisoning-based attacks show that malicious content injected into memory can hijack agent behavior: Chen et al. and Cheng et al. used retrieval weight manipulation to create stealthy backdoors, while Abdelnabi et al. and Dong et al. showed that untrusted data can induce agents to store and act on malicious memories without backend access.

- Yang et al. and Bagwe et al. demonstrated that injecting noise or biased information degrades agent judgment and causes value distortion, leading to ineffective or discriminatory outputs.

The authors use episodic-oriented benchmarks to evaluate how memory systems enable agents to leverage past experiences for improved performance in complex real-world tasks. Results show that effective memory mechanisms are essential for maintaining consistency, enabling dynamic updates, and supporting generalization across diverse application scenarios such as web interaction, tool use, and environmental reasoning.

The authors use Table 2 to present a comparative analysis of episodic-oriented benchmarks for evaluating agent memory systems, focusing on their attributes related to fidelity, dynamics, and generalization. Results show that while many benchmarks support long-term memory evaluation and dynamic reasoning, a significant number lack fidelity and generalization capabilities, indicating a gap in their ability to assess robust memory performance across diverse and complex task scenarios.